Hackernoon

Image Credit: Hackernoon

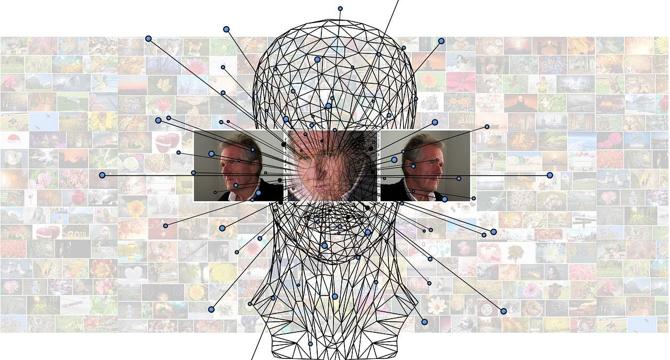

As AI Advances, Researchers Push for Models That Reason Like Humans

- As AI models become more powerful, they are also becoming harder to understand, which is driving interest in explainable AI (XAI) that can keep pace with these advances.

- Efforts to explain complex AI models such as large language models (LLMs) and generative tools are difficult because these models do not follow explicit rules and operate in high-dimensional spaces.

- Explainability is crucial for holding AI systems accountable, and it involves fairness and transparency, not just technical considerations.

- There is an ongoing shift towards AI models that reason more like humans, drawing on techniques such as Concept Activation Vectors, counterfactuals, and user-centered design to make model behavior easier to understand and better aligned with human reasoning.
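
To make one of the ideas above concrete, here is a minimal sketch of a counterfactual explanation. It is not taken from the article: it assumes a toy linear classifier with hypothetical weights `w` and bias `b`, and the helper names `score` and `nearest_counterfactual` are invented for illustration. For a linear model, the smallest change (in Euclidean distance) that flips a decision moves the input straight along the weight vector, which is what the sketch computes. Real LLMs and generative models are not linear, so this only illustrates the idea of answering "what would have to change for the outcome to differ?".

```python
def score(w, b, x):
    """Linear decision score: positive means 'approve', negative means 'reject'."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def nearest_counterfactual(w, b, x, margin=1e-3):
    """Return the closest point to x (Euclidean) whose score has the opposite sign.

    Assumes a linear model; `margin` pushes the point slightly past the
    decision boundary so the sign actually flips.
    """
    norm_sq = sum(wi * wi for wi in w)
    if norm_sq == 0:
        raise ValueError("weight vector must be non-zero")
    current = score(w, b, x)
    # Aim just across the boundary, on the other side from the current score.
    target = -margin if current >= 0 else margin
    step = (target - current) / norm_sq
    return [xi + step * wi for xi, wi in zip(x, w)]


# Hypothetical model and input, chosen only for illustration.
w = [2.0, -1.0]
b = -1.0
x = [3.0, 1.0]

x_cf = nearest_counterfactual(w, b, x)
changes = [cf - orig for cf, orig in zip(x_cf, x)]
print("original score:", score(w, b, x))
print("counterfactual score:", score(w, b, x_cf))
print("change per feature:", changes)
```

The per-feature change is the explanation a user would read: it states how much each input would need to move for the model to decide differently.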
