Medium

Image Credit: Medium

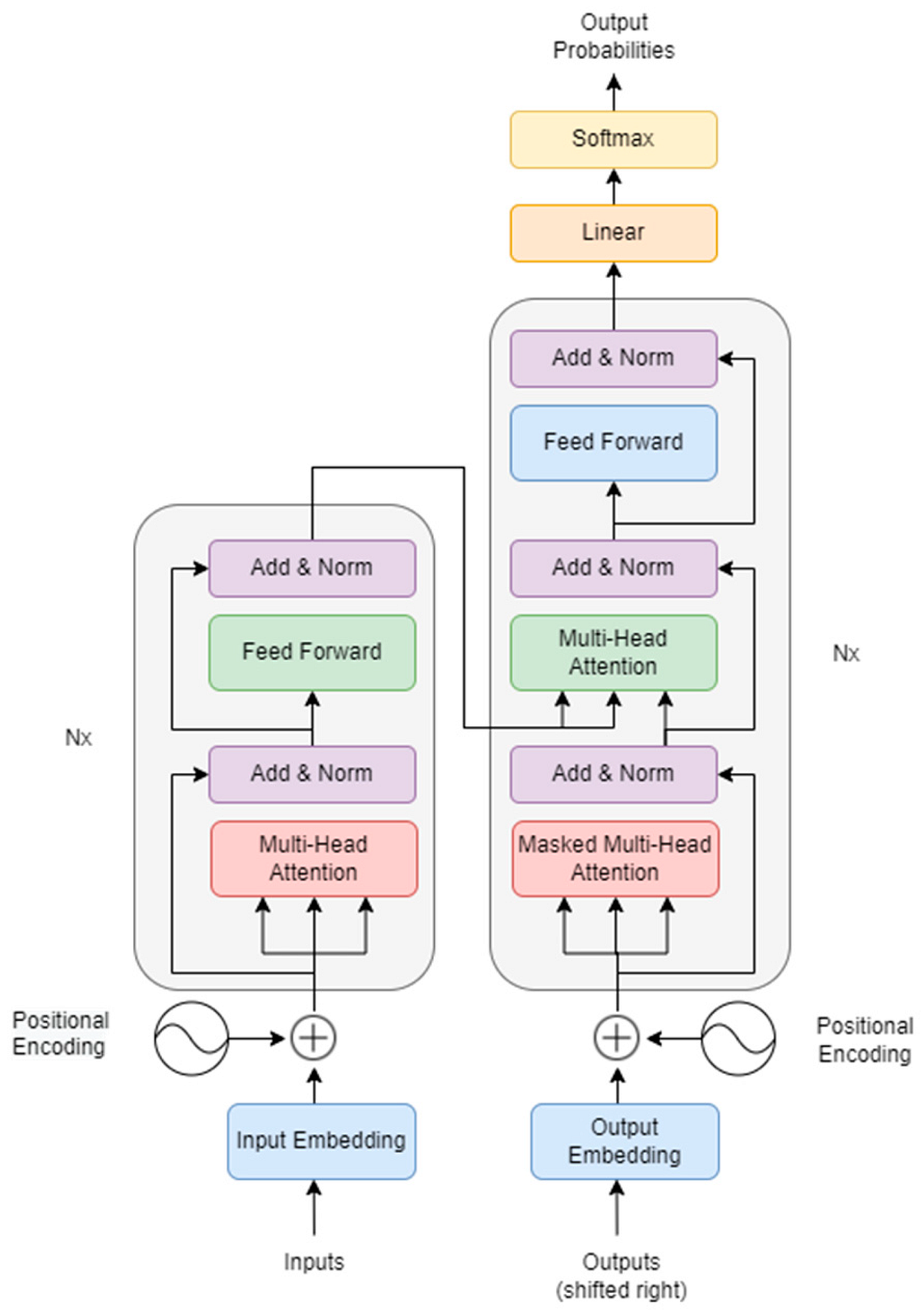

BERT (Bidirectional Encoder Representations from Transformers)

- BERT (Bidirectional Encoder Representations from Transformers) is a deep learning language model introduced by Google in 2018.

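- BERT reads text bidirectionally: when it represents a word, it uses the words to its left and to its right at the same time, unlike earlier NLP models that processed text in a single direction (left-to-right or right-to-left).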

- BERT's main strength is that it infers a word's meaning from its surrounding words, so the same word gets a different representation in different sentences. This made it a major advance for NLP tasks.

- BERT is built on the transformer encoder. Its components include token embeddings, position embeddings (learned, rather than fixed sinusoidal encodings), segment embeddings, multi-head self-attention, and a stack of encoder layers.
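
To make the bidirectional idea concrete, here is a minimal sketch of single-head scaled dot-product self-attention in plain Python. It is an illustration under simplifying assumptions, not BERT's actual implementation: the three tokens and their 2-dimensional embeddings are made up, the query/key/value projections are left as the identity (BERT learns them), and there is one head and one layer. The `causal` flag is a hypothetical switch added only to contrast a left-to-right model with BERT-style attention over the whole sentence.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors, causal=False):
    """Single-head scaled dot-product self-attention (identity projections).

    vectors: one embedding (list of floats) per token.
    causal:  True  -> token i only sees tokens 0..i (left-to-right model).
             False -> token i sees every token (bidirectional, BERT-style).
    Returns (outputs, weights); weights[i][j] is how much token i attends to token j.
    """
    n, d = len(vectors), len(vectors[0])
    outputs, weights = [], []
    for i, query in enumerate(vectors):
        visible = range(i + 1) if causal else range(n)
        scores = [
            sum(q * k for q, k in zip(query, vectors[j])) / math.sqrt(d)
            for j in visible
        ]
        row = [0.0] * n  # positions outside `visible` keep weight 0
        for j, p in zip(visible, softmax(scores)):
            row[j] = p
        weights.append(row)
        # Each output is a weighted average of the visible token vectors.
        outputs.append(
            [sum(row[j] * vectors[j][k] for j in range(n)) for k in range(d)]
        )
    return outputs, weights


# Toy, made-up embeddings for illustration only.
tokens = ["river", "bank", "flooded"]
embeddings = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]

_, bidirectional = self_attention(embeddings, causal=False)
_, left_to_right = self_attention(embeddings, causal=True)

for name, row_bi, row_lr in zip(tokens, bidirectional, left_to_right):
    print(name, [round(w, 2) for w in row_bi], [round(w, 2) for w in row_lr])
```

In the bidirectional case every row has non-zero weight on all three tokens, so the representation of "bank" can draw on "flooded" to its right as well as "river" to its left. With `causal=True` the weights on later tokens are zero, which is the restriction BERT's encoder avoids.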
