Medium


Beyond the Curve: The Magic of Sigmoid + Code

- The AND gate is a basic digital logic gate that implements logical conjunction (∧) from mathematical logic.

- The activation function in an artificial neural network (ANN) plays a critical role in determining the output of a neuron.

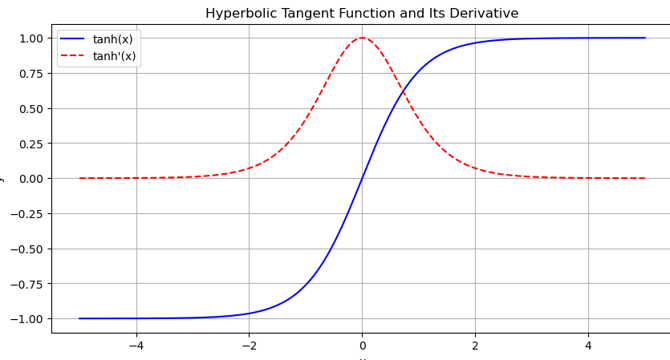

- Activation functions like ReLU, Sigmoid, and Tanh introduce non-linearities, enabling the network to learn complex patterns.

- Activation functions like Sigmoid and Tanh are differentiable, meaning that their derivatives can be used in backpropagation to update the network’s weights during training.

- The sigmoid function is one of the best-known activation functions and is widely used for classification tasks.

- The sigmoid function is especially valuable in binary classification tasks, where we predict one of two classes (e.g., yes/no, 0/1).

- The sigmoid function, σ(x) = 1 / (1 + e^(−x)), is an S-shaped curve. For any real value of x, it outputs a value strictly between 0 and 1.

- This squashing property makes sigmoid useful for models whose output must lie between 0 and 1, such as a predicted probability in binary classification.

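- While sigmoid can work well as an output activation in binary classification, it’s generally not recommended for hidden layers in deep networks: its derivative never exceeds 0.25 and approaches zero for large |x|, which contributes to vanishing gradients.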

- For hidden layers, the hyperbolic tangent (tanh) function is often preferred over sigmoid, since its output is zero-centered in the range (−1, 1).
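
To make the points above concrete, here is a minimal sketch in plain Python. It is not the article's own code: the function names `sigmoid`, `sigmoid_grad`, and `and_gate` are illustrative, and the AND-gate weights (10, 10) and bias (−15) are hand-picked assumptions rather than trained values. It shows the (0, 1) output range, the derivative used in backpropagation, and how a single sigmoid neuron can reproduce the AND gate.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^-x), written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # x < 0, so exp(x) cannot overflow
    return z / (1.0 + z)


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid: s * (1 - s). Its maximum is 0.25, at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


def and_gate(a: int, b: int) -> int:
    """Single sigmoid neuron acting as an AND gate.

    The weights and bias are assumed, hand-picked values (not learned):
    the weighted sum is positive only when both inputs are 1.
    """
    z = 10.0 * a + 10.0 * b - 15.0
    return int(sigmoid(z) >= 0.5)  # threshold the output at 0.5


if __name__ == "__main__":
    # Compare sigmoid, its gradient, and tanh at a few sample points.
    for x in (-10.0, -1.0, 0.0, 1.0, 10.0):
        print(f"x={x:6.1f}  sigmoid={sigmoid(x):.5f}  "
              f"grad={sigmoid_grad(x):.5f}  tanh={math.tanh(x):.5f}")

    # Truth table of the sigmoid-based AND gate.
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, and_gate(a, b))
```

Running the script prints the gradient shrinking toward zero as |x| grows, which is the behavior behind the advice against sigmoid in hidden layers, while `math.tanh` stays centered on zero.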
