Medium

Image Credit: Medium

Bridging AI and Neuroscience: Insights into Natural Language Processing

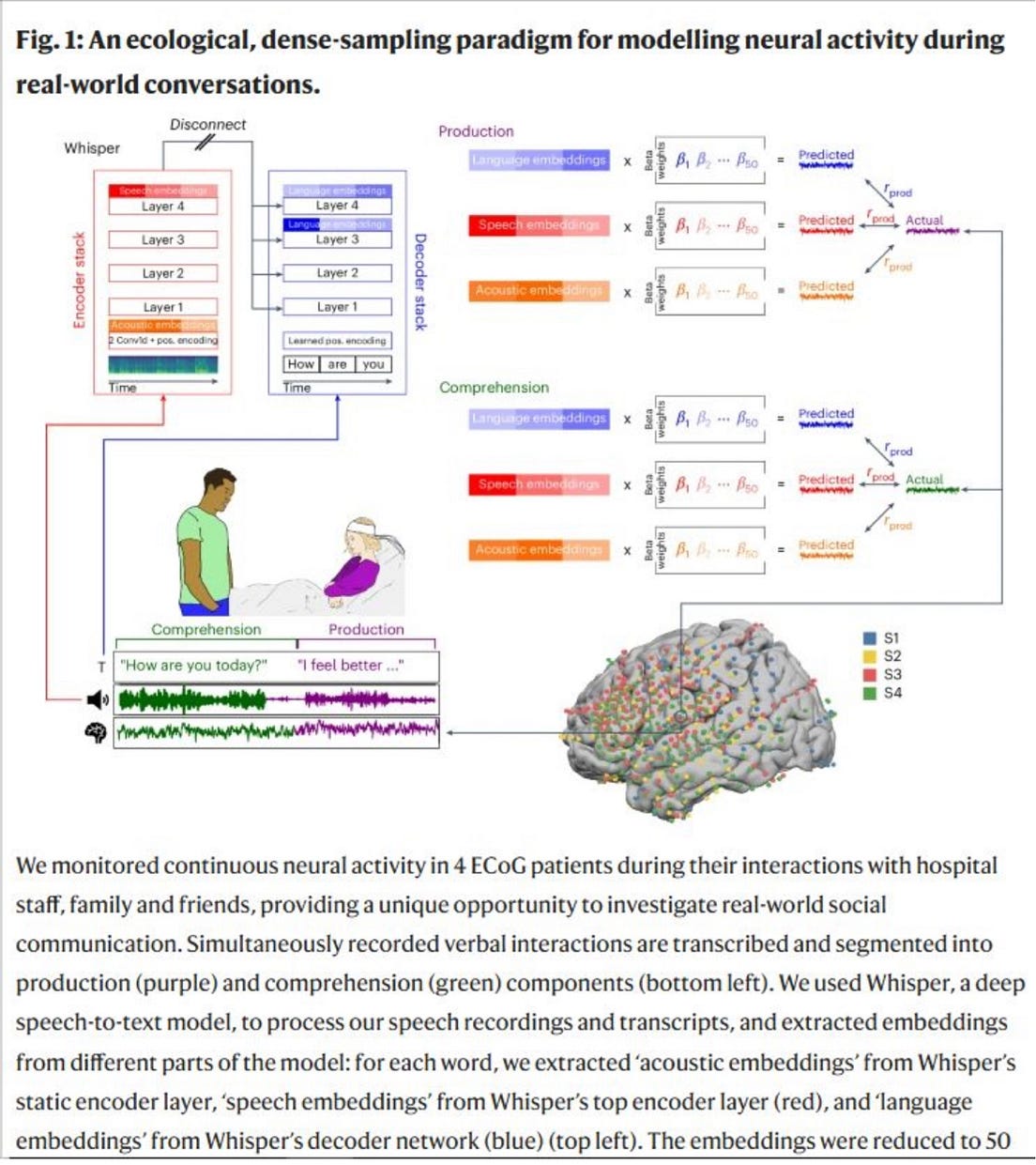

- Researchers introduce a computational framework that connects acoustic signals, speech, and word-level linguistic structures to study the neural basis of real-life conversations.

- Using electrocorticography (ECoG) recordings and OpenAI's Whisper speech-to-text model, they map the model's embeddings onto brain activity and find that the embeddings align with the brain's cortical hierarchy for language processing.

- The study shows that the Whisper model captures the temporal sequence of language-to-speech and speech-to-language encoding, and that it outperforms symbolic models at capturing the neural activity associated with natural speech and language.

- The integration of AI models like Whisper offers new avenues for understanding and potentially enhancing human communication through technology.
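
Mapping embeddings onto brain activity, as described above, is commonly done with a linear encoding model. The sketch below is only an illustration of that general idea, not the study's actual pipeline: it fits a ridge regression from per-word embeddings to per-electrode signals and scores it with cross-validated correlation. The function names, array shapes, regularization strength, and the synthetic data are all assumptions made for this example; real inputs would be Whisper embeddings and ECoG recordings.

```python
import numpy as np

def ridge_fit(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    A = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(A, X.T @ Y)

def encoding_scores(X, Y, alpha=1.0, n_folds=5):
    """Cross-validated Pearson r between predicted and observed activity.

    X: (n_words, n_dims) embeddings; Y: (n_words, n_electrodes) neural signal.
    Returns one correlation per electrode.
    """
    n = X.shape[0]
    preds = np.zeros_like(Y, dtype=float)
    for test_idx in np.array_split(np.arange(n), n_folds):
        train = np.ones(n, dtype=bool)
        train[test_idx] = False
        # Center with training-fold statistics only, to avoid leakage.
        x_mean = X[train].mean(axis=0)
        y_mean = Y[train].mean(axis=0)
        W = ridge_fit(X[train] - x_mean, Y[train] - y_mean, alpha)
        preds[test_idx] = (X[test_idx] - x_mean) @ W + y_mean
    # Pearson correlation per electrode over all held-out predictions.
    pc = preds - preds.mean(axis=0)
    yc = Y - Y.mean(axis=0)
    return (pc * yc).sum(axis=0) / (
        np.linalg.norm(pc, axis=0) * np.linalg.norm(yc, axis=0)
    )

# Synthetic stand-ins (hypothetical): random "embeddings" and "electrodes"
# whose signal is a noisy linear function of those embeddings.
rng = np.random.default_rng(0)
n_words, n_dims, n_electrodes = 500, 20, 4
embeddings = rng.standard_normal((n_words, n_dims))
true_weights = rng.standard_normal((n_dims, n_electrodes))
neural = embeddings @ true_weights + 0.5 * rng.standard_normal((n_words, n_electrodes))

scores = encoding_scores(embeddings, neural)
print(scores.round(2))
```

Under these assumptions, a high held-out correlation for an electrode means the embeddings linearly predict its activity. Repeating the fit with neural signals taken at different time offsets around each word would be one way to probe the temporal sequence mentioned in the summary.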
