Amazon

Image Credit: Amazon

Build multi-agent systems with LangGraph and Amazon Bedrock

- Large language models (LLMs) have transformed human-computer interaction, but real-world scenarios such as efficiently scheduling appointments require more complex application workflows that coordinate multiple AI capabilities.

- LLM agents face challenges including inefficient tool selection, limited context management, and specialization requirements; a multi-agent architecture can address these and improve efficiency and scalability.
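
To make the tool-selection and specialization point concrete, here is a minimal sketch in plain Python (no LLM calls, no LangGraph or Bedrock APIs). It contrasts one agent that holds every tool with specialist agents that each hold a small subset. The tool names, the `SPECIALISTS` mapping, and the keyword-based `route` function are all hypothetical stand-ins for decisions an LLM would normally make.

```python
from typing import Dict, List

# Hypothetical tool registry for an appointment-scheduling assistant.
ALL_TOOLS: List[str] = [
    "search_calendar", "create_event", "cancel_event",
    "lookup_patient", "update_patient_record",
    "send_email", "send_sms",
]

# Hypothetical split of the same tools across specialist agents.
SPECIALISTS: Dict[str, List[str]] = {
    "calendar_agent": ["search_calendar", "create_event", "cancel_event"],
    "records_agent": ["lookup_patient", "update_patient_record"],
    "notification_agent": ["send_email", "send_sms"],
}

# Simple substring rules standing in for an LLM-based routing decision.
ROUTING_KEYWORDS: Dict[str, List[str]] = {
    "calendar_agent": ["schedule", "book", "cancel", "availability"],
    "records_agent": ["patient", "record", "history"],
    "notification_agent": ["remind", "email", "text"],
}


def route(request: str) -> str:
    """Return the first specialist whose keywords appear in the request."""
    text = request.lower()
    for agent, keywords in ROUTING_KEYWORDS.items():
        if any(word in text for word in keywords):
            return agent
    return "calendar_agent"  # fallback when no keyword matches


def candidate_tools(request: str) -> List[str]:
    """Tools the chosen specialist has to pick from."""
    return SPECIALISTS[route(request)]


if __name__ == "__main__":
    request = "Please book a follow-up for next Tuesday"
    print(f"single agent chooses among {len(ALL_TOOLS)} tools")
    print(f"{route(request)} chooses among {len(candidate_tools(request))} tools")
```

The design point is that each specialist reasons over a shorter tool list and a narrower context; the cost is an extra routing step, which in a real system is itself an LLM call.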

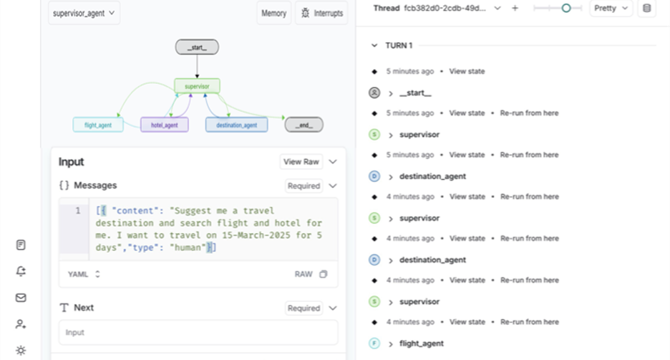

- Integrating the open-source multi-agent framework LangGraph with Amazon Bedrock lets developers build powerful, interactive multi-agent applications using graph-based orchestration.

- Amazon Bedrock Agents offer a collaborative environment for specialized agents to work together on complex tasks, enhancing task success rates and productivity.

- Multi-agent systems require coordination mechanisms for task distribution, resource allocation, and synchronization to optimize performance and maintain system-wide consistency.

- Memory management in multi-agent systems is crucial for efficient data retrieval, real-time interactions, and context synchronization between agents.

- Agent frameworks like LangGraph provide infrastructure for coordinating autonomous agents, managing communication, and orchestrating workflows, simplifying system development.

- LangGraph offers fine-grained control over agent workflows through state machines and a stateful architecture for multi-agent orchestration.

- LangGraph Studio allows developers to visualize and debug agent workflows, providing flexibility in configuration management and real-time monitoring of multi-agent interactions.

- The article highlights the Supervisor agentic pattern, in which specialized agents work under a central supervisor that distributes tasks among them to improve efficiency.
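
The supervisor pattern and graph-based orchestration described above can be sketched as a tiny state machine in plain Python. This is not the LangGraph API (in LangGraph, a `StateGraph` plays this role); it is a hand-rolled illustration under stated assumptions: the `State` schema, the two worker agents, their hard-coded outputs, and the fixed `plan` that replaces an LLM's routing decision are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

END = "__end__"  # local sentinel meaning "stop the graph"


@dataclass
class State:
    """Shared state passed from node to node (hypothetical schema)."""
    request: str
    pending: List[str] = field(default_factory=list)  # workers still to run
    results: Dict[str, str] = field(default_factory=dict)  # worker -> output
    next_node: str = "supervisor"


def supervisor(state: State) -> State:
    """Central supervisor: dispatch the next pending worker, or finish."""
    state.next_node = state.pending.pop(0) if state.pending else END
    return state


def calendar_agent(state: State) -> State:
    state.results["calendar_agent"] = "Tuesday 10:00"  # hypothetical slot
    state.next_node = "supervisor"  # workers always report back
    return state


def notification_agent(state: State) -> State:
    slot = state.results.get("calendar_agent", "an unknown time")
    state.results["notification_agent"] = f"sent confirmation for {slot}"
    state.next_node = "supervisor"
    return state


# Nodes of the graph; edges are expressed through state.next_node.
NODES: Dict[str, Callable[[State], State]] = {
    "supervisor": supervisor,
    "calendar_agent": calendar_agent,
    "notification_agent": notification_agent,
}


def run(state: State, max_steps: int = 20) -> State:
    """Follow next_node until END; max_steps guards against cycles."""
    for _ in range(max_steps):
        if state.next_node == END:
            return state
        state = NODES[state.next_node](state)
    raise RuntimeError("graph did not reach END within max_steps")


if __name__ == "__main__":
    plan = ["calendar_agent", "notification_agent"]  # stands in for an LLM plan
    final = run(State(request="Book a dentist visit and confirm by email",
                      pending=list(plan)))
    for agent, output in final.results.items():
        print(f"{agent}: {output}")
```

Routing every worker back through the supervisor keeps task distribution in one place, and the later worker reads the earlier worker's result from the shared state rather than from a direct message.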
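
For the memory-management point, the following is a minimal sketch of one way agents can keep context synchronized: a shared append-only log that every agent can read, private notes that stay with one agent, and a per-agent cursor so each sync returns only what was added since that agent last looked. The `SharedMemory` class and its methods are hypothetical and do not correspond to a LangGraph or Amazon Bedrock API.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class SharedMemory:
    """Hypothetical memory layer: one shared log plus private per-agent notes."""
    log: List[Tuple[str, str]] = field(default_factory=list)  # (author, message)
    private: Dict[str, List[str]] = field(default_factory=lambda: defaultdict(list))
    cursors: Dict[str, int] = field(default_factory=lambda: defaultdict(int))

    def publish(self, author: str, message: str) -> None:
        """Append a message that every agent can read."""
        self.log.append((author, message))

    def note(self, agent: str, text: str) -> None:
        """Store working notes that are never shared with other agents."""
        self.private[agent].append(text)

    def sync(self, agent: str) -> List[Tuple[str, str]]:
        """Return shared messages appended since this agent's last sync."""
        start = self.cursors[agent]
        new = self.log[start:]
        self.cursors[agent] = len(self.log)
        return new


if __name__ == "__main__":
    memory = SharedMemory()
    memory.publish("calendar_agent", "Booked Tuesday 10:00")
    memory.note("calendar_agent", "Also free: Wednesday 14:00")
    print(memory.sync("notification_agent"))  # [('calendar_agent', 'Booked Tuesday 10:00')]
    memory.publish("notification_agent", "Sent confirmation email")
    print(memory.sync("notification_agent"))  # [('notification_agent', 'Sent confirmation email')]
```

Returning only the delta keeps each agent's prompt small, while the private notes keep intermediate reasoning out of other agents' context.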
