The New Stack


Image Credit: The New Stack

Build Scalable LLM Apps With Kubernetes: A Step-by-Step Guide

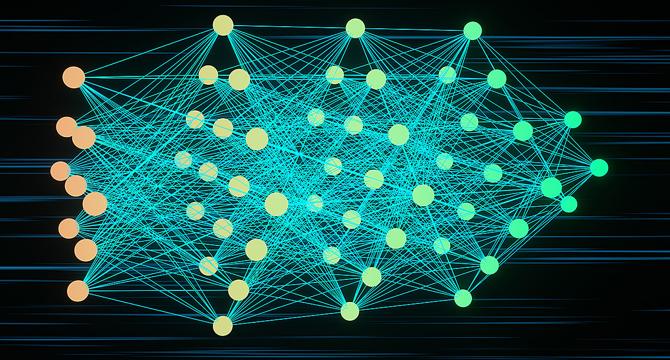

- Large language models (LLMs) such as GPT-4 have transformed AI applications across many sectors, driving advances in natural language processing, conversational AI, and content creation.

- Deploying LLMs in real-world settings is challenging: they have heavy computational requirements, and production use demands scalability and efficient traffic management.

- Kubernetes, a leading container orchestration platform, provides a framework for managing and scaling LLM-based applications in a cloud-native ecosystem while maintaining performance and flexibility.

- This step-by-step guide focuses on deploying and scaling LLM-powered applications with Kubernetes, a key skill for moving AI models from research into production.

- The process involves containerizing LLM applications, deploying them on Kubernetes, configuring autoscaling to handle fluctuating demand, and managing user traffic for optimal performance.

- Prerequisites include basic Kubernetes knowledge, Docker installed, a Kubernetes cluster that is already set up, and the OpenAI and Flask packages installed in your Python environment for building the LLM application.

- The steps are: create an LLM-powered application in Python, containerize it with Docker, build and push the Docker image, deploy the application to Kubernetes, configure autoscaling, and set up monitoring and logging.

- For more advanced workloads, further enhancements include adopting a service mesh, running multicluster deployments, and integrating CI/CD automation.

- Overall, building and deploying scalable LLM applications with Kubernetes can be complex, but it is rewarding: it lets organizations create robust, production-ready AI solutions.

- Kubernetes features such as autoscaling, monitoring, and service discovery equip applications to handle real-world demand and provide a foundation for exploring more advanced enhancements.
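
The first step listed above is creating an LLM-powered application in Python. The guide uses Flask and the OpenAI library; the sketch below swaps in Python's standard-library `http.server` so it runs with no dependencies. `generate_reply` is a hypothetical placeholder for the model call, and the `/chat` and `/healthz` routes, the `serve` helper, and port 8080 are illustrative assumptions, not the guide's code.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def generate_reply(prompt: str) -> str:
    """Hypothetical placeholder for the model call.

    A real app would call an LLM API here; echoing the prompt keeps the
    sketch runnable without an API key or network access.
    """
    return f"Echo: {prompt}"


def handle_chat(raw_body: bytes) -> tuple[int, dict]:
    """Parse a /chat request body and return (HTTP status, JSON payload)."""
    try:
        prompt = json.loads(raw_body or b"{}")["prompt"]
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, a missing field, or a body that is not a JSON object.
        return 400, {"error": "expected a JSON object with a 'prompt' field"}
    return 200, {"reply": generate_reply(str(prompt))}


class ChatHandler(BaseHTTPRequestHandler):
    def _send_json(self, status: int, payload: dict) -> None:
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_GET(self) -> None:
        # Cheap endpoint for Kubernetes liveness/readiness probes.
        if self.path == "/healthz":
            self._send_json(200, {"status": "ok"})
        else:
            self._send_json(404, {"error": "not found"})

    def do_POST(self) -> None:
        if self.path != "/chat":
            self._send_json(404, {"error": "not found"})
            return
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_chat(self.rfile.read(length))
        self._send_json(status, payload)


def serve(port: int = 8080) -> None:
    # Bind to all interfaces so the app is reachable from outside its container.
    HTTPServer(("0.0.0.0", port), ChatHandler).serve_forever()
```

Keeping request parsing in the pure `handle_chat` function makes it testable without starting a server. If the file were saved as a hypothetical `app.py`, a container entrypoint could run `python -c "from app import serve; serve()"`.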
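
Deploying to Kubernetes usually means a Deployment (which keeps a set of identical pods running) plus a Service (which gives them a stable address and load-balances traffic across them). The sketch below builds both as a Python dict that serializes to a JSON `List` that `kubectl apply` accepts. The image name, resource sizes, replica count, and the `openai-secret` Secret are hypothetical values chosen for illustration; `OPENAI_API_KEY` is the environment variable the OpenAI Python library reads by default.

```python
import json


def llm_app_manifests(name: str, image: str, replicas: int = 2,
                      container_port: int = 8080) -> dict:
    """Build a Deployment and a Service as a single kubectl-applicable List."""
    labels = {"app": name}
    container = {
        "name": name,
        "image": image,
        "ports": [{"containerPort": container_port}],
        # CPU requests are what CPU-utilization autoscaling measures against.
        "resources": {
            "requests": {"cpu": "250m", "memory": "256Mi"},
            "limits": {"cpu": "500m", "memory": "512Mi"},
        },
        # Read the API key from a Secret instead of baking it into the image.
        "env": [{
            "name": "OPENAI_API_KEY",
            "valueFrom": {"secretKeyRef": {"name": "openai-secret", "key": "api-key"}},
        }],
        # Only route traffic to pods whose health endpoint answers.
        "readinessProbe": {"httpGet": {"path": "/healthz", "port": container_port}},
    }
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},  # must match the pod template labels
            "template": {"metadata": {"labels": labels}, "spec": {"containers": [container]}},
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",  # external IP on platforms that support it
            "selector": labels,  # load-balances across all pods with these labels
            "ports": [{"port": 80, "targetPort": container_port}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}


if __name__ == "__main__":
    print(json.dumps(llm_app_manifests("llm-app", "registry.example.com/llm-app:1.0"), indent=2))
```

If this were saved as a hypothetical `manifests.py`, running `python manifests.py > llm-app.json` and then `kubectl apply -f llm-app.json` would create both objects, since kubectl accepts JSON as well as YAML. The referenced Secret could be created with `kubectl create secret generic openai-secret --from-literal=api-key=<your-key>`. Inside the cluster, other workloads can reach the app through Service discovery at `llm-app.<namespace>.svc.cluster.local`, assuming the default cluster domain.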
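
Configuring autoscaling in Kubernetes is typically done with a HorizontalPodAutoscaler (HPA). The summary does not say which metric the guide scales on, so the sketch below assumes CPU utilization and builds an `autoscaling/v2` HPA targeting a Deployment. The 70% target, the 2 to 10 replica range, and the `-hpa` name suffix are illustrative assumptions.

```python
import json


def hpa_manifest(target: str, min_replicas: int = 2, max_replicas: int = 10,
                 cpu_utilization: int = 70) -> dict:
    """Build an autoscaling/v2 HorizontalPodAutoscaler for a Deployment."""
    if not 1 <= min_replicas <= max_replicas:
        raise ValueError("need 1 <= min_replicas <= max_replicas")
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{target}-hpa"},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": target},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    # Percentage of the pods' CPU requests, averaged across pods.
                    "target": {"type": "Utilization", "averageUtilization": cpu_utilization},
                },
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(hpa_manifest("llm-app"), indent=2))
```

CPU-based scaling only works if the cluster serves the resource metrics API (commonly via metrics-server) and the pods declare CPU requests; `kubectl get hpa` then shows current versus target utilization. For LLM apps whose bottleneck is request latency or queue depth rather than CPU, a custom metric may be a better fit.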
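
To see how an HPA reacts to fluctuating demand, it helps to know its core rule as documented by Kubernetes: desired replicas = ceil(current replicas * current metric / target metric), with no change when that ratio is within a tolerance of 1.0 (0.1 by default), and the result kept between the min and max replica counts. The function below is a simplified sketch of that rule; it deliberately ignores stabilization windows, scaling policies, and unready or missing pods, which the real controller also considers.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified single-metric HPA scaling rule."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: avoid flapping by not scaling.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured replica range.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 3 replicas averaging 90% CPU against a 70% target give ceil(3 * 90 / 70) = ceil(3.86) = 4 replicas, while 72% against 70% falls inside the tolerance and leaves the count unchanged.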
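
The summary does not name the monitoring and logging stack the guide uses. One tool-agnostic starting point, offered here as an assumption rather than the guide's method, is to write structured JSON logs to stdout: Kubernetes captures container stdout, so the lines appear in `kubectl logs` and can be shipped by whatever log collector the cluster runs. The `fields` convention for attaching extra attributes such as latency is a hypothetical choice.

```python
import json
import logging
import sys
import time


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": time.strftime("%Y-%m-%dT%H:%M:%SZ", time.gmtime(record.created)),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Extra attributes passed as logger.info(..., extra={"fields": {...}}).
        fields = getattr(record, "fields", None)
        if isinstance(fields, dict):
            entry.update(fields)
        return json.dumps(entry)


def get_logger(name: str = "llm-app") -> logging.Logger:
    logger = logging.getLogger(name)
    if not logger.handlers:
        # Log to stdout so the container runtime captures it for `kubectl logs`.
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
        logger.setLevel(logging.INFO)
        logger.propagate = False  # avoid duplicate lines via the root logger
    return logger


if __name__ == "__main__":
    log = get_logger()
    start = time.perf_counter()
    # The model call would go here.
    elapsed_ms = (time.perf_counter() - start) * 1000
    log.info("chat request served",
             extra={"fields": {"status": 200, "latency_ms": round(elapsed_ms, 1)}})
```

One JSON object per line keeps logs machine-parseable, so per-request latency or error counts can later be aggregated without changing the application.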
