Medium

Image Credit: Medium

Building Neural Networks Manually from Scratch: A Beginner’s Hello World

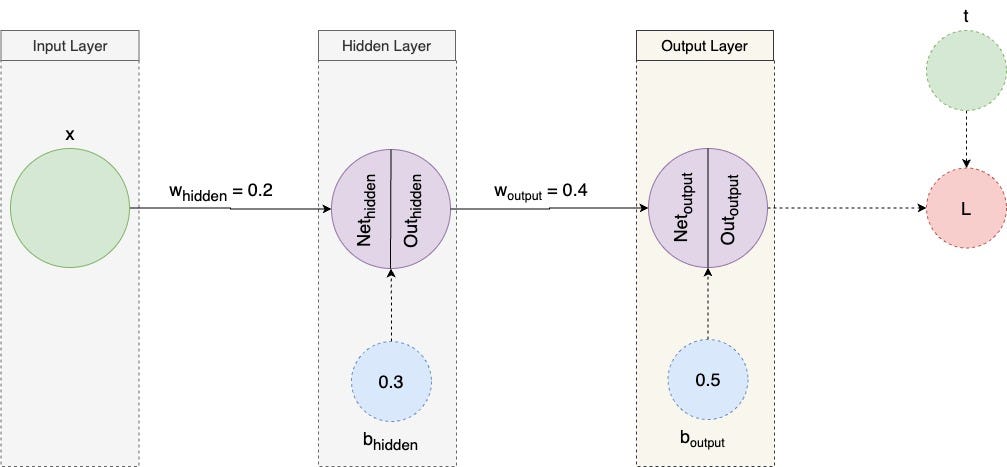

- The article explains how to build a neural network from scratch, step-by-step and without shortcuts.

- Training a neural network means measuring the error between its predictions and the targets, then adjusting its parameters to reduce that error.

- A learning rate scales the size of each adjustment; keeping it small enough prevents the updates from overshooting the target and zigzagging around it.

- Activation functions are essential for neural networks: they introduce non-linearity, allowing the network to model complex patterns that a stack of purely linear layers cannot.

- A bias term shifts the network's baseline output, enabling it to capture patterns that don't pass through the origin.

- The article provides an example of building a neural network to approximate the function y = x + 1.

- The article explains forward propagation (making predictions) and backpropagation (learning from mistakes to adjust the network's parameters).

- The article also explains derivatives and the chain rule, which are crucial for calculating gradients and improving a neural network's predictions.

- The code for a simple neural network is provided on GitHub for readers to experiment with.
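The error-and-adjust loop and the role of the learning rate can be illustrated with a minimal Python sketch. It assumes a one-weight model `y = w * x`, a squared-error loss, and a single hypothetical training example (x = 2, y = 4), so the ideal weight is 2; the function name `train_weight` is illustrative and not taken from the article.

```python
def train_weight(learning_rate: float, steps: int = 20) -> list[float]:
    """Fit y = w * x to one hypothetical example (x=2, y=4); return w after each step."""
    x, target = 2.0, 4.0
    w = 0.0  # initial guess
    history = []
    for _ in range(steps):
        prediction = w * x
        error = prediction - target       # how far off the current guess is
        gradient = 2.0 * error * x        # derivative of error**2 with respect to w
        w -= learning_rate * gradient     # adjust against the gradient, scaled by the learning rate
        history.append(w)
    return history

for lr in (0.05, 0.2, 0.3):
    print(lr, [round(w, 3) for w in train_weight(lr, steps=5)])
```

With a learning rate of 0.05, `w` creeps smoothly toward 2; at 0.2 it zigzags above and below 2 before settling; at 0.3 each step overshoots by more than the last and the weight diverges.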
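Why activation functions matter can be shown with a hypothetical two-hidden-unit network whose weights are picked by hand (+1 and -1 into the hidden layer, 1 and 1 out of it, no bias). ReLU is an assumed choice here; the article may use a different activation. With ReLU the network computes |x|; with the identity (no activation) the two paths cancel and it outputs 0 for every input, because stacked linear layers collapse into a single linear function.

```python
def relu(z: float) -> float:
    """Rectified linear unit: passes positive values, zeroes out negative ones."""
    return max(0.0, z)

def identity(z: float) -> float:
    """No activation at all: the layer stays purely linear."""
    return z

def tiny_network(x: float, activation) -> float:
    """Hypothetical network: hidden weights +1 and -1, output weights 1 and 1."""
    hidden = [activation(1.0 * x), activation(-1.0 * x)]
    return 1.0 * hidden[0] + 1.0 * hidden[1]

for x in (-3.0, 0.0, 2.5):
    print(x, tiny_network(x, relu), tiny_network(x, identity))
```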
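A quick check of why a function like y = x + 1 needs a bias, assuming five hypothetical sample points x = 0..4 and mean squared error as the measure of fit. Without a bias the model is `w * x`, which always predicts 0 at x = 0. Even the best such weight (least squares through the origin, w = Σxy / Σx²) leaves an error, while w = 1 with b = 1 fits exactly.

```python
# Hypothetical training data sampled from y = x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [x + 1.0 for x in xs]

def mse(w: float, b: float) -> float:
    """Mean squared error of the line w * x + b on the sample data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Best possible weight when the bias is forced to 0 (least squares through the origin)
w_no_bias = sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

print(f"no bias:   w={w_no_bias:.3f}, mse={mse(w_no_bias, 0.0):.3f}")
print(f"with bias: w=1.000, b=1.000, mse={mse(1.0, 1.0):.3f}")
```

On this data the bias-free model's best weight is 4/3 with an MSE of 1/3, whereas adding the bias drives the error to 0.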
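Forward and backward passes for the y = x + 1 example can be sketched with a single linear neuron (one weight, one bias, no activation). This is a minimal sketch under assumed settings, not the article's GitHub code: batch gradient descent on mean squared error, five hypothetical points x = 0..4, a learning rate of 0.05, and 1,000 epochs. The names `forward` and `train` are illustrative.

```python
# Hypothetical training data sampled from y = x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [x + 1.0 for x in xs]

def forward(x: float, w: float, b: float) -> float:
    """Forward propagation: one linear neuron computes w * x + b."""
    return w * x + b

def train(xs: list[float], ys: list[float],
          learning_rate: float = 0.05, epochs: int = 1000) -> tuple[float, float]:
    """Fit w and b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # start from an uninformed guess
    n = len(xs)
    for _ in range(epochs):
        grad_w, grad_b = 0.0, 0.0
        for x, y in zip(xs, ys):
            error = forward(x, w, b) - y  # forward pass: how wrong is the prediction?
            # Backward pass: gradients of error**2 with respect to w and b
            grad_w += 2.0 * error * x
            grad_b += 2.0 * error
        # Average over the batch and step against the gradient
        w -= learning_rate * grad_w / n
        b -= learning_rate * grad_b / n
    return w, b

w, b = train(xs, ys)
print(f"w={w:.4f}, b={b:.4f}, prediction at x=10: {forward(10.0, w, b):.4f}")
```

With these settings the learned values end up very close to w = 1 and b = 1, so the prediction at x = 10 is about 11. On this particular data set, a learning rate above roughly 0.15 makes the updates overshoot and diverge.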
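The chain rule can be checked numerically. The sketch below assumes a single neuron with a sigmoid activation and squared-error loss (the article's own network may differ), multiplies the local derivatives dL/da, da/dz, and dz/dw to get the gradient with respect to the weight, and compares that with a central finite-difference estimate. The sample values of w, b, x, and y are arbitrary.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def loss(w: float, b: float, x: float, y: float) -> float:
    """Squared error of a single sigmoid neuron."""
    return (sigmoid(w * x + b) - y) ** 2

def chain_rule_grad_w(w: float, b: float, x: float, y: float) -> float:
    """dL/dw assembled link by link with the chain rule."""
    z = w * x + b          # pre-activation
    a = sigmoid(z)         # activation
    dL_da = 2.0 * (a - y)  # derivative of (a - y)**2 with respect to a
    da_dz = a * (1.0 - a)  # derivative of the sigmoid with respect to z
    dz_dw = x              # derivative of w * x + b with respect to w
    return dL_da * da_dz * dz_dw

def numeric_grad_w(w: float, b: float, x: float, y: float, h: float = 1e-6) -> float:
    """Central finite difference: (L(w + h) - L(w - h)) / (2h)."""
    return (loss(w + h, b, x, y) - loss(w - h, b, x, y)) / (2.0 * h)

args = (0.5, -0.2, 1.5, 1.0)  # arbitrary w, b, x, y
print(chain_rule_grad_w(*args), numeric_grad_w(*args))
```

The two numbers should agree to many decimal places; a mismatch would point to a missing or wrong link in the chain.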
