Medium

Image Credit: Medium

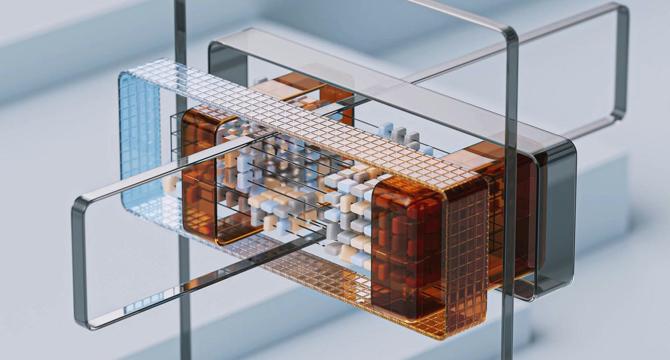

Building the Muon Optimizer in PyTorch: A Geometric Approach to Neural Network Optimization

- The Muon optimizer in PyTorch offers a new approach to neural network optimization, focusing on a geometric perspective.

- Muon stands out by accounting for how weight matrices affect a network's behavior, and it has set training-speed records on NanoGPT and CIFAR-10.

- It measures vectors and matrices with RMS-based norms, which bounds how much each weight matrix can change a layer's output and keeps training stable.

- Muon orthogonalizes each weight update: it pushes all singular values of the gradient matrix toward 1, using an iterative polynomial approximation instead of an explicit SVD.

- Muon's update rule subtracts a scaled, orthogonalized version of the gradient from each weight matrix, so updates behave consistently across layers.

- Implementing Muon in PyTorch involves defining the optimizer class and then extending it with the features needed for practical use.

- Muon's geometric perspective offers advantages like automatic learning rate transfer and principled parameter updates.

- It transforms neural networks into well-understood mathematical systems and simplifies hyperparameter tuning across different architectures.

- Muon's success suggests a future trend towards geometric optimization methods in the field of deep learning.

- Implementing Muon in PyTorch makes it accessible to the deep learning community, encouraging experimentation and contributions.
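
The points above about RMS norms, orthogonalization, and the update rule can be sketched in a few lines of PyTorch. This is a minimal illustration, not the article's implementation. It assumes the simplest cubic Newton-Schulz polynomial (published Muon code uses a tuned higher-order polynomial with fewer steps), a `sqrt(fan_out / fan_in)` scale factor, and plain momentum. The names `rms_to_rms_norm`, `orthogonalize`, and `ns_steps` are hypothetical and chosen for this example.

```python
import torch


def rms_to_rms_norm(W: torch.Tensor) -> torch.Tensor:
    """Largest factor by which W (fan_out x fan_in) can scale a vector's RMS norm."""
    fan_out, fan_in = W.shape
    return (fan_in / fan_out) ** 0.5 * torch.linalg.matrix_norm(W, ord=2)


def orthogonalize(G: torch.Tensor, steps: int = 10) -> torch.Tensor:
    """Approximate U @ V.T for G = U @ diag(S) @ V.T without computing an SVD.

    Assumption: cubic Newton-Schulz iteration X <- 1.5 X - 0.5 (X X^T) X,
    which drives every nonzero singular value of X toward 1.
    """
    # Frobenius norm >= spectral norm, so all singular values start in (0, 1].
    X = G / (G.norm() + 1e-7)
    transposed = X.shape[0] > X.shape[1]
    if transposed:  # keep the Gram matrix X @ X.T as small as possible
        X = X.T
    for _ in range(steps):
        X = 1.5 * X - 0.5 * (X @ X.T) @ X
    return X.T if transposed else X


class Muon(torch.optim.Optimizer):
    """Sketch of a Muon-style optimizer for 2-D weight matrices only."""

    def __init__(self, params, lr=0.02, momentum=0.9, ns_steps=10):
        super().__init__(params, dict(lr=lr, momentum=momentum, ns_steps=ns_steps))

    @torch.no_grad()
    def step(self, closure=None):
        loss = None
        if closure is not None:
            with torch.enable_grad():
                loss = closure()
        for group in self.param_groups:
            for p in group["params"]:
                if p.grad is None:
                    continue
                if p.ndim != 2:
                    raise ValueError("this sketch only handles 2-D weight matrices")
                state = self.state[p]
                if "momentum_buffer" not in state:
                    state["momentum_buffer"] = torch.zeros_like(p)
                buf = state["momentum_buffer"]
                buf.mul_(group["momentum"]).add_(p.grad)  # assumed: plain momentum
                update = orthogonalize(buf, group["ns_steps"])
                fan_out, fan_in = p.shape
                scale = (fan_out / fan_in) ** 0.5  # assumed scale factor
                # Subtract the scaled, orthogonalized gradient.
                p.add_(update, alpha=-group["lr"] * scale)
        return loss
```

With this scale factor, each update has an RMS-to-RMS norm of about 1 before the learning rate is applied, whatever the layer's shape. In practice, an optimizer like this would likely be applied only to hidden-layer weight matrices, with a conventional optimizer such as `torch.optim.AdamW` handling biases and other non-matrix parameters.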
