Medium


Image Credit: Medium

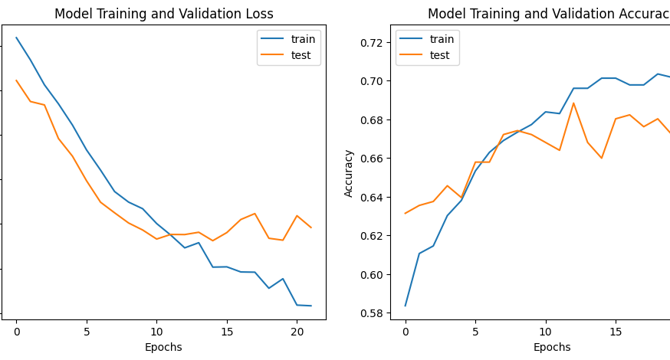

Deep Learning 101: Optimisation in Machine Learning

- Optimisation techniques are algorithms that adjust a model's parameters to minimise a loss function, which measures the difference between the model's predictions and the actual values.

- Feature scaling rescales the input variables so that they share a comparable range, which keeps features with large raw values from dominating the gradient updates.

- Batch normalisation normalises the inputs to a layer over each mini-batch, which helps to stabilise the learning process and allows for the use of higher learning rates.

- Optimisation techniques such as mini-batch gradient descent, momentum, RMSProp, Adam, and learning rate decay each have their own advantages and disadvantages.
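
To make two of the points above concrete, here is a minimal sketch in plain Python of feature scaling (standardisation to zero mean and unit variance) followed by mini-batch gradient descent with momentum on a one-variable linear model. The function names `standardise` and `minibatch_gd_momentum`, the toy data, and the hyperparameter values are all assumptions chosen for illustration; they do not come from the original article, and RMSProp, Adam, and learning rate decay are not shown.

```python
import random


def standardise(column):
    """Rescale a list of numbers to zero mean and unit variance."""
    mean = sum(column) / len(column)
    var = sum((x - mean) ** 2 for x in column) / len(column)
    std = var ** 0.5 or 1.0  # fall back to 1.0 for a constant column
    return [(x - mean) / std for x in column]


def minibatch_gd_momentum(xs, ys, lr=0.01, beta=0.9,
                          batch_size=4, epochs=300, seed=0):
    """Fit y = w * x + b by minimising mean squared error.

    Uses mini-batch gradient descent with classical momentum:
    velocity = beta * velocity + gradient; parameter -= lr * velocity.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0      # model parameters
    vw, vb = 0.0, 0.0    # momentum (velocity) terms
    data = list(zip(xs, ys))
    for _ in range(epochs):
        rng.shuffle(data)  # new mini-batch composition every epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradients of the mean squared error over this mini-batch.
            gw = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            gb = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            vw = beta * vw + gw
            vb = beta * vb + gb
            w -= lr * vw
            b -= lr * vb
    return w, b


# Hypothetical raw feature with a large range, scaled before training.
raw = [100.0, 200.0, 300.0, 400.0, 500.0, 600.0, 700.0, 800.0]
xs = standardise(raw)
ys = [3.0 * x + 2.0 for x in xs]  # noise-free toy targets
w, b = minibatch_gd_momentum(xs, ys)
print(w, b)
```

Because the toy targets are generated from a slope of 3 and an intercept of 2 on the scaled feature, the fitted `w` and `b` should approach those values. Training on the unscaled `raw` values with the same learning rate would likely diverge, which is the practical motivation for scaling features first.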
