MIT

Image Credit: MIT

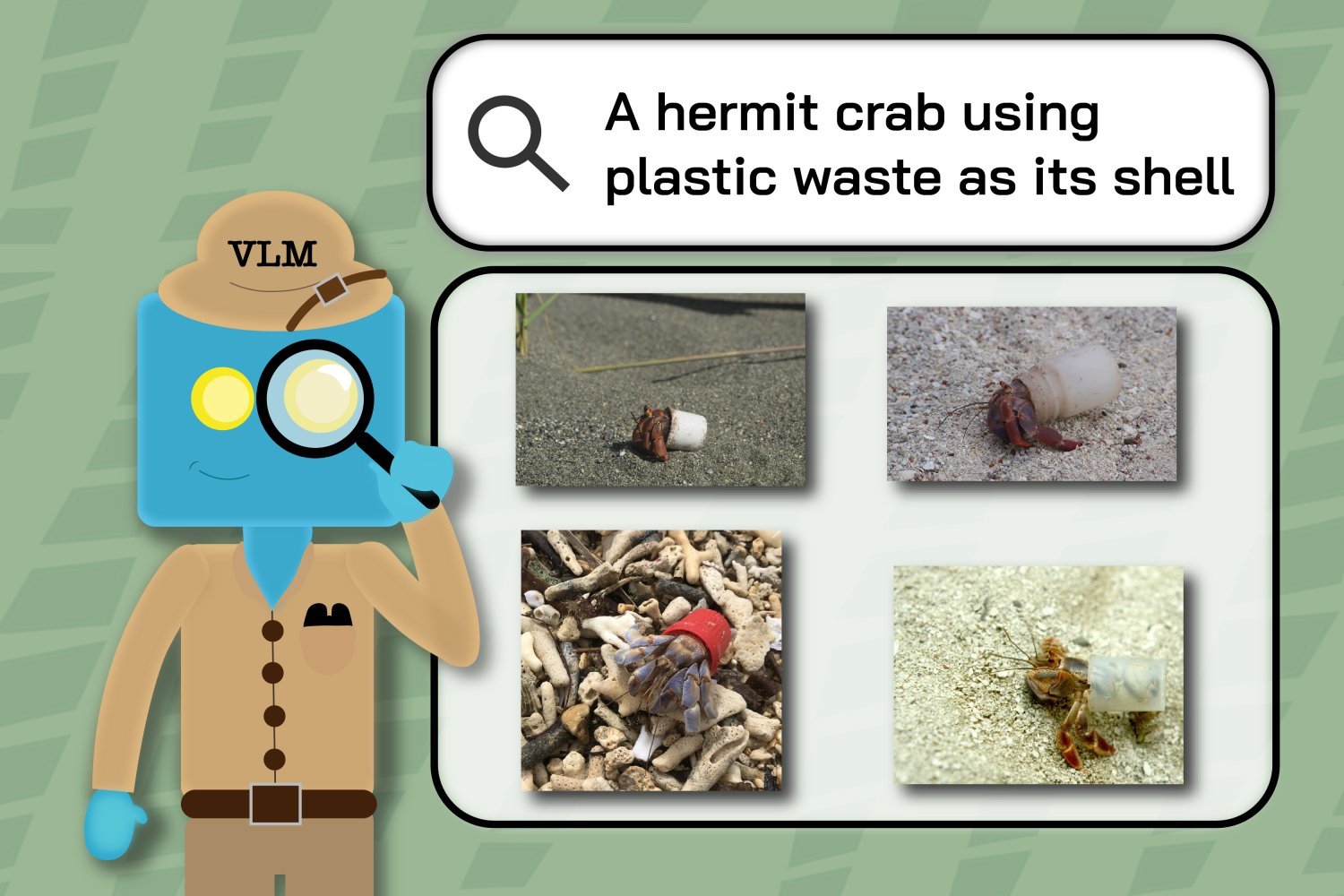

Ecologists find computer vision models’ blind spots in retrieving wildlife images

- Multimodal vision language models (VLMs), a class of computer vision models, are being evaluated as a way to help ecologists retrieve relevant images for research. A team of ecologists from MIT and University College London found that while VLMs performed reasonably well on straightforward queries, they struggled to identify the specific biological conditions or behaviours that experts asked for. The team used its INQUIRE dataset of five million wildlife images and 250 search prompts to evaluate the models.

- The most advanced VLMs were better at narrowing search results, but the findings suggest that more domain-specific training data is needed for ecological searches. The dataset has also proved useful for researchers who want to analyse large image collections in observation-intensive fields, reports MIT News.

- Such datasets offer a great research tool for biology, ecology and environmental science experts. They provide evidence of organisms' behaviours, migration patterns and responses to pollution and climate change.

- VLMs are trained on both text and images and can identify finer details, such as the specific trees in the background of a photograph. Even so, the queries ecologists pose call for more sophisticated image retrieval algorithms.

- MIT PhD student Edward Vendrow and colleagues hope that familiarising VLMs with more informative, biodiversity-specific data, such as the 33,000 carefully annotated nature photographs in the INQUIRE dataset, will help researchers find the exact images they need.

- Vendrow and his colleagues are also working on a query system that helps researchers and other users filter their searches by species, and on improving the re-ranking system.

- The team's work is supported by the US National Science Foundation/Natural Sciences and Engineering Research Council of Canada Global Center on AI and Biodiversity Change, a Royal Society Research Grant, and the Biome Health Project funded by the World Wildlife Fund United Kingdom.
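
The retrieval and re-ranking steps mentioned above can be pictured as a two-stage search. The sketch below is only an illustration under stated assumptions, not the INQUIRE team's method: the three-dimensional vectors stand in for embeddings a VLM would produce, the `relevance` scores stand in for a slower and more careful second-stage model, and every name in it (`retrieve`, `rerank`, `image_vecs`, the `img_*` ids) is hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, image_vecs, k):
    """Stage 1: ids of the k images whose embeddings are closest to the query."""
    ranked = sorted(
        image_vecs,
        key=lambda image_id: cosine(query_vec, image_vecs[image_id]),
        reverse=True,
    )
    return ranked[:k]


def rerank(candidates, scorer):
    """Stage 2: reorder the shortlist using a costlier relevance scorer."""
    return sorted(candidates, key=scorer, reverse=True)


# Toy embeddings (assumed values, not real model output).
image_vecs = {
    "img_a": [0.9, 0.1, 0.0],
    "img_b": [0.8, 0.2, 0.1],
    "img_c": [0.0, 1.0, 0.0],
    "img_d": [0.1, 0.0, 0.9],
}
query_vec = [1.0, 0.0, 0.0]

# Hypothetical second-stage scores, e.g. from a larger model that
# inspects each shortlisted image against the full text query.
relevance = {"img_a": 0.2, "img_b": 0.9, "img_c": 0.5, "img_d": 0.1}

shortlist = retrieve(query_vec, image_vecs, k=2)
final = rerank(shortlist, lambda image_id: relevance[image_id])
print(shortlist, final)
```

The design point is cost: the cheap similarity search trims millions of images to a short list, and only that list is passed to the more expensive scorer.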
