Medium


Image Credit: Medium

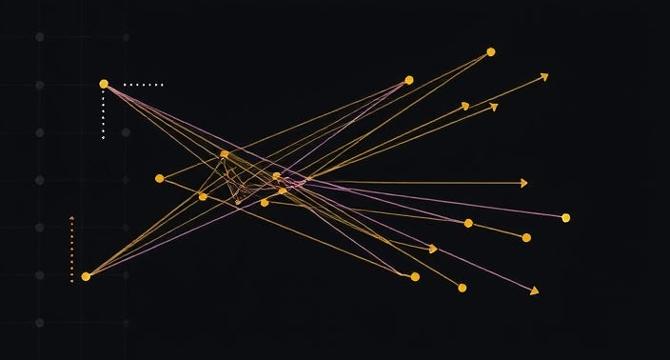

Embeddings Unveiled: The Hidden Language of Data Science (with Python Examples)

- Embeddings address the limits of traditional data representations such as one-hot encoding, which are sparse, high-dimensional, and carry no notion of similarity between items.

- Common word-embedding models include Word2Vec, GloVe, and FastText.

- Latent Semantic Analysis (LSA) is a widely used approach that applies Singular Value Decomposition (SVD) to a document-term matrix for dimensionality reduction.

- Probabilistic Topic Modeling (PTM) is a popular technique that uses generative models to determine the latent topics in a corpus of documents.

- ELMo uses a bidirectional language model (BiLM) to create dynamic and contextualized embeddings for words.

- BERT is a pretrained language model designed to understand the context of a word in relation to the words surrounding it.

- GPT is primarily designed for text generation tasks and uses the decoder part of the Transformer architecture.

- T5 is a model that treats every NLP task as a text-to-text problem.

- GPT-3 is an autoregressive model and one of the largest and most influential foundation models in NLP.

- PaLM is a Transformer language model trained with Google's Pathways system, which is intended to let a single model learn from a more diverse set of tasks.

- LaMDA is designed specifically for dialogue and is trained to improve the quality of open-ended conversation.
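The LSA point above can be illustrated with a minimal sketch. It is not taken from the article: the toy corpus, the choice of raw word counts (rather than TF-IDF), the value `k = 2`, and the helper name `cosine` are all assumptions made for illustration. It builds a document-term matrix, applies a truncated SVD with NumPy, and compares documents in the reduced space.

```python
import numpy as np

# Toy corpus (hypothetical): two documents about animals, two about finance.
docs = [
    "cats chase mice",
    "dogs chase cats",
    "stocks rise on earnings",
    "earnings lift stocks",
]

# Build a vocabulary and a document-term count matrix.
vocab = sorted({word for doc in docs for word in doc.split()})
index = {word: i for i, word in enumerate(vocab)}
X = np.zeros((len(docs), len(vocab)))
for row, doc in enumerate(docs):
    for word in doc.split():
        X[row, index[word]] += 1

# Truncated SVD: keep only the top-k singular directions.
k = 2  # assumed number of latent dimensions
U, S, Vt = np.linalg.svd(X, full_matrices=False)
doc_embeddings = U[:, :k] * S[:k]  # one k-dimensional vector per document


def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


print("animal vs animal :", cosine(doc_embeddings[0], doc_embeddings[1]))
print("animal vs finance:", cosine(doc_embeddings[0], doc_embeddings[2]))
```

In this sketch the two animal documents end up much closer to each other than to the finance documents, even though each document is now described by two numbers instead of a nine-word count vector. On a real corpus, a sparse matrix and a dedicated truncated-SVD routine would usually be a better fit than a full dense SVD.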
