Bioengineer

Image Credit: Bioengineer

Exploring Feature Group Insights in Tree-Based Models: A New Perspective

- Tree-based models have become popular in machine learning for their flexibility and accuracy, with applications in fields such as finance and healthcare.

- Understanding how these models reach their decisions remains difficult, which makes interpretability essential, especially in high-stakes domains.

- Traditional interpretation methods score the importance of individual features and therefore often oversimplify the complex interdependencies among features.

- A research team led by Wei Gao introduces a method that focuses on the importance of feature groups to improve model interpretability.

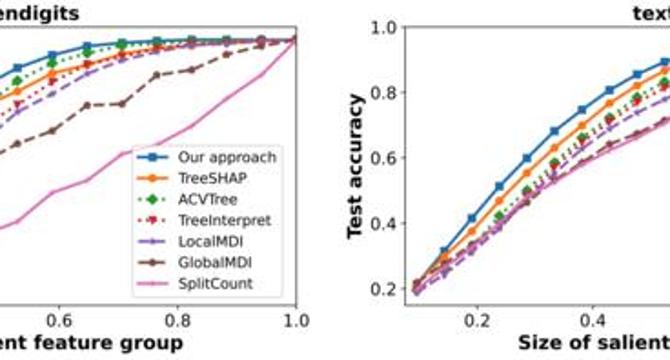

- Their key contribution, the BGShapvalue metric, measures the collective impact of a group of features, improving the interpretability of tree models.

- The BGShapTree algorithm computes BGShapvalues efficiently, making it possible to identify the feature groups that most influence a model's predictions.

- Experiments on various datasets confirm that BGShapvalue and BGShapTree are practical, and the results offer insights for future work on model interpretability.

- The research team plans to extend the method to more complex tree-based models such as XGBoost, addressing the need for scalable interpretability solutions in AI.

- Developing efficient strategies for evaluating feature groups is intended to encourage broader adoption of interpretable machine learning models.

- Beyond its technical contributions, the work by Wei Gao's team supports the ethical principles of fairness and transparency in AI applications.
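The summary does not define BGShapvalue or describe how BGShapTree works, so the following is only a minimal sketch of the general idea of scoring feature groups rather than single features. It assumes a standard group-level Shapley value in which each feature group acts as one "player", and an interventional value function (features in the coalition take the explained instance's values, all others are drawn from a background dataset). The names `toy_tree`, `coalition_value`, and `group_shapley`, the toy tree, and the example data are all hypothetical and are not the authors' method. This brute-force version enumerates every coalition of groups, so its cost grows exponentially with the number of groups; that cost is the kind of problem a tree-specific algorithm such as BGShapTree is described as addressing.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, List, Sequence, Tuple

Model = Callable[[Sequence[float]], float]


def toy_tree(x: Sequence[float]) -> float:
    """Hypothetical hand-written decision tree over 4 features (feature 3 is unused)."""
    if x[0] > 0.5:
        return 3.0 if x[1] > 0.5 else 1.0
    return 2.0 if x[2] > 0.5 else 0.0


def coalition_value(model: Model, x: Sequence[float], background: List[List[float]],
                    groups: Dict[str, List[int]], coalition: Tuple[str, ...]) -> float:
    """Interventional value of a coalition of groups (an assumption, not BGShapvalue):
    features in the coalition keep x's values, the rest come from each background row,
    and the model's predictions are averaged over the background rows."""
    fixed = {f for g in coalition for f in groups[g]}
    total = 0.0
    for b in background:
        z = [x[i] if i in fixed else b[i] for i in range(len(x))]
        total += model(z)
    return total / len(background)


def group_shapley(model: Model, x: Sequence[float], background: List[List[float]],
                  groups: Dict[str, List[int]]) -> Dict[str, float]:
    """Exact Shapley value per feature group, treating each group as one player.
    Enumerates all coalitions, so it is exponential in the number of groups."""
    names = list(groups)
    n = len(names)
    phi: Dict[str, float] = {}
    for g in names:
        others = [h for h in names if h != g]
        value = 0.0
        for k in range(n):  # k = size of the coalition that excludes g
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                with_g = coalition_value(model, x, background, groups, subset + (g,))
                without_g = coalition_value(model, x, background, groups, subset)
                value += weight * (with_g - without_g)
        phi[g] = value
    return phi


# Usage: three hypothetical groups; group "A" bundles features 0 and 1.
groups = {"A": [0, 1], "B": [2], "C": [3]}
x = [1.0, 1.0, 0.0, 0.7]
background = [[0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [1.0, 0.0, 1.0, 1.0]]
phi = group_shapley(toy_tree, x, background, groups)
print({k: round(v, 4) for k, v in phi.items()})  # {'A': 2.3333, 'B': -0.3333, 'C': 0.0}
```

The group scores add up to the model's prediction for `x` minus its average prediction over the background rows (3.0 - 1.0 = 2.0 here), and group "C" scores exactly zero because the toy tree never reads feature 3. Scoring features 0 and 1 jointly as group "A" captures their interaction in the first split, which per-feature scores would have to divide between them.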
