Medium

Image Credit: Medium

Generalization: Understanding CS229 (Generalization and Regularization)

- The test error, i.e., the expected error on new examples drawn from the same distribution as the training data, is the ultimate measure of how well a model generalizes.

- Generalization bounds relate the training error to the test error, typically in terms of the number of training examples and the complexity of the hypothesis class.
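For concreteness, here is the classic bound for a finite hypothesis class, stated as a sketch. It assumes i.i.d. training examples, 0-1 loss, a class $\mathcal{H}$ of $k$ hypotheses, $n$ training examples, and $\hat{h}$ chosen by empirical risk minimization. The notation is an assumption for illustration, not taken from the article. The first line defines training and test error, the second is the uniform-convergence statement (from Hoeffding's inequality plus a union bound, solving $2k\exp(-2\gamma^2 n) = \delta$ for $\gamma$), and the third bounds the test error of $\hat{h}$.

```latex
\hat{\varepsilon}(h) = \frac{1}{n}\sum_{i=1}^{n} 1\{h(x^{(i)}) \neq y^{(i)}\},
\qquad
\varepsilon(h) = P_{(x,y)\sim\mathcal{D}}\big(h(x) \neq y\big)

\text{With probability at least } 1-\delta,\ \forall h \in \mathcal{H}:\quad
\big|\varepsilon(h) - \hat{\varepsilon}(h)\big| \le \sqrt{\frac{1}{2n}\log\frac{2k}{\delta}}

\varepsilon(\hat{h}) \le \Big(\min_{h\in\mathcal{H}} \varepsilon(h)\Big) + 2\sqrt{\frac{1}{2n}\log\frac{2k}{\delta}}
```

The gap shrinks as $n$ grows and widens (logarithmically) as the class gets larger, which is the trade-off such bounds make precise.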

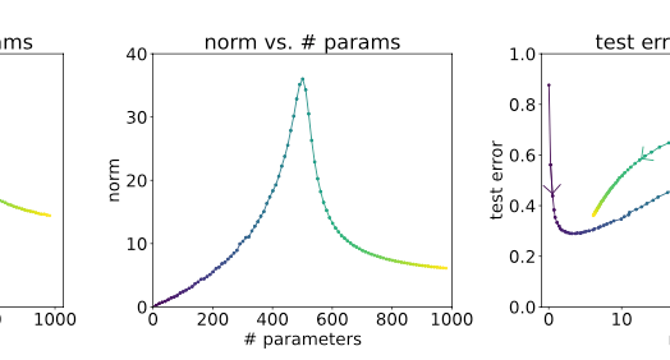

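- The "double descent" phenomenon, in which test error first rises as the number of parameters roughly approaches the number of training examples and then falls again as the model grows larger, suggests that further scaling in model size may improve generalization.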

- Regularization techniques, such as adding a penalty term (e.g., the squared L2 norm of the parameters) to the training loss, can help mitigate overfitting and improve generalization.
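As a minimal sketch of an L2 penalty term, the snippet below fits ridge regression in closed form on synthetic data and reports training and held-out test error for a few penalty strengths. The data generator, the sizes, and the `lam` values are assumptions chosen for illustration. Whether the test error actually improves for a given `lam` depends on the data. What is guaranteed is that the penalty raises (or keeps equal) the training error and shrinks the parameter norm.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form ridge regression: argmin_theta ||X theta - y||^2 + lam * ||theta||^2."""
    d = X.shape[1]
    # Solve (X^T X + lam I) theta = X^T y instead of forming an explicit inverse.
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)

def mse(X, y, theta):
    """Mean squared error of the linear predictor X @ theta."""
    residual = X @ theta - y
    return float(np.mean(residual ** 2))

rng = np.random.default_rng(0)
n_train, n_test, d = 30, 1000, 20  # hypothetical sizes: few examples per feature
theta_true = rng.normal(size=d)

def sample(n):
    """Draw n synthetic examples from a noisy linear model (assumed data source)."""
    X = rng.normal(size=(n, d))
    y = X @ theta_true + rng.normal(scale=2.0, size=n)
    return X, y

X_train, y_train = sample(n_train)
X_test, y_test = sample(n_test)  # held-out data stands in for the test distribution

for lam in [0.0, 1.0, 10.0]:
    theta = ridge_fit(X_train, y_train, lam)
    print(f"lambda={lam:5.1f}  "
          f"train MSE={mse(X_train, y_train, theta):.3f}  "
          f"test MSE={mse(X_test, y_test, theta):.3f}  "
          f"||theta||={np.linalg.norm(theta):.3f}")
```

Setting `lam=0.0` recovers ordinary least squares, so comparing rows shows the penalty trading a little training error for smaller parameters.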
