Dbi-Services

Image Credit: Dbi-Services

Getting started with Open WebUI

- Open WebUI is an open-source, self-hosted AI platform designed to operate offline, letting users work with large language models through runners such as Ollama.

- The setup starts on a Debian 12 system by installing Ollama with its one-line install command.

- The installer creates a dedicated 'ollama' user, and the Ollama API then listens on 127.0.0.1:11434.

- Models can then be queried from the command line, for example with 'ollama run llama3.2', which downloads the model on first use and opens a prompt for questions.

- To enable a graphical user interface, users can install Open WebUI using a Python virtual environment.

- The installation process involves setting up a new user 'openwebui' and creating a Python virtual environment for Open WebUI.
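
Besides the interactive 'ollama run' prompt, the same local API can be called from scripts. The following is a minimal sketch that uses only the Python standard library. It assumes Ollama is running on its default address 127.0.0.1:11434 and that llama3.2 has already been pulled. The helper names (build_generate_request, ask, list_models) are hypothetical. The /api/generate and /api/tags paths are Ollama's REST endpoints.

```python
import json
import urllib.request

# Default address of the local Ollama API (assumed unchanged from the install).
OLLAMA_URL = "http://127.0.0.1:11434"


def build_generate_request(model: str, prompt: str,
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str, base_url: str = OLLAMA_URL,
        timeout: float = 300.0) -> str:
    """Send one prompt to the model and return the generated text."""
    request = build_generate_request(model, prompt, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # With "stream": False the server returns a single JSON object whose
    # "response" field holds the complete answer.
    return body["response"]


def list_models(base_url: str = OLLAMA_URL, timeout: float = 10.0) -> list[str]:
    """Return the names of the models already pulled into the local Ollama."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
        body = json.load(response)
    return [model["name"] for model in body.get("models", [])]
```

With Ollama running, `print(list_models())` shows which models are available, and `print(ask("llama3.2", "Why is the sky blue?"))` returns roughly the same answer the interactive prompt would give. Setting "stream" to False keeps the sketch simple, at the cost of waiting for the full answer before anything is returned.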

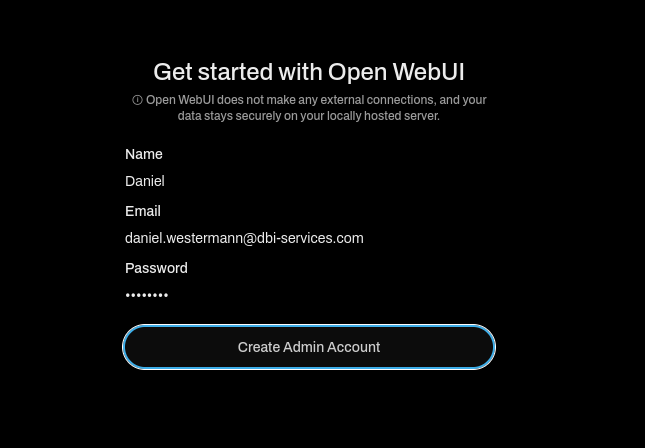

- Once Open WebUI is installed and started, the web interface is reachable at http://hostname:8080, where the first step is to create an admin user.

- Other models can be added and queried in the same way, for example with 'ollama run deepseek-r1', to ask questions and receive responses.

- The article walks through setting up Open WebUI and using it to interact with AI models.

- Overall, Open WebUI offers a user-friendly interface for accessing and working with AI models in an offline environment.
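
A chat interface like Open WebUI keeps the conversation history, so follow-up questions have context. The following is a rough sketch of the same idea against Ollama's /api/chat endpoint. It assumes deepseek-r1 has been pulled and Ollama listens on its default address. The Conversation class and the strip_reasoning helper are hypothetical. The handling of `<think>...</think>` is also an assumption: whether deepseek-r1's reasoning appears inline in the reply depends on the Ollama version.

```python
import json
import re
import urllib.request

# Default address of the local Ollama API (assumed unchanged from the install).
OLLAMA_URL = "http://127.0.0.1:11434"

# Assumption: deepseek-r1 may wrap its reasoning in <think>...</think>.
THINK_BLOCK = re.compile(r"<think>.*?</think>\s*", re.DOTALL)


def strip_reasoning(text: str) -> str:
    """Drop any <think>...</think> block so only the final answer remains."""
    return THINK_BLOCK.sub("", text).strip()


class Conversation:
    """Keep the message history so follow-up questions carry context."""

    def __init__(self, model: str, base_url: str = OLLAMA_URL) -> None:
        self.model = model
        self.base_url = base_url
        self.messages: list[dict[str, str]] = []

    def build_request(self, question: str) -> urllib.request.Request:
        """Build a non-streaming /api/chat request: history plus the new question."""
        messages = self.messages + [{"role": "user", "content": question}]
        payload = {"model": self.model, "messages": messages, "stream": False}
        return urllib.request.Request(
            f"{self.base_url}/api/chat",
            data=json.dumps(payload).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )

    def ask(self, question: str, timeout: float = 600.0) -> str:
        """Send the question, record both turns, and return the cleaned answer."""
        request = self.build_request(question)
        with urllib.request.urlopen(request, timeout=timeout) as response:
            reply = json.load(response)["message"]
        answer = strip_reasoning(reply["content"])
        # Only the final answer is kept in the history, not the reasoning.
        self.messages.append({"role": "user", "content": question})
        self.messages.append({"role": "assistant", "content": answer})
        return answer
```

A possible session is `chat = Conversation("deepseek-r1")`, then `chat.ask("What is the capital of France?")` followed by `chat.ask("And its population?")`. The second call sends both earlier turns along with the new question. The history is only updated after a successful reply, so a failed request does not leave a dangling user turn.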
