Hackernoon

Image Credit: Hackernoon

Here’s the Neural Network That Can Predict Your Next Online Friend

- This article focuses on training a machine learning model for link prediction using Graph Neural Networks (GNNs) on the Twitch dataset.

- The author chooses the Relational Graph Convolutional Network (R-GCN) model because it handles datasets with multiple node and edge types, as well as node properties that may vary.

- Hyperparameters such as learning rate, number of hidden units, number of epochs, batch size, and negative sampling directly affect the model's performance.
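
To make the negative sampling hyperparameter concrete, here is a minimal sketch of the general idea in plain Python: for every real edge, the trainer also sees a few "fake" edges that do not exist in the graph, and the model learns to score real edges higher. The function name, the toy edge list, and the uniform sampling strategy are illustrative assumptions, not the implementation used by the article or by Neptune ML.

```python
import random

def sample_negative_edges(edges, num_nodes, num_negatives, seed=0):
    """For each real edge (u, v), draw `num_negatives` edges (u, w) absent from the graph.

    Hypothetical helper for illustration; assumes an undirected graph with
    nodes numbered 0..num_nodes-1 and uniform sampling of the corrupted endpoint.
    """
    rng = random.Random(seed)
    # Store both directions so (u, w) and (w, u) count as the same friendship.
    existing = set(edges) | {(v, u) for u, v in edges}
    negatives = []
    for u, _ in edges:
        # Every node that is not u itself and not already linked to u.
        candidates = [w for w in range(num_nodes) if w != u and (u, w) not in existing]
        if not candidates:
            continue  # u is linked to every other node, nothing to sample
        for _ in range(num_negatives):
            negatives.append((u, rng.choice(candidates)))
    return negatives

# Toy graph: three friendships among six users.
edges = [(0, 1), (1, 2), (2, 3)]
negatives = sample_negative_edges(edges, num_nodes=6, num_negatives=2)
```

A larger `num_negatives` gives the model more contrast per real edge at the cost of more computation per batch, which is one reason this value is tuned alongside batch size.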

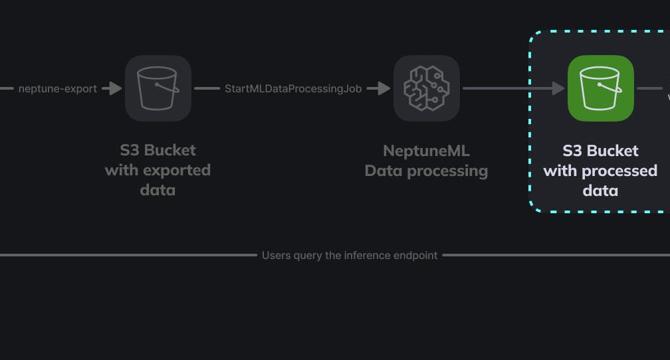

- Before training, IAM roles must be created for Neptune and SageMaker; the training job itself is then started through the Neptune ML API.

- Model training involves tuning parameters like learning rate, hidden units, epochs, batch size, negative sampling, dropout, and regularization coefficient.

- The status of the model training job can be checked using the Neptune cluster's HTTP API, and results are reviewed in the AWS console, specifically in SageMaker Training Jobs.

- The article compares the hyperparameters used in different training jobs to show how variations in parameters affect model accuracy.

- Model artifacts, training stats, and metrics are stored in the output S3 bucket; they are needed to create an inference endpoint and make actual link predictions.

- The completion of model training sets the stage for generating link predictions based on the trained model's artifacts.
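
As a rough illustration of the start-and-check steps summarized above, the sketch below builds the two HTTP requests without sending them. The `/ml/modeltraining` path and the JSON field names follow my reading of the Neptune ML API and should be checked against the AWS documentation; the endpoint host, job IDs, bucket path, and role ARNs are hypothetical placeholders.

```python
import json
import urllib.request

# Hypothetical placeholder: replace with your Neptune cluster endpoint.
NEPTUNE_ENDPOINT = "https://your-neptune-endpoint:8182"

def build_training_request(training_id, processing_job_id, s3_output,
                           sagemaker_role_arn, neptune_role_arn):
    """Build (but do not send) a POST request that would start model training."""
    payload = {
        "id": training_id,
        "dataProcessingJobId": processing_job_id,  # ID of the earlier data processing job
        "trainModelS3Location": s3_output,         # where artifacts and metrics are written
        "sagemakerIamRoleArn": sagemaker_role_arn,
        "neptuneIamRoleArn": neptune_role_arn,
    }
    return urllib.request.Request(
        url=f"{NEPTUNE_ENDPOINT}/ml/modeltraining",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def build_status_request(training_id):
    """Build (but do not send) a GET request that would return the job status."""
    return urllib.request.Request(
        url=f"{NEPTUNE_ENDPOINT}/ml/modeltraining/{training_id}",
        method="GET",
    )

# All values below are made-up examples.
request = build_training_request(
    training_id="example-training-job",
    processing_job_id="example-processing-job",
    s3_output="s3://example-bucket/model-output/",
    sagemaker_role_arn="arn:aws:iam::123456789012:role/ExampleSageMakerRole",
    neptune_role_arn="arn:aws:iam::123456789012:role/ExampleNeptuneRole",
)
status_request = build_status_request("example-training-job")
```

Sending is deliberately left out: `urllib.request.urlopen(request)` needs network access to the cluster, and clusters with IAM authentication enabled also require signed requests.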
