Medium

Image Credit: Medium

Hinge Loss: Understanding and Implementing it from Scratch

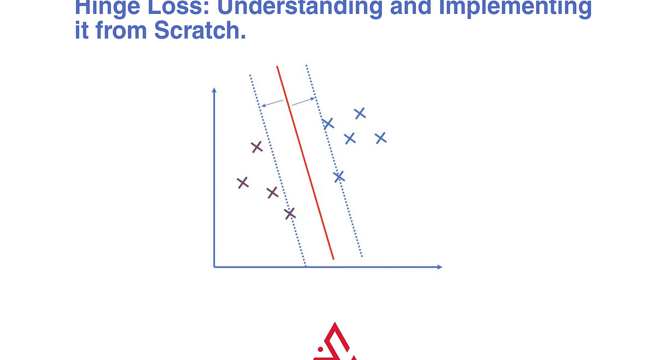

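- Support Vector Machines (SVMs) are trained with hinge loss, which penalizes any point that is misclassified or falls inside the margin. This pushes the model to separate the classes with room to spare, not just barely.

- An SVM looks for the separating line (a hyperplane in higher dimensions) with the widest margin between the classes. A soft-margin SVM tolerates some margin violations and misclassifications in exchange for a wider, more robust margin.

- For a label y in {-1, +1} and a model score f(x), hinge loss is max(0, 1 - y·f(x)). It is zero only when a point is on the correct side of the boundary with a margin of at least 1, and it grows linearly as the point moves toward or past the boundary. In this sense it measures how confidently each decision is made.

- Logistic regression uses log loss, which is smooth, never exactly zero, and yields class probabilities. SVMs use hinge loss, which ignores points beyond the margin and produces a maximum-margin decision boundary rather than probabilities.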
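
The bullets above describe hinge loss and the soft-margin objective in words. Below is a minimal from-scratch sketch in plain Python, assuming labels encoded as -1/+1 and a linear score f(x) = w·x + b. The function names, the hyperparameters (`lam`, `lr`, `epochs`), and the use of full-batch subgradient descent are illustrative assumptions, not the article's own code. A log-loss function on the same margin is included for comparison.

```python
import math
from typing import List, Sequence, Tuple


def hinge_loss(y: int, score: float) -> float:
    """Hinge loss for one example: max(0, 1 - y * score), with y in {-1, +1}."""
    return max(0.0, 1.0 - y * score)


def log_loss(y: int, score: float) -> float:
    """Logistic (log) loss on the same margin y * score, for comparison.
    Unlike hinge loss, it is positive for every finite score."""
    return math.log1p(math.exp(-y * score))


def decision(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Linear score f(x) = w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def train_linear_svm(
    X: List[List[float]],
    y: List[int],
    lam: float = 0.01,   # assumed regularization strength (wider margin vs. fewer violations)
    lr: float = 0.1,     # assumed step size
    epochs: int = 200,   # assumed number of full passes
) -> Tuple[List[float], float]:
    """Soft-margin linear SVM (hypothetical helper) trained by full-batch
    subgradient descent on: lam/2 * ||w||^2 + mean(hinge_loss)."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    b = 0.0
    for _ in range(epochs):
        # Gradient of the L2 regularizer (the bias is not regularized).
        grad_w = [lam * wj for wj in w]
        grad_b = 0.0
        for xi, yi in zip(X, y):
            if yi * decision(w, b, xi) < 1.0:
                # Point is inside the margin or misclassified:
                # the hinge subgradient is -y * x for w and -y for b.
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
            # Points with margin >= 1 contribute zero hinge gradient.
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b
```

For example, `hinge_loss(1, 2.0)` is `0.0` because the point clears the margin, while `log_loss(1, 2.0)` is still slightly positive; `hinge_loss(1, 0.5)` is `0.5` (correct side, but inside the margin) and `hinge_loss(-1, 0.5)` is `1.5` (misclassified). The flat zero region beyond the margin is what lets only the points near the boundary shape the final SVM.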
