Medium

Image Credit: Medium

How Gradient Descent Actually Works — Explained Simply (with Python & Visuals)

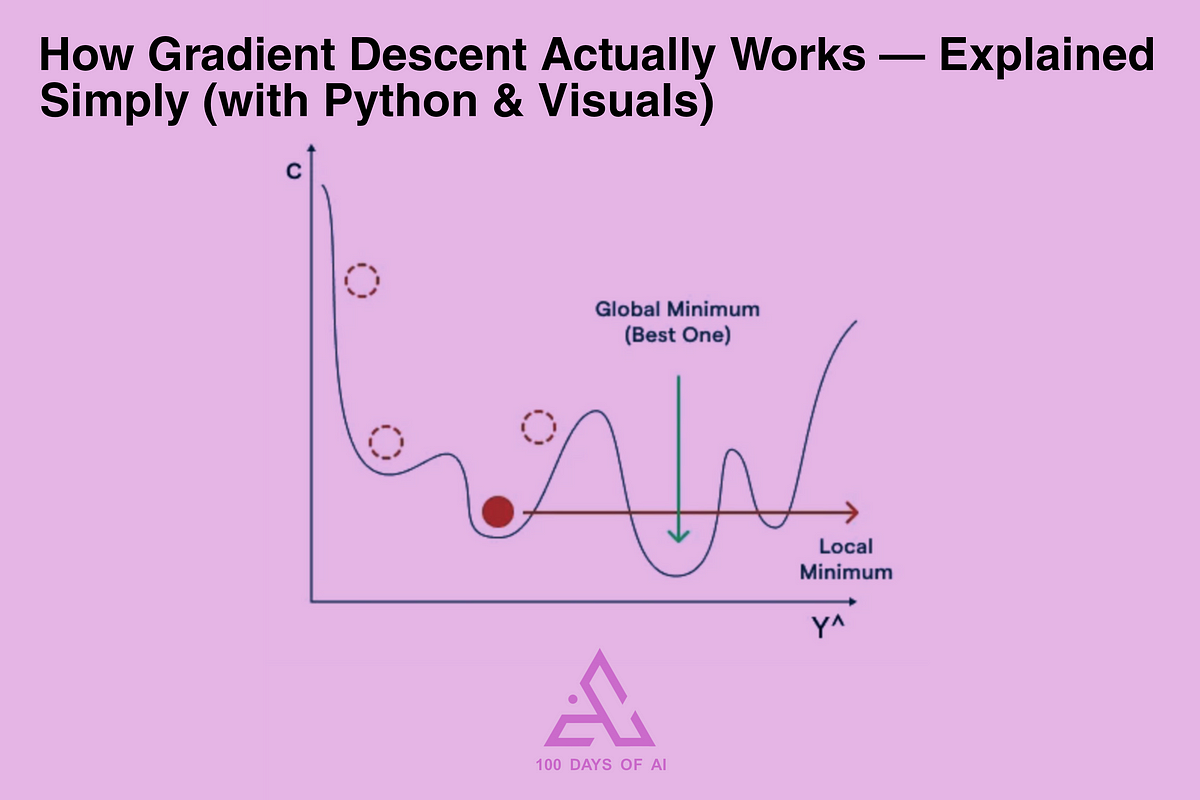

- The article introduces gradient descent with a hill analogy: to reach the lowest point of a landscape, you take small, cautious steps downhill, guided by the slope beneath your feet.

- In machine learning, gradient descent minimizes a loss function by repeatedly adjusting the model's parameters in the direction that reduces the error.

- The article implements gradient descent from scratch in Python, without libraries such as scikit-learn, using NumPy for the computation and matplotlib for visualization.

- The full implementation, including raw-data visualization, data normalization, the loss curve, the best-fit line, and animations, is available on GitHub. The article also stresses the importance of gradient descent in machine learning and deep learning.
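
To make the points above concrete, here is a minimal sketch of gradient descent fitting a straight line with NumPy. It is not the article's code: the function name `gradient_descent`, the synthetic data, the learning rate, and the epoch count are all assumptions chosen for illustration. Each step computes the slope of the mean squared error with respect to the two parameters and moves them a small distance downhill.

```python
import numpy as np

def gradient_descent(x, y, lr=0.1, epochs=500):
    """Fit y ~ w * x + b by minimizing mean squared error (illustrative sketch)."""
    w, b = 0.0, 0.0          # start from an arbitrary point on the "hill"
    n = len(x)
    losses = []
    for _ in range(epochs):
        error = (w * x + b) - y              # prediction minus target
        losses.append(np.mean(error ** 2))   # current loss (MSE)
        grad_w = (2.0 / n) * np.dot(error, x)  # d(MSE)/dw
        grad_b = (2.0 / n) * np.sum(error)     # d(MSE)/db
        w -= lr * grad_w                     # step downhill
        b -= lr * grad_b
    return w, b, losses

# Synthetic data, made up for this example: y = 3x + 2 plus noise.
rng = np.random.default_rng(0)
x_raw = rng.uniform(0, 10, size=100)
y = 3.0 * x_raw + 2.0 + rng.normal(0, 0.5, size=100)

# Normalize the input so a single learning rate works well.
x = (x_raw - x_raw.mean()) / x_raw.std()

w, b, losses = gradient_descent(x, y)

# Convert the fitted parameters back to the original (raw) scale.
slope = w / x_raw.std()
intercept = b - w * x_raw.mean() / x_raw.std()
print(f"slope={slope:.2f}, intercept={intercept:.2f}, final loss={losses[-1]:.3f}")
```

The `losses` list can be passed to matplotlib (for example `plt.plot(losses)`) to draw a loss curve like the one the summary mentions. Normalizing `x` is a design choice here: without it, the same learning rate could overshoot and diverge on inputs with a large range.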
