Medium

Image Credit: Medium

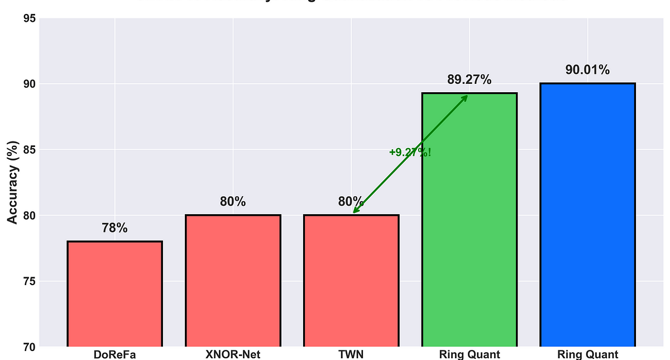

How I Achieved 89% Accuracy with 2-bit Neural Networks: The Ring Quantization Story

- Current neural networks are too large and resource-intensive for many users, which is driving efforts to compress them and make them more accessible.

- A new method called Ring Quantization achieves 89% accuracy with 2-bit networks, a significant improvement over previous methods.

- Storing weights in 2 bits instead of 32 gives 16x compression, and Ring Quantization is reported to do this with less than a 3% drop from full-precision accuracy, a promising result for democratizing AI.

- The researcher behind Ring Quantization highlights the potential of the method and aims to further test it on larger models for broader impact.
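
The 16x figure follows from storage arithmetic: a 32-bit float weight takes 4 bytes, while four 2-bit codes fit in a single byte. The sketch below illustrates only that arithmetic with generic bit packing. It is not the Ring Quantization method itself, which the summary does not describe, and the helper names `pack_2bit` and `unpack_2bit` are hypothetical.

```python
import struct


def pack_2bit(codes):
    """Pack integer codes in {0, 1, 2, 3} into bytes, four codes per byte."""
    if len(codes) % 4 != 0:
        raise ValueError("number of codes must be a multiple of 4")
    out = bytearray()
    for i in range(0, len(codes), 4):
        byte = 0
        for j, code in enumerate(codes[i:i + 4]):
            if not 0 <= code <= 3:
                raise ValueError("each code must fit in 2 bits")
            # Place code j in bits 2*j and 2*j + 1 of the byte.
            byte |= code << (2 * j)
        out.append(byte)
    return bytes(out)


def unpack_2bit(packed):
    """Recover the list of 2-bit codes from packed bytes."""
    return [(byte >> shift) & 3 for byte in packed for shift in (0, 2, 4, 6)]


codes = [0, 1, 2, 3, 3, 2, 1, 0]            # eight illustrative 2-bit weight codes
packed = pack_2bit(codes)

float32_bytes = len(codes) * struct.calcsize("<f")   # 4 bytes per float32 weight
ratio = float32_bytes / len(packed)

print(f"float32: {float32_bytes} bytes, 2-bit: {len(packed)} bytes, ratio: {ratio:.0f}x")
```

This counts weight storage only. Any per-layer scales or lookup tables a real quantization scheme keeps would reduce the effective ratio slightly.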
