Hackernoon

Image Credit: Hackernoon

How Mamba and Hyena Are Changing the Way AI Learns and Remembers

- Mamba and Hyena are two novel models that are changing how artificial intelligence (AI) learns and remembers.

- Both rely on selective state space models (SSMs), a mechanism that compresses sequence context into a compact state in a selective manner.

- An efficient implementation of selective SSMs keeps these models fast.

- Both synthetic and real datasets have been used to test these models on a variety of tasks.

- These models perform better on many tasks where traditional AI methods fall short.

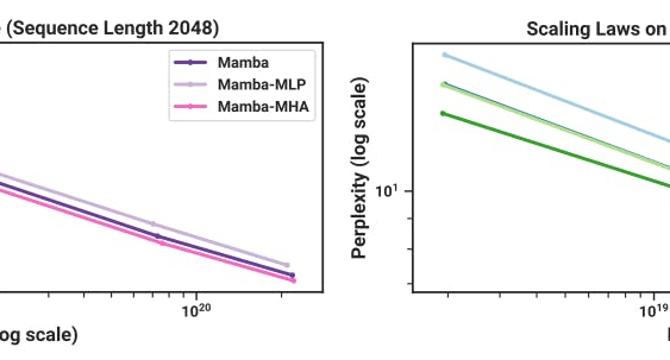

- Mamba and Hyena perform significantly better than existing AI models on audio modeling and generation, language modeling, and DNA modeling tasks.

- Mamba's memory requirements are comparable to those of Transformer models, and its memory footprint is expected to improve further in the future.

- Efficiency benchmarks show that Mamba's core operation, the selective SSM, is faster and more efficient than convolution and attention.

- The paper is available on arXiv under a CC BY 4.0 license.
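
To make the selective SSM idea in the summary more concrete, here is a minimal sketch of a scalar selective recurrence. It is an illustration under stated assumptions, not the paper's implementation: the function name `selective_scan` and the parameters `a`, `w_delta`, `w_b`, and `w_c` are hypothetical, the state is a single number rather than a vector, and the real models use learned projections and a fused kernel. The point it shows is that the step size and the input and output projections depend on the current input, while the state stays a fixed size regardless of sequence length.

```python
import math


def softplus(z):
    # Smooth positive function, used here to keep the step size above zero.
    return math.log1p(math.exp(z))


def selective_scan(xs, a=-1.0, w_delta=1.0, w_b=1.0, w_c=1.0):
    """Scalar selective SSM sketch (hypothetical parameters, not a library API).

    Returns the list of outputs and the final state.
    """
    h = 0.0  # fixed-size state: one float, whatever the sequence length
    ys = []
    for x in xs:
        delta = softplus(w_delta * x)  # input-dependent step size
        b = w_b * x                    # input-dependent input projection
        c = w_c * x                    # input-dependent output projection
        a_bar = math.exp(delta * a)    # discretized decay, in (0, 1) when a < 0
        b_bar = delta * b              # simplified (Euler-style) discretization
        h = a_bar * h + b_bar * x      # state update
        ys.append(c * h)               # readout
    return ys, h


outputs, final_state = selective_scan([1.0, 0.5, 0.0, 2.0])
```

Each step costs a constant amount of work and memory, which is the intuition behind comparing this kind of recurrence with attention, whose cost grows with the length of the context it attends over.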
