Hackernoon

Image Credit: Hackernoon

How Mamba’s Design Makes AI Up to 40x Faster

- Mamba's hardware-aware SSM scan runs up to 20-40x faster than a standard scan implementation in PyTorch.

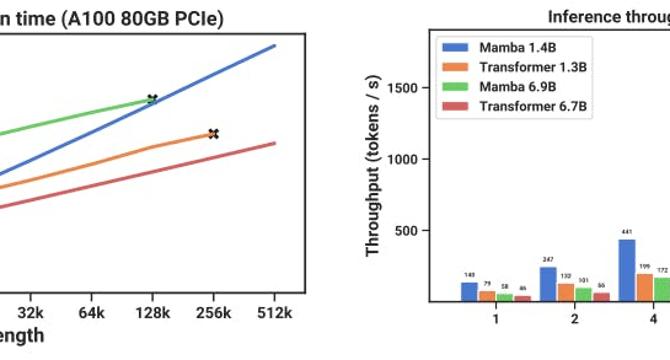

- Mamba's selective state space models (SSMs) deliver gains on both speed and memory benchmarks.

- Mamba's efficient SSM scan is faster than the best known attention implementation, FlashAttention-2, at sequence lengths beyond 2K.

- Mamba achieves 4-5x higher inference throughput than a Transformer of similar size, because without a KV cache it can run larger batch sizes.
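The sketch below makes the "SSM scan" idea concrete. It models a single channel with a scalar state and is not Mamba's fused CUDA kernel. The function names are hypothetical, and the discretization is simplified: exp(delta * a) for the decay and delta * b for the input term.

In Mamba, the step sizes `deltas` and the projections `bs` and `cs` are computed from the input. Here they are passed in directly. The point the sketch illustrates is that each step is an affine map h -> p * h + q, and composing affine maps is associative. That associativity lets the recurrence run as a parallel prefix scan in about log2(n) rounds instead of n strictly sequential steps.

```python
import math

# Hypothetical helper names; a simplified single-channel, scalar-state
# selective SSM, NOT Mamba's fused CUDA kernel.

def selective_scan_sequential(xs, deltas, a, bs, cs):
    """Reference recurrence, one step at a time:
    h_t = exp(delta_t * a) * h_{t-1} + delta_t * b_t * x_t,  y_t = c_t * h_t
    """
    h = 0.0  # fixed-size state, independent of sequence length
    ys = []
    for x, d, b, c in zip(xs, deltas, bs, cs):
        a_bar = math.exp(d * a)     # zero-order-hold discretization of a
        h = a_bar * h + d * b * x   # simplified (Euler-style) input term
        ys.append(c * h)
    return ys


def combine(left, right):
    # Each pair (p, q) is the affine map h -> p * h + q.
    # Applying `left` then `right` gives h -> p_r * (p_l * h + q_l) + q_r.
    p_l, q_l = left
    p_r, q_r = right
    return (p_r * p_l, p_r * q_l + q_r)


def inclusive_scan(elems):
    # Hillis-Steele scan: ceil(log2(n)) rounds; every update within a round
    # reads only the previous round, so the updates could run in parallel.
    out = list(elems)
    step = 1
    while step < len(out):
        out = [
            out[i] if i < step else combine(out[i - step], out[i])
            for i in range(len(out))
        ]
        step *= 2
    return out


def selective_scan_parallel(xs, deltas, a, bs, cs):
    # Per-step (decay, input) pairs; input-dependent deltas make this "selective".
    elems = [(math.exp(d * a), d * b * x) for x, d, b in zip(xs, deltas, bs)]
    prefixes = inclusive_scan(elems)
    # Starting from h_0 = 0, the state after step t is the offset q of prefix t.
    return [c * q for (_, q), c in zip(prefixes, cs)]


if __name__ == "__main__":
    n = 16
    xs = [math.sin(i) for i in range(n)]
    deltas = [0.1 + 0.05 * i for i in range(n)]  # illustrative; input-derived in Mamba
    a = -1.0  # negative, so each exp(delta * a) lies in (0, 1)
    bs = [math.cos(i) for i in range(n)]
    cs = [1.0 + 0.1 * i for i in range(n)]
    seq = selective_scan_sequential(xs, deltas, a, bs, cs)
    par = selective_scan_parallel(xs, deltas, a, bs, cs)
    print(all(math.isclose(s, p, rel_tol=1e-9, abs_tol=1e-12)
              for s, p in zip(seq, par)))
```

The sequential loop also shows how recurrent inference proceeds. The state `h` stays the same size however long the sequence grows, unlike a Transformer's KV cache. That fixed size is why larger batch sizes fit in memory.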
