Hackernoon

Image Credit: Hackernoon

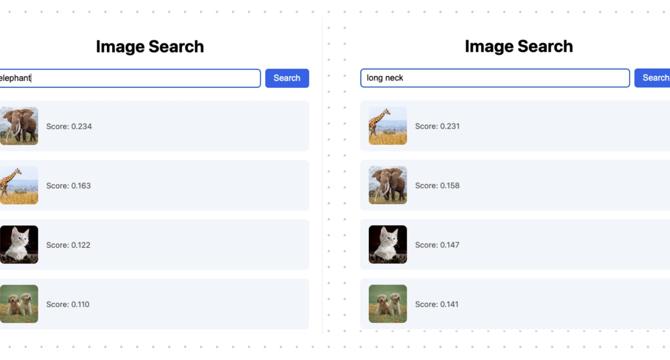

How to Build Live Image Search With Vision Model and Query With Natural Language

- The article discusses building a live image search with a vision model and querying it with natural language.

- It shows how a multimodal embedding model, one that maps images and text into the same vector space, can embed images so that a vector index supports efficient retrieval.

- The project uses CocoIndex for the indexing flow, CLIP ViT-L/14 as the embedding model, Qdrant as the vector database, and FastAPI for the search API.

- The flow reads image files, embeds each image with CLIP, stores the embeddings in a vector database, and then queries that index.

- Custom functions embed both images and text queries, and a FastAPI endpoint exposes semantic image search.

- The application runs on FastAPI with CORS middleware, serves the images as static files, and initializes the Qdrant client.

- The frontend is kept deliberately simple so the focus stays on image search.

- The article walks through setup: creating a collection in Qdrant, setting up the indexing flow, running the backend and frontend, and testing the search.

- It also covers real-time (live) index updates, which keep the index in sync as images change, along with options for monitoring the flow.

- Readers who find the article helpful are encouraged to support the project by starring it on GitHub.
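
To make the embed-store-query steps above concrete, here is a minimal in-memory sketch of what a vector index does at query time. It is a stand-in under stated assumptions, not Qdrant's API or the article's code: `InMemoryImageIndex`, `Hit`, and the toy three-dimensional vectors are hypothetical, and it uses brute-force cosine similarity where a vector database would use an approximate nearest-neighbor index. In the real pipeline the vectors would come from CLIP ViT-L/14, which embeds images and text queries into the same space, so a text query can be compared directly against image embeddings.

```python
import math
from dataclasses import dataclass


@dataclass
class Hit:
    filename: str
    score: float


def normalize(vec: list[float]) -> list[float]:
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


class InMemoryImageIndex:
    """Hypothetical stand-in for a vector database: filename -> unit-length embedding."""

    def __init__(self) -> None:
        self._vectors: dict[str, list[float]] = {}

    def upsert(self, filename: str, embedding: list[float]) -> None:
        # Store normalized vectors so each search only needs a dot product.
        self._vectors[filename] = normalize(embedding)

    def delete(self, filename: str) -> None:
        self._vectors.pop(filename, None)

    def search(self, query_embedding: list[float], limit: int = 5) -> list[Hit]:
        # Brute-force scan over every stored vector; a real vector DB would
        # typically use an ANN index (e.g. HNSW) instead.
        q = normalize(query_embedding)
        hits = [
            Hit(name, sum(a * b for a, b in zip(q, vec)))
            for name, vec in self._vectors.items()
        ]
        hits.sort(key=lambda h: h.score, reverse=True)
        return hits[:limit]


# Toy 3-dimensional vectors; real CLIP ViT-L/14 embeddings have 768 dimensions.
index = InMemoryImageIndex()
index.upsert("cat.jpg", [0.9, 0.1, 0.0])
index.upsert("beach.jpg", [0.0, 0.2, 0.95])
index.upsert("dog.jpg", [0.7, 0.6, 0.1])
results = index.search([1.0, 0.2, 0.0], limit=2)
print([h.filename for h in results])  # ['cat.jpg', 'dog.jpg']
```

Normalizing on insert keeps queries to one dot product per stored image. That is fine for a handful of files, but the cost grows linearly with the collection, which is the reason to hand storage and search to a dedicated vector database.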
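
Creating the Qdrant collection mostly comes down to declaring the vector size and the distance metric. The sketch below prepares that request using only the standard library. It assumes Qdrant's REST API on its default port 6333 and a hypothetical collection name `image_search`. The 768 dimension matches the embedding size of CLIP ViT-L/14. The article may create the collection differently, for example through a Qdrant client library, so treat this as illustrative.

```python
import json
import urllib.request

QDRANT_URL = "http://localhost:6333"  # assumption: local Qdrant, default REST port
COLLECTION = "image_search"  # hypothetical collection name
CLIP_VIT_L14_DIM = 768  # embedding size produced by CLIP ViT-L/14


def collection_body(dim: int = CLIP_VIT_L14_DIM, distance: str = "Cosine") -> dict:
    """JSON body for Qdrant's create-collection call: one dense vector per point."""
    return {"vectors": {"size": dim, "distance": distance}}


def build_create_request(base_url: str, name: str) -> urllib.request.Request:
    """Prepare, without sending, a PUT /collections/{name} request."""
    return urllib.request.Request(
        f"{base_url}/collections/{name}",
        data=json.dumps(collection_body()).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def create_collection(base_url: str = QDRANT_URL, name: str = COLLECTION) -> str:
    """Send the request (needs a running Qdrant); urlopen raises HTTPError on non-2xx replies."""
    with urllib.request.urlopen(build_create_request(base_url, name)) as resp:
        return resp.read().decode("utf-8")
```

With Qdrant running locally, calling `create_collection()` once before the first indexing run is enough. Cosine distance is chosen because CLIP embeddings are usually compared by cosine similarity.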
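
Live updates mean the index follows the image folder as files are added, changed, or removed. The article relies on CocoIndex for this. The sketch below is not CocoIndex's mechanism but a minimal polling illustration of the same idea, assuming content hashes are used to detect changes. `fingerprint_dir`, `diff_snapshots`, and the set of image suffixes are hypothetical. Each diff tells the indexer which files to re-embed and upsert and which entries to delete.

```python
import hashlib
import tempfile
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}  # assumption: which files count as images


def fingerprint_dir(root: Path) -> dict[str, str]:
    """Map each image's path (relative to root) to a SHA-256 hash of its contents."""
    return {
        str(p.relative_to(root)): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(root.rglob("*"))
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    }


def diff_snapshots(old: dict[str, str], new: dict[str, str]) -> tuple[list[str], list[str]]:
    """Return (files to re-embed and upsert, files to delete from the index)."""
    to_upsert = sorted(path for path, digest in new.items() if old.get(path) != digest)
    to_delete = sorted(path for path in old if path not in new)
    return to_upsert, to_delete


# Demo: simulate three polling rounds against a temporary image folder.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "cat.jpg").write_bytes(b"v1")
    snap1 = fingerprint_dir(root)
    round1 = diff_snapshots({}, snap1)  # first run: everything is new

    (root / "cat.jpg").write_bytes(b"v2")  # modified image
    (root / "dog.png").write_bytes(b"v1")  # new image
    (root / "notes.txt").write_bytes(b"skip")  # ignored: not an image suffix
    snap2 = fingerprint_dir(root)
    round2 = diff_snapshots(snap1, snap2)

    (root / "cat.jpg").unlink()  # deleted image
    snap3 = fingerprint_dir(root)
    round3 = diff_snapshots(snap2, snap3)

print(round1, round2, round3)
# (['cat.jpg'], []) (['cat.jpg', 'dog.png'], []) ([], ['cat.jpg'])
```

Hashing contents rather than comparing modification times avoids re-embedding files that were touched but not changed, at the cost of reading every image on each poll. A production setup would more likely react to filesystem or storage change events.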
