Dev

Image Credit: Dev

How to Deploy Your Rig App on AWS Lambda: A Step-by-Step Guide

- Rig is a full-stack agent framework written in Rust. It offers a unified, intuitive API for building scalable AI workflows across multiple LLM providers.

- To deploy a Rig app to AWS Lambda, developers use the `lambda_runtime` crate (the Rust runtime client for Lambda) together with the Lambda events crate (`aws_lambda_events`).

- The post gives a step-by-step guide to deploying a simple, event-driven Rig app on AWS Lambda. The app calls OpenAI's GPT-4 Turbo and does not use a vector store.
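An event-driven Lambda app like this one boils down to a handler that receives an event, calls the model, and returns a response. The following is a minimal, std-only sketch under stated assumptions. `CompletionProvider`, `PromptEvent`, `PromptResponse`, `handle_event`, and `EchoProvider` are hypothetical names, not Rig's or `lambda_runtime`'s API, and the event is assumed to carry a single text prompt. In the deployed app, `lambda_runtime::run` with `service_fn` would invoke a handler along these lines once per event, and a Rig agent backed by GPT-4 Turbo would presumably replace the mock provider.

```rust
/// Hypothetical provider-agnostic completion interface.
/// This is NOT Rig's API; Rig defines its own traits for model access.
pub trait CompletionProvider {
    fn complete(&self, prompt: &str) -> Result<String, String>;
}

/// Hypothetical event shape: assumes the Lambda event carries one text prompt.
pub struct PromptEvent {
    pub prompt: String,
}

/// Hypothetical response returned to whoever invoked the function.
#[derive(Debug, PartialEq)]
pub struct PromptResponse {
    pub output: String,
}

/// Validates the event, calls the model once, and wraps the answer.
/// Generic over the provider so it can be tested without network access.
pub fn handle_event<P: CompletionProvider>(
    provider: &P,
    event: &PromptEvent,
) -> Result<PromptResponse, String> {
    let prompt = event.prompt.trim();
    if prompt.is_empty() {
        return Err(String::from("event contained an empty prompt"));
    }
    // Any provider error is propagated to the caller unchanged.
    let output = provider.complete(prompt)?;
    Ok(PromptResponse { output })
}

/// Mock provider that echoes the prompt, standing in for a real LLM in tests.
pub struct EchoProvider;

impl CompletionProvider for EchoProvider {
    fn complete(&self, prompt: &str) -> Result<String, String> {
        Ok(format!("echo: {}", prompt))
    }
}

fn main() {
    let event = PromptEvent {
        prompt: String::from("  Tell me a joke  "),
    };
    match handle_event(&EchoProvider, &event) {
        Ok(resp) => println!("{}", resp.output),
        Err(e) => eprintln!("error: {}", e),
    }
}
```

Keeping the model behind a trait lets the handler logic be unit-tested without AWS credentials or an OpenAI key. The cost is one extra layer of indirection between the runtime and the model call.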

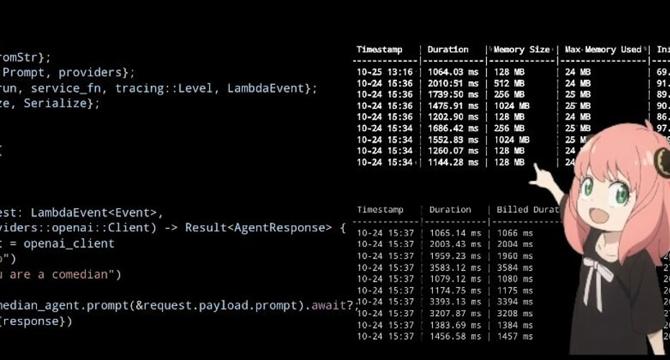

- Performance metrics for the Rig app on AWS Lambda showed an average memory usage of 26 MB per invocation and an average cold start time of 90.9 ms.

- For comparison, the same app was implemented with LangChain's Python library, as Langchain-entertainer, and deployed on AWS Lambda. Its average cold start time was 1,898.52 ms, roughly 21 times that of the Rig version.

- The post closes with Rig's future plans, including expanding LLM provider support, improving performance, and developing additional tools and libraries.

- The author encourages developers to contribute to Rig's growth and offers a chance to win $100 and have their project featured in Rig's showcase.

- To get started, developers need a local clone of the rig-entertainer-lambda crate, an AWS account, and an OpenAI API key.

- AWS Lambda supports Rust through its OS-only runtime on Amazon Linux 2023 (`provided.al2023`), used together with the Rust runtime client.

- The post covers two ways to deploy Rust Lambda functions to AWS: packaging the function with the AWS SAM CLI or the cargo lambda CLI, or building a container image from a Dockerfile and using that image for the Lambda function.
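On an OS-only runtime such as `provided.al2023`, Lambda starts an executable named `bootstrap`, and the runtime client inside it polls the Lambda Runtime API over HTTP for events. The sketch below shows which endpoints are involved. It only builds URLs and performs no requests. `RuntimeApi` and its methods are hypothetical names for illustration. The `AWS_LAMBDA_RUNTIME_API` environment variable and the `2018-06-01` path prefix are part of AWS's documented Runtime API. Crates such as `lambda_runtime` run this polling loop for you.

```rust
use std::env;

/// API version prefix shared by every Lambda Runtime API endpoint.
const API_VERSION: &str = "2018-06-01";

/// Hypothetical helper that builds Runtime API URLs for one host.
pub struct RuntimeApi {
    /// Value of AWS_LAMBDA_RUNTIME_API, a "host:port" string set by Lambda.
    host: String,
}

impl RuntimeApi {
    pub fn new(host: &str) -> Self {
        RuntimeApi {
            host: host.to_string(),
        }
    }

    /// Returns None outside Lambda, where the variable is normally unset.
    pub fn from_env() -> Option<Self> {
        env::var("AWS_LAMBDA_RUNTIME_API")
            .ok()
            .map(|h| RuntimeApi::new(&h))
    }

    fn base(&self) -> String {
        format!("http://{}/{}/runtime", self.host, API_VERSION)
    }

    /// GET: blocks until the next event arrives. The request ID comes back
    /// in the `Lambda-Runtime-Aws-Request-Id` response header.
    pub fn next_invocation(&self) -> String {
        format!("{}/invocation/next", self.base())
    }

    /// POST: sends the handler's successful result for one request.
    pub fn response(&self, request_id: &str) -> String {
        format!("{}/invocation/{}/response", self.base(), request_id)
    }

    /// POST: reports that the handler failed for one request.
    pub fn error(&self, request_id: &str) -> String {
        format!("{}/invocation/{}/error", self.base(), request_id)
    }
}

fn main() {
    // Outside Lambda, fall back to an example host so the sketch still runs.
    let api = RuntimeApi::from_env().unwrap_or_else(|| RuntimeApi::new("127.0.0.1:9001"));
    println!("{}", api.next_invocation());
    println!("{}", api.response("example-request-id"));
    println!("{}", api.error("example-request-id"));
}
```

Whether you deploy a zip built with cargo lambda or SAM, or a container image, the function still ends up running a `bootstrap` process that speaks this same protocol.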
