Hackernoon


Image Credit: Hackernoon

How to Implement ADA for Data Augmentation in Nonlinear Regression Models

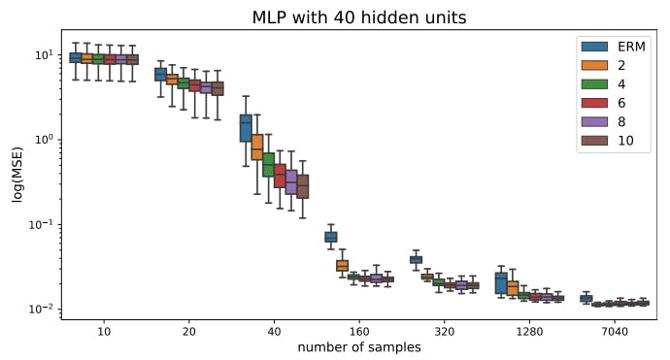

- The paper describes how to implement Anchored Data Augmentation (ADA), a data augmentation method for nonlinear regression models.

- The authors propose ADA as a way to generate minibatches of data for training neural networks, or any other nonlinear regressor, with stochastic gradient descent.

- The ADA algorithm is presented step by step; the augmentation is repeated with different parameter combinations for each minibatch.

- The paper is available on arXiv under the CC0 1.0 DEED license.
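
The summary above does not spell out the individual steps, so the following is only a minimal sketch of what per-minibatch augmentation could look like, not the authors' implementation. It assumes (none of this is stated in the summary) that each minibatch is split into clusters, that the one-hot cluster memberships form an anchor matrix `A` with projection `P = A (AᵀA)⁺ Aᵀ`, and that inputs and targets are both modified as `X + (sqrt(gamma) - 1) * P @ X`. The function names, the `gammas` list, and the random cluster assignment (a stand-in for a real clustering step such as k-means) are all hypothetical.

```python
import numpy as np


def anchor_projection(labels, n_clusters):
    """Projection onto the column space of a one-hot anchor matrix (assumed form)."""
    A = np.eye(n_clusters)[labels]              # shape (n, n_clusters), one-hot rows
    # pinv instead of inv so that empty clusters do not break the computation
    return A @ np.linalg.pinv(A.T @ A) @ A.T    # shape (n, n)


def ada_minibatch(X, y, labels, n_clusters, gamma):
    """Augment one minibatch; gamma = 1 leaves the data unchanged."""
    P = anchor_projection(labels, n_clusters)
    scale = np.sqrt(gamma) - 1.0
    # P @ X replaces each row by its cluster mean, so this moves samples
    # toward (gamma < 1) or away from (gamma > 1) the origin along that mean.
    X_aug = X + scale * (P @ X)
    y_aug = y + scale * (P @ y)
    return X_aug, y_aug


def ada_batches(X, y, batch_size, n_clusters, gammas, rng):
    """Yield augmented minibatches, drawing a new parameter choice per batch."""
    order = rng.permutation(X.shape[0])
    for start in range(0, X.shape[0], batch_size):
        idx = order[start:start + batch_size]
        gamma = rng.choice(gammas)              # new gamma for every minibatch
        # Hypothetical stand-in for clustering: random cluster labels.
        labels = rng.integers(0, n_clusters, size=idx.size)
        yield ada_minibatch(X[idx], y[idx], labels, n_clusters, gamma)
```

Each yielded pair can be passed to a stochastic gradient descent step in place of the raw minibatch. Drawing `gamma` and the cluster labels anew for every batch is one way to read "different parameter combinations for each minibatch".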
