Medium

Image Credit: Medium

How we decide among the best large language models

- MMLU (EM) and its variants, MMLU-Redux and MMLU-Pro, test language models on thousands of multiple-choice questions across many subjects, scored by exact match (EM), to gauge world knowledge and problem-solving skills.

- DROP (3-shot, F1) challenges models with discrete reasoning over paragraphs: '3-shot' means the prompt includes three worked examples, and the 'F1' metric scores reading-comprehension answers by how closely they overlap with the reference answer.

- IF-Eval (Prompt Strict) measures instruction following: under the strict prompt-level metric, a response counts only if it satisfies every instruction in the prompt.

- GPQA poses difficult science questions that demand advanced reasoning; the 'Pass@1' metric reports accuracy on the model's first response.

- SimpleQA tests factual accuracy across various topics; the 'Correct' metric is the share of questions the model answers correctly.

- FRAMES evaluates retrieval-augmented generation (RAG) systems on complex, multi-hop questions, focusing on factual accuracy, retrieval effectiveness, and reasoning skills.

- LongBench v2 assesses large language models on long-context understanding and reasoning tasks; models such as OpenAI’s o1-preview have exceeded the human baseline.

- HumanEval-Mul and LiveCodeBench evaluate LLMs in code generation tasks across different programming languages, emphasizing accuracy on the first attempt.

- Codeforces is a competitive programming platform that ranks participants by contest performance, with titles and color codes indicating proficiency; used as a benchmark, it shows where a model stands among those participants.

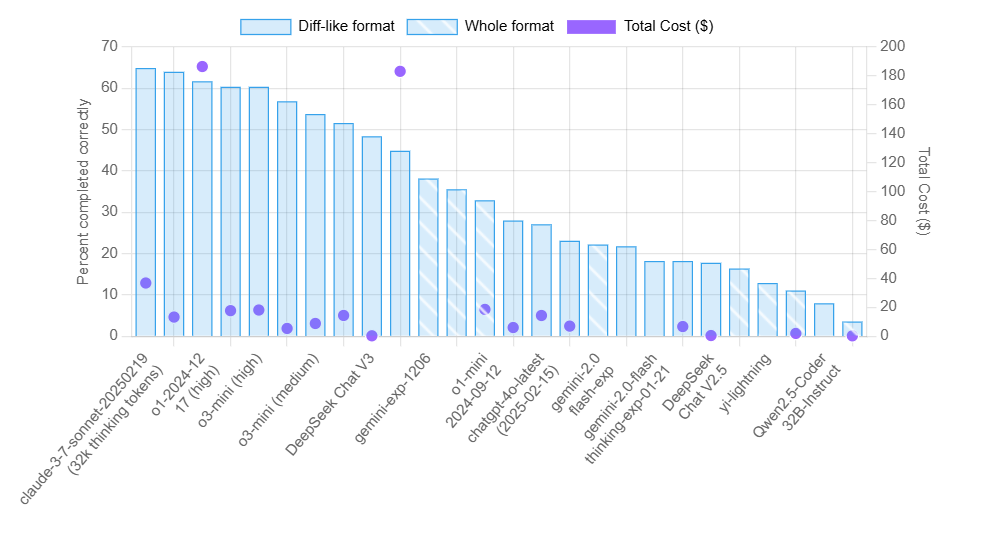

- SWE-bench Verified evaluates practical software engineering on tasks drawn from real GitHub repositories; each task has been validated by humans, which makes the benchmark more reliable.
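
The metrics named in the list above (EM, F1, Pass@1) can be made concrete with a small sketch. The function names here are hypothetical, and the normalization is a simplified assumption: official scorers such as the one shipped with DROP add extra handling for numbers and multi-span answers. The `pass_at_k` function follows the unbiased estimator introduced with HumanEval, which reduces to the plain success rate when k is 1.

```python
import re
import string
from collections import Counter
from math import comb


def normalize(text: str) -> list[str]:
    """Lowercase, drop punctuation and English articles, split into tokens.

    Simplified assumption: real benchmark scorers normalize more carefully.
    """
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def exact_match(prediction: str, reference: str) -> float:
    """EM: 1.0 if the normalized answers are identical, else 0.0."""
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction: str, reference: str) -> float:
    """F1: harmonic mean of token-level precision and recall."""
    pred, ref = normalize(prediction), normalize(reference)
    if not pred or not ref:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred == ref)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k from n sampled solutions, c of which pass the tests."""
    if n - c < k:
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    print(exact_match("The Eiffel Tower.", "eiffel tower"))
    print(token_f1("the cat sat", "cat sat down"))
    print(pass_at_k(n=10, c=3, k=1))
```

EM gives credit only for an identical answer, while F1 gives partial credit for overlapping tokens, which is why reading-comprehension benchmarks such as DROP often report F1.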
