Medium

Image Credit: Medium

Inference PaliGemma 2 with Transformers.js

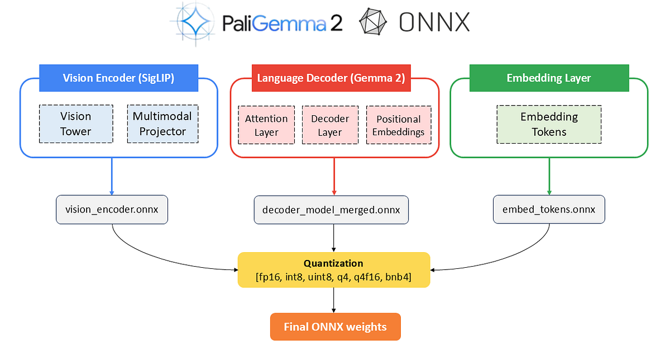

- PaliGemma 2, the latest vision-language model (VLM) in the Gemma family, pairs the SigLIP vision encoder with a Gemma 2 language decoder and is designed to adapt well to a range of transfer tasks.

- The article explains how to serve PaliGemma 2 with Transformers.js so that inference runs directly in the browser.

- The steps are: convert the PaliGemma 2 model components to ONNX format, quantize them, and upload the ONNX weights to Hugging Face for inference.

- Part I covers converting and quantizing the three components of PaliGemma 2 (the vision encoder, the language decoder, and the token embedding layer) so that they are compatible with Transformers.js.

- Part II demonstrates running inference with the converted model, either on Colab or in a Node.js web app.

- The article showcases a Node.js web app that accepts an image and a text prompt and passes them to the converted PaliGemma 2 model.

- The author shares what they learned from using ONNX to run PaliGemma 2 outside a Python environment, including the effort the project required.

- The author thanks the ONNX community and the Google ML Developers Program team for their support and resources.

- The article closes with reference links and invites readers to explore them.

- The demonstration includes inference results from PaliGemma 2 Mix models running on Transformers.js, which the article presents as performing well.

- The author invites readers to star the repository and share the work, and hints at future blog posts on related topics.
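The inference step summarized above can be sketched roughly as follows. This is a minimal illustration, not the article's own code: it assumes the `@huggingface/transformers` package is installed and that the model repository holds ONNX files for the three converted components. The helper names `buildPrompt` and `runPaliGemma`, the `DTYPES` values, and the `max_new_tokens` limit are all assumptions chosen for the example. The task prefixes follow the prompt conventions published for PaliGemma Mix checkpoints.

```javascript
// Assumed per-component precisions; the right values depend on which
// quantized ONNX files were actually uploaded to the model repository.
const DTYPES = {
  embed_tokens: "fp16",
  vision_encoder: "fp16",
  decoder_model_merged: "q4",
};

// Hypothetical helper: builds a task prompt for a PaliGemma 2 Mix checkpoint.
const TASK_PREFIXES = new Map([
  ["caption", "caption en"],
  ["ocr", "ocr"],
  ["answer", "answer en"],
  ["detect", "detect"],
  ["segment", "segment"],
]);

function buildPrompt(task, text = "") {
  if (!TASK_PREFIXES.has(task)) {
    throw new Error(`Unknown task: ${task}`);
  }
  // The leading <image> token marks where the image embeddings are placed.
  const body = [TASK_PREFIXES.get(task), text].filter(Boolean).join(" ");
  return `<image>${body}`;
}

// Hypothetical helper: loads the converted model and answers one prompt.
// The library is imported lazily so the file loads even before it is installed.
async function runPaliGemma(modelId, imageUrl, prompt) {
  const { AutoProcessor, PaliGemmaForConditionalGeneration, load_image } =
    await import("@huggingface/transformers");

  const processor = await AutoProcessor.from_pretrained(modelId);
  const model = await PaliGemmaForConditionalGeneration.from_pretrained(
    modelId,
    { dtype: DTYPES },
  );

  const image = await load_image(imageUrl);
  const inputs = await processor(image, prompt);

  const output = await model.generate({ ...inputs, max_new_tokens: 100 });

  // Drop the prompt tokens so only the newly generated text is decoded.
  const generated = output.slice(null, [inputs.input_ids.dims[1], null]);
  return processor.batch_decode(generated, { skip_special_tokens: true })[0];
}
```

A call such as `await runPaliGemma(modelId, imageUrl, buildPrompt("detect", "car"))` would download the weights on first use, so the initial request is likely to be much slower than later ones.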
