Medium


Image Credit: Medium

Introducing Transfer Learning Fundamentals: CIFAR-10, MobileNetV2, Fine-Tuning, and Beyond

- This article introduces transfer learning fundamentals using the CIFAR-10 dataset and MobileNetV2 to tackle image classification tasks.

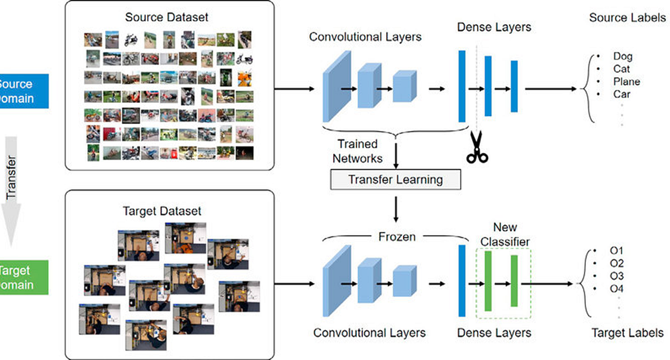

- Transfer learning is a technique in which knowledge gained on one task is reused to help solve a related task, which makes training more efficient.

- In deep learning, a network's early layers learn general features while its later layers learn task-specific ones, so transfer learning reuses the early layers.

- Transfer learning is not always a good fit, however, especially when the source and target domains are semantically distant or the task objectives differ.

- The article delves into the process of using MobileNetV2 for feature extraction on the CIFAR-10 dataset and fine-tuning for improved accuracy.

- The initial training run with MobileNetV2 reached 85% accuracy, showing the effectiveness of transfer learning.

- Fine-tuning the model then reached a test accuracy of 91%, demonstrating how efficiently transfer learning can improve model performance.

- The article emphasizes the importance of leveraging pre-trained models for efficiency, especially in scenarios with limited data and computational resources.

- Readers are encouraged to explore further by attempting aggressive fine-tuning, trying different architectures, adjusting augmentation strategies, and deploying models to broader platforms.

- Overall, the article serves as a practical guide to understanding and implementing transfer learning in deep learning projects.
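
The two stages summarized above, feature extraction with a frozen MobileNetV2 followed by fine-tuning, could look roughly like the sketch below. It is not the article's code: it assumes TensorFlow/Keras, and the function names, the 96x96 resize, the learning rates, and the number of unfrozen layers are all illustrative choices.

```python
import tensorflow as tf


def build_feature_extractor(num_classes=10, input_size=96, weights="imagenet"):
    """Stage 1: frozen MobileNetV2 base with a new classification head.

    input_size and the head design are assumptions made for this sketch.
    """
    base = tf.keras.applications.MobileNetV2(
        input_shape=(input_size, input_size, 3),
        include_top=False,  # drop the ImageNet classifier
        weights=weights,
    )
    base.trainable = False  # reuse the pretrained features unchanged

    inputs = tf.keras.Input(shape=(32, 32, 3))  # CIFAR-10 images are 32x32 RGB
    x = tf.keras.layers.Resizing(input_size, input_size)(inputs)
    # MobileNetV2 expects pixel values in [-1, 1]; inputs are assumed to be in [0, 255]
    x = tf.keras.layers.Rescaling(1.0 / 127.5, offset=-1.0)(x)
    x = base(x, training=False)  # keep BatchNorm layers in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(1e-3),
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model, base


def unfreeze_top_layers(model, base, num_unfrozen=30, learning_rate=1e-5):
    """Stage 2: make the last num_unfrozen base layers trainable.

    The model is recompiled with a small learning rate so the pretrained
    weights are adjusted gently. Both defaults are illustrative.
    """
    base.trainable = True
    for layer in base.layers[:-num_unfrozen]:
        layer.trainable = False  # earlier, more general layers stay frozen

    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate),
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

In use, one would load the data with `tf.keras.datasets.cifar10.load_data()`, call `model.fit` on the model from `build_feature_extractor`, then call `unfreeze_top_layers` and fit again for a few more epochs. The accuracy obtained depends on these settings and is not guaranteed to match the figures reported in the article.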
