Medium


Image Credit: Medium

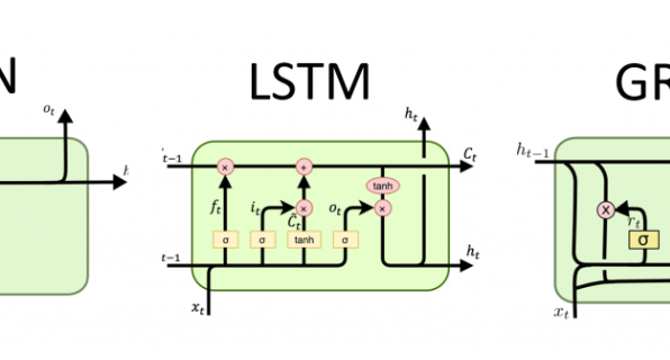

Introduction to Recurrent Neural Networks: Classic RNN, LSTM, and GRU

- Classic RNNs struggle to retain information over long sequences due to the vanishing gradient problem.

- LSTMs are powerful but computationally expensive and require significant memory.

- GRUs may not perform as well as LSTMs when dealing with extremely long or complex dependencies.

- Even the best models, like LSTMs, cannot anticipate unexpected events in the stock market.
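The trade-offs in the points above can be made concrete with a rough sketch. It assumes the textbook formulation in which a classic RNN cell has one weight block, a GRU has three (two gates plus a candidate state), and an LSTM has four (three gates plus a candidate state), each with a single bias vector; real libraries may count biases differently. The names `block_params`, `cell_params`, and `linear_gradient_factor` are hypothetical helpers for illustration, and the gradient example uses a simplified scalar linear recurrence rather than a full RNN.

```python
# Illustrative sketch only: helper names and sizes are assumptions,
# not taken from the article.

# Number of weight blocks per cell in the textbook formulation.
BLOCKS = {"rnn": 1, "gru": 3, "lstm": 4}


def block_params(input_size: int, hidden_size: int) -> int:
    """Parameters in one block: input weights, recurrent weights, one bias."""
    return hidden_size * (input_size + hidden_size) + hidden_size


def cell_params(kind: str, input_size: int, hidden_size: int) -> int:
    """Total trainable parameters for one recurrent cell of the given kind."""
    return BLOCKS[kind] * block_params(input_size, hidden_size)


def linear_gradient_factor(w: float, steps: int) -> float:
    """For the scalar recurrence h_t = w * h_{t-1}, d h_T / d h_0 = w ** T.

    With |w| < 1 this factor shrinks exponentially in the number of steps,
    which is the simplest picture of a vanishing gradient.
    """
    return w ** steps


if __name__ == "__main__":
    for kind in ("rnn", "gru", "lstm"):
        print(kind, cell_params(kind, input_size=10, hidden_size=20))
    for steps in (10, 50, 100):
        print(steps, linear_gradient_factor(0.9, steps))
```

Under these assumptions an LSTM cell carries four times the parameters of a classic RNN cell of the same size and a GRU three times, which is one way to read the cost and memory remarks above.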
