Netflixtechblog

Image Credit: Netflixtechblog

Investigation of a Workbench UI Latency Issue

- The Analytics and Developer Experience organization at Netflix offers a product called Workbench, which lets data practitioners work on big data and machine learning use cases at scale. Recently, several users reported that their JupyterLab UI became slow and unresponsive when running certain notebooks. Itay Dafna devised a simple and effective method to quantify the UI slowness, and we observed latencies ranging from 1 to 10 seconds, averaging 7.4 seconds.

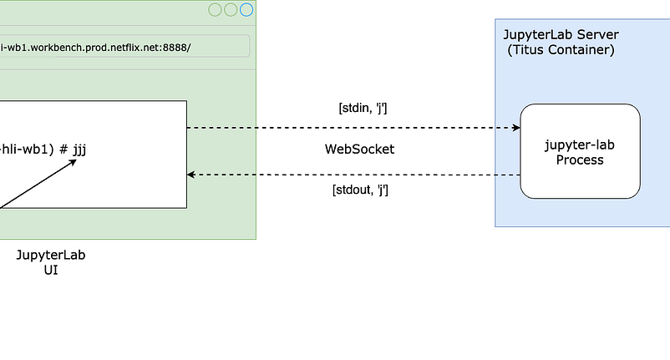
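The summary does not say how the slowness was quantified. As a hedged illustration only, and not Itay Dafna's method, one way to put numbers on UI latency is to time repeated round trips to the jupyter-lab server and report the minimum, maximum, and mean, like the figures above. The function names and the example URL are hypothetical.

```python
import statistics
import time
import urllib.request
from typing import Callable, Dict, List


def time_probe(probe: Callable[[], None], samples: int = 10, pause_s: float = 1.0) -> List[float]:
    """Call `probe` repeatedly and return each call's wall-clock latency in seconds."""
    latencies: List[float] = []
    for i in range(samples):
        start = time.perf_counter()
        probe()
        latencies.append(time.perf_counter() - start)
        if i < samples - 1:
            time.sleep(pause_s)  # space samples out so they cover a longer window
    return latencies


def summarize(latencies: List[float]) -> Dict[str, float]:
    """Reduce latency samples to min / max / mean figures."""
    return {
        "min": min(latencies),
        "max": max(latencies),
        "mean": statistics.fmean(latencies),
    }


def http_probe(url: str, timeout_s: float = 30.0) -> Callable[[], None]:
    """Build a probe that fetches `url` (hypothetical: any cheap endpoint served by jupyter-lab)."""
    def probe() -> None:
        with urllib.request.urlopen(url, timeout=timeout_s) as response:
            response.read()
    return probe
```

For example, `summarize(time_probe(http_probe("http://localhost:8888/api"), samples=20))` against a local server. Since Jupyter Server handles requests on a Tornado event loop, time the server process spends on other work tends to show up as probe latency.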

- pystan was an early suspect, but the UI was also slow in cases where pystan was not used, so pystan alone did not explain the problem. The Workbench instance runs as a Titus container, and to use compute resources efficiently, Titus employs a CPU oversubscription feature, so CPU contention from co-located workloads was another possibility to rule out.
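One way to check whether oversubscription is starving the jupyter-lab process (not necessarily what the team did) is to measure how long it waits on the kernel's run queue. This sketch assumes Linux and reads `/proc/<pid>/schedstat`, whose first two fields are the time spent running on a CPU and the time spent waiting to run, both in nanoseconds. The function names are hypothetical.

```python
import os
import time
from typing import Tuple


def parse_schedstat(text: str) -> Tuple[int, int]:
    """Parse /proc/<pid>/schedstat: on-CPU ns, run-queue wait ns, timeslice count."""
    on_cpu_ns, wait_ns, _timeslices = (int(field) for field in text.split())
    return on_cpu_ns, wait_ns


def read_schedstat(pid: int) -> Tuple[int, int]:
    # For a multi-threaded process this file reflects the main thread only;
    # per-thread figures live under /proc/<pid>/task/<tid>/schedstat.
    with open(f"/proc/{pid}/schedstat") as f:
        return parse_schedstat(f.read())


def runqueue_wait_ratio(pid: int, interval_s: float = 5.0) -> float:
    """Fraction of the interval's runnable time that `pid` spent waiting for a CPU."""
    cpu_before, wait_before = read_schedstat(pid)
    time.sleep(interval_s)
    cpu_after, wait_after = read_schedstat(pid)
    ran = cpu_after - cpu_before
    waited = wait_after - wait_before
    runnable = ran + waited  # time spent either on a CPU or queued for one
    return waited / runnable if runnable else 0.0
```

A ratio near 0 for the jupyter-lab pid means it gets a CPU promptly when it wants one, which would point away from noisy neighbors.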

- The next theory was that the network between the web browser on the user's laptop and the JupyterLab server was slow. Another pattern emerged: the more CPUs the instance had, the slower the UI became. It turned out that the extension used to monitor CPU usage was itself causing CPU contention.
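The CPU-count dependence suggests a reproduction whose process count scales with the machine. The sketch below is a hypothetical notebook workload, not the users' actual notebooks: it starts one CPU-bound worker per CPU, so a larger instance produces more grandchild processes under jupyter-lab. The function names are invented for illustration.

```python
import multiprocessing as mp
import os
import time
from typing import Optional


def busy_work(seconds: float) -> int:
    """Spin on the CPU for `seconds` and return how many loop iterations ran."""
    deadline = time.monotonic() + seconds
    iterations = 0
    while time.monotonic() < deadline:
        iterations += 1
    return iterations


def run_one_worker_per_cpu(seconds: float = 60.0, workers: Optional[int] = None) -> int:
    """Hypothetical repro: keep one CPU-bound worker busy per CPU for `seconds`."""
    count = workers or os.cpu_count() or 1
    # Assumes Linux: the "fork" context lets functions defined in a notebook
    # cell be used by the pool without being importable from a module.
    ctx = mp.get_context("fork")
    # In a notebook, the kernel is a child of jupyter-lab, so these pool
    # workers are grandchildren of the jupyter-lab process.
    with ctx.Pool(processes=count) as pool:
        results = pool.map(busy_work, [seconds] * count)
    return len(results)
```

Running `run_one_worker_per_cpu()` in a cell on instances with different CPU counts, while measuring UI latency, is one way to test whether the slowdown tracks the number of processes.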

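- We used py-spy to profile the jupyter-lab process and found that much of its CPU time was spent in a function called `__parse_smaps_rollup`, which, as its name suggests, parses a process's `/proc/<pid>/smaps_rollup` file. Starting many grandchild processes from the child process (the notebook kernel) slows down the parent jupyter-lab process.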
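To see why many grandchildren hurt, the sketch below mimics what a per-process memory monitor does on each refresh: find every descendant of a process and read its `/proc/<pid>/smaps_rollup`. It is a hypothetical stand-in, not the jupyter-resource-usage code; the helper names are invented, and it assumes Linux 4.14 or later, where `smaps_rollup` exists. The kernel produces that file by walking the process's memory mappings, so each refresh pays that cost once per descendant.

```python
import os
import time
from typing import Dict, List


def parent_map() -> Dict[int, int]:
    """Map every visible pid to its parent pid using /proc/<pid>/stat."""
    parents: Dict[int, int] = {}
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue
        try:
            with open(f"/proc/{entry}/stat") as f:
                stat = f.read()
        except OSError:
            continue  # the process exited or is not readable
        # comm (field 2) is parenthesized and may contain spaces, so split
        # after the last ')'; the fields that follow are state, then ppid.
        fields = stat.rsplit(")", 1)[1].split()
        parents[int(entry)] = int(fields[1])
    return parents


def descendants(root: int) -> List[int]:
    """Return all children, grandchildren, etc. of `root`."""
    children: Dict[int, List[int]] = {}
    for pid, ppid in parent_map().items():
        children.setdefault(ppid, []).append(pid)
    found: List[int] = []
    stack = [root]
    while stack:
        for child in children.get(stack.pop(), []):
            found.append(child)
            stack.append(child)
    return found


def parse_smaps_rollup(text: str) -> Dict[str, int]:
    """Parse 'Key:   123 kB' lines; the address-range header line is skipped."""
    values: Dict[str, int] = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and len(parts) == 2 and parts[1] == "kB":
            values[key.strip()] = int(parts[0])
    return values


def time_memory_scan(root: int) -> float:
    """Read smaps_rollup for every descendant of `root`, as a per-process
    memory monitor might on each refresh, and return the elapsed seconds."""
    start = time.perf_counter()
    for pid in descendants(root):
        try:
            with open(f"/proc/{pid}/smaps_rollup") as f:
                parse_smaps_rollup(f.read())
        except OSError:
            continue  # exited, not permitted, or kernel older than 4.14
    return time.perf_counter() - start
```

Calling `time_memory_scan` on the jupyter-lab pid before and after starting many workers in a notebook gives a rough sense of how the per-refresh cost grows.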

- The extension in question, jupyter-resource-usage, displays the CPU and memory usage of the notebook process in the status bar at the bottom of the notebook. Disabling it met the users' requirements for UI responsiveness.
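The usual way to turn a server extension off is `jupyter server extension disable jupyter_resource_usage`, which records the setting in a JSON config file. As a sketch of what that setting looks like, assuming Jupyter Server's `ServerApp.jpserver_extensions` option and a config path you choose (for example a directory listed by `jupyter --paths`), the helpers below write it directly. The helper names are hypothetical.

```python
import json
from pathlib import Path

SERVER_EXTENSION = "jupyter_resource_usage"  # import name of jupyter-resource-usage


def with_extension_disabled(config: dict, name: str = SERVER_EXTENSION) -> dict:
    """Return a copy of a Jupyter Server config dict with `name` turned off."""
    updated = json.loads(json.dumps(config))  # deep copy via a JSON round trip
    server_app = updated.setdefault("ServerApp", {})
    server_app.setdefault("jpserver_extensions", {})[name] = False
    return updated


def disable_in_file(config_path: Path, name: str = SERVER_EXTENSION) -> None:
    """Rewrite a JSON config file (created if missing) with the extension disabled."""
    current = json.loads(config_path.read_text()) if config_path.exists() else {}
    config_path.parent.mkdir(parents=True, exist_ok=True)
    config_path.write_text(json.dumps(with_extension_disabled(current, name), indent=2) + "\n")
```

Working on a copy keeps any existing settings in the file intact; the server picks up the change on its next start.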

- This was a challenging issue that required debugging from the UI all the way down to the Linux kernel. Overall, the cost grows linearly with both the number of CPUs and the virtual memory size, two dimensions that are generally viewed separately.
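One simplified way to read the "linear in both" observation, assuming a monitor that scans roughly one worker process per CPU on each refresh and a per-process read cost proportional to that process's virtual memory size, is:

$$
T_{\text{refresh}} \;\approx\; \sum_{p=1}^{N_{\text{CPU}}} c\,V_p \;=\; c\,N_{\text{CPU}}\,\bar{V}
$$

Here $c$ is an assumed scan cost per unit of virtual memory, $V_p$ is the virtual memory size of process $p$, and $\bar{V}$ is their mean. Under this model the two factors multiply: doubling either one doubles the refresh time, and doubling both quadruples it.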

- If you're excited by technical challenges like this one and want the opportunity to solve complex problems and drive innovation, consider joining our Data Platform teams. Be part of shaping the future of Data Security and Infrastructure, Data Developer Experience, Analytics Infrastructure and Enablement, and more.
