Medium

1w

Image Credit: Medium

Leveraging LLMs to Build Semantic Embeddings for BI

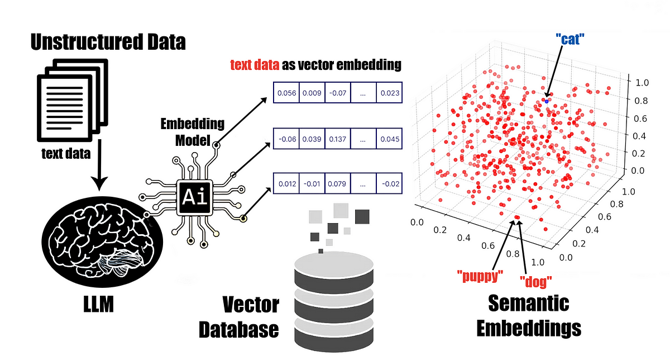

- Large Language Models (LLMs) generate semantic embeddings: numerical vectors that represent unstructured data in a form machines can compare.

- Because LLMs encode text in context, their embeddings capture relationships between words and phrases in a high-dimensional space.

- Stored alongside metadata, these embeddings support fast retrieval based on semantic similarity rather than simple keyword matching.

- These embeddings enhance AI applications like smarter chatbots, recommendation systems, sentiment analysis and topic modelling.

- LLMs generate embeddings that group documents by meaning rather than just keywords, which powers smarter recommendations and improves customer experiences.

- Integrating LLM-generated embeddings into your BI systems can personalize dashboards, tailor content and metrics to individual roles, and enhance knowledge retrieval through semantic search.

- Before implementation, three preparatory steps provide a structured foundation: creating a development environment suited to experimentation, selecting flexible, scalable frameworks and tools, and identifying practical use cases.

- Implementing LLM-generated semantic embeddings in BI workflows involves a sequence of steps: setting up pre-trained models and tools, testing with small data, leveraging flexible frameworks, integrating a vector database, enhancing the workflow with auxiliary tools, and embedding the result in the BI workflow.

- LLM-generated semantic embeddings can transform a business intelligence strategy by enhancing search, improving recommendations, and streamlining document management.

- By integrating LLM-generated semantic embeddings into BI workflows, organizations can minimize risks and unlock the full potential of semantic embeddings.
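The retrieval idea in the points above (embeddings stored with metadata and ranked by semantic similarity) can be sketched in a few lines. This is a minimal illustration and not the article's implementation: the three-dimensional vectors are hand-written placeholders standing in for the output of a real embedding model, and the `Record` class, the `search` function, and the `role` metadata field are hypothetical names introduced only for this example. A production setup would presumably replace the in-memory list with a vector database.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Record:
    """One stored item: source text, its embedding, and free-form metadata."""
    text: str
    vector: list[float]
    metadata: dict = field(default_factory=dict)


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(records, query_vector, top_k=2, where=None):
    """Filter records by exact metadata match, then rank them by similarity to the query."""
    where = where or {}
    candidates = [
        r for r in records
        if all(r.metadata.get(key) == value for key, value in where.items())
    ]
    scored = [(cosine_similarity(r.vector, query_vector), r) for r in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


# Placeholder vectors: in practice these would come from an embedding model.
records = [
    Record("Q3 revenue grew in the EMEA region", [0.9, 0.1, 0.0], {"role": "finance"}),
    Record("Customer churn rose among trial users", [0.1, 0.9, 0.2], {"role": "product"}),
    Record("Sales income increased across Europe", [0.8, 0.2, 0.1], {"role": "finance"}),
]

# Placeholder embedding of a query such as "how did European revenue change?"
query_vector = [0.85, 0.15, 0.05]

results = search(records, query_vector, top_k=2, where={"role": "finance"})
for score, record in results:
    print(f"{score:.3f}  {record.text}")
```

The two finance records share no keywords with each other beyond stop words, yet their vectors sit close together, which is the behavior the summary attributes to semantic search. The metadata filter runs before ranking so that a role-specific dashboard only scores the documents relevant to that role.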
