Data Analytics News

Siliconangle

Data fabric startup Promethium enables self-service data access for AI agents

- Promethium Inc. updates Instant Data Fabric platform for AI self-service data access.

- New agentic chatbot Mantra provides instant data answers via natural language.

- Promethium's tool aids data teams in analytics, giving quick access to insights.

- No ETL process is needed; users can make quick, data-driven decisions using data from various sources.

- AI agents can easily access diverse data through unified access with fine-grained controls.

Medium

25 Essential Mathematical Concepts for Data Science

- Maximum Likelihood Estimation (MLE) estimates model parameters by maximizing the likelihood of the observed data.

- Gradient Descent minimizes a function by repeatedly adjusting parameters in the direction opposite to the gradient.

- Normal Distribution models data values that cluster symmetrically around the mean.

- Sigmoid function maps inputs to values between 0 and 1 in neural networks.

- Cosine Similarity measures similarity between vectors in text analysis and recommendations.
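
Three of the concepts listed above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not code from the article; the function names, the learning rate, and the example function f(x) = (x - 3)^2 are assumptions chosen for the example.

```python
import math


def sigmoid(x: float) -> float:
    """Map a real input to a value strictly between 0 and 1.

    Fine for moderate inputs; very large negative x would overflow math.exp.
    """
    return 1.0 / (1.0 + math.exp(-x))


def cosine_similarity(a, b) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def gradient_descent(grad, start: float, learning_rate: float = 0.1, steps: int = 100) -> float:
    """Minimize a one-dimensional function by stepping against its gradient."""
    x = start
    for _ in range(steps):
        x -= learning_rate * grad(x)
    return x


# Hypothetical example: minimize f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
minimum = gradient_descent(lambda x: 2 * (x - 3), start=0.0)
```

Here `minimum` ends up very close to 3, the point where the example function is smallest, and `cosine_similarity` returns 1 for vectors pointing the same way and 0 for perpendicular ones.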

Cloudblog

SQL reimagined: How pipe syntax is powering real-world use cases

- Traditional SQL limitations like rigid clause structures and complex nested queries are being addressed.

- Google introduced pipe syntax to enhance readability and ease of writing queries.

- Pipe syntax allows a linear, top-down approach to writing queries, simplifying data transformations and log analysis.

- Users can streamline data analysis, simplify data pipelines, and enhance log analysis efficiency.
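
Pipe syntax itself is a SQL feature, so the sketch below is only an analogy in Python: it applies filter, aggregate, and sort steps one after another, in the order they are written, to a small made-up list of log records. The `pipe` helper, the step functions, and the sample data are all hypothetical and are not part of any Google API.

```python
from collections import Counter
from functools import reduce


def pipe(data, *steps):
    """Apply each step to the result of the previous one, top to bottom."""
    return reduce(lambda acc, step: step(acc), steps, data)


# Hypothetical log records, invented for this example.
logs = [
    {"service": "api", "level": "ERROR"},
    {"service": "api", "level": "INFO"},
    {"service": "db", "level": "ERROR"},
    {"service": "api", "level": "ERROR"},
]


def only_errors(rows):
    """Filter step: keep only error records."""
    return [row for row in rows if row["level"] == "ERROR"]


def count_by_service(rows):
    """Aggregate step: count records per service."""
    return Counter(row["service"] for row in rows)


def most_frequent_first(counts):
    """Order step: list (service, count) pairs by descending count."""
    return counts.most_common()


# Steps read in the order they run, unlike a nested query read inside-out.
error_counts = pipe(logs, only_errors, count_by_service, most_frequent_first)
```

The point of the analogy is readability: each step consumes the previous step's output, so the transformation can be followed from top to bottom.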

Pymnts

Martini Puts a Real-Time AI Corporate Credit Risk Model in Everyone’s Hand

- Martini.ai's model revolutionizes how corporate credit risk is priced, traded, and assessed.

- Accessible to all, the AI model processes market data to generate real-time risk signals.

- It aims to provide instant credit insights to a wide range of users.

- The platform utilizes deep learning models and dynamic credit mapping to assess systemic exposure.

Semiengineering

20

Image Credit: Semiengineering

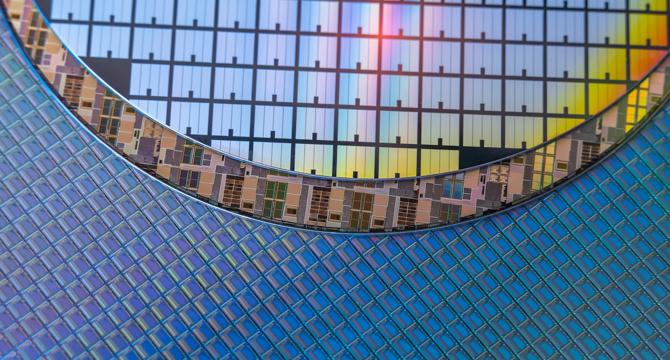

Harnessing The Power Of Data To Remain Competitive

- The semiconductor industry uses data to stay competitive by optimizing decision-making and operations.

- With PDF Solutions' Exensio platform, manufacturers can leverage advanced analytics for superior results.

- The platform offers end-to-end visibility, real-time data collection, AI integration, and enhanced supply chain traceability.

- It addresses key manufacturing challenges, improving yield, quality, speed to market, and engineering efficiency.

Cloudblog

Zero-shot forecasting in BigQuery with the TimesFM foundation model

- BigQuery now integrates TimesFM, a pre-trained forecasting model from Google Research, to enable accurate time-series predictions for various business applications.

- TimesFM is a decoder-only transformer model trained on 400 billion real-world time points, providing 'zero-shot' forecasting capabilities without the need for additional training.

- TimesFM 2.0, with 500 million parameters, is now native to BigQuery, offering fast and scalable forecasting of millions of time series with a single SQL query.

- For quick and general forecasting needs, TimesFM is recommended, while ARIMA_PLUS is more suitable for fine-tuning, explainable results, and leveraging longer historical data.

Pymnts

Tally Tumbler Team Masters Amazon Strategy to Drive Seasonal Sales

- Tally Tumbler, a hybrid beverage container and scorekeeping tool, was born out of a recreational bocce game in Virginia Beach and has since found success in the seasonal sales market.

- Co-founders Matt Butler and Kelvin Sealy tested their product on Kickstarter, focusing on the golf vertical and leveraging strategic Amazon placement to expand their reach.

- Insights from Amazon's analytics have shaped Tally Tumbler's marketing strategy, revealing that female buyers are a significant demographic for the product.

- The company faces challenges in inventory management for its seasonal product but has optimized campaigns, ad spend, and fulfillment using tools like Shopify integrations and Fulfillment by Amazon (FBA).

Pymnts

AI Startups: Aether Taps AI to Spot Market Sentiment Shifts in Real Time

- Aether Holdings, a FinTech firm in New York, uses AI to detect market sentiment changes in real time for better investment decisions.

- The company's AI platform combines predictive analytics, large language models, data, and human analysts to guide investors, especially during volatile market conditions.

- Aether's 'Alternating Between Stocks and Gold' model, based on historical data, has returned 20.23% this year, compared with the S&P 500's 5.9%.

- Aether aims to simplify financial decisions by blending proven strategies, modern AI, and data, catering to the increasing demand for financial guidance as highlighted in a recent PYMNTS report.

Medium

Call@+91 9966606957. №1 Artificial Intelligence Course (AI), Gen AI Training in Ameerpet, Hyderabad

- AI TECH in Hyderabad offers an extensive Artificial Intelligence course covering topics like Python, Machine Learning, Deep Learning, NLP, RNNs, GANs, and Business Intelligence Tools.

- The course modules include AI training, historical milestones in AI, DSA using Python, Machine Learning techniques, Data Science fundamentals, NLP concepts, AI in robotics, and the future of AI.

- Students will work on real-time live projects, learn to build ML models, perform data collection and preprocessing, create dashboards for data visualization, develop chatbots, and understand generative AI models like GANs and transformers.

- Upon completion, participants will be equipped to take on roles such as Data Scientists, Machine Learning Engineers, AI Research Scientists, Data Analysts, NLP Engineers, and more, with hands-on experience and placement assistance.

Medium

I Built a GPT After a Voice Told Me to Die

- The author built the La Matriz Color Oracle GPT after a voice told them to die, using synesthetic perception and geometry to ground themselves.

- The La Matriz Color Oracle GPT maps emotional language to RGB color values based on 11 emotional trajectories, offering insight and metaphorical understanding.

- This tool was created as a means for non-linear healing, catering to those whose knowing doesn't align with logic and whose data is spiritual.

- The author emphasizes that this creation is not about branding but a way to make beauty from surviving psychic manipulation, gender distortion, or spiritual exile.

Semiengineering

On-chip Monitor Analytics Scales With Silicon Chip Production From NPI Through HVM

- New Product Introduction (NPI) assesses power and performance with a limited dataset.

- Monitor analytics aids the transition from NPI to High Volume Manufacturing (HVM).

- An analytics solution is crucial for detecting design-related issues and ensuring product specifications are met.

- NPI data analysis guides adaptive test limits and identifies the key areas to prioritize in HVM.

Semiengineering

The Data Dilemma In Semiconductor Testing And Why It Matters: Part 2

- ACS Data Feed Forward (DFF) is a cloud-enabled solution that simplifies and automates the transfer of device test data between test floors in semiconductor testing.

- DFF works alongside Advantest's Real-Time Data Infrastructure (RTDI) to create a seamless data pipeline, allowing engineering teams to focus on developing innovative test strategies.

- The process involves capturing data at the source test cell, filtering and preparing the data, securely transferring it via Advantest ACS Cloud, and delivering it to the destination test floor ready for immediate use.

- ACS DFF offers automated data transfer, pre-filtered data, timely delivery, and user-friendly interfaces, making it a game-changer in modern semiconductor manufacturing for running smarter tests and improving outcomes.

Cloudblog

How to tap into natural language AI services using the Conversational Analytics API

- The Conversational Analytics API powered by Gemini offers an easier way to gain insights from data by leveraging natural language queries.

- The API integrates tools like NL2Query, Python code interpreter, and context retrieval to provide accurate and relevant answers to user queries.

- The NL2Query engine translates natural language questions into queries suitable for BigQuery or Looker, simplifying the data retrieval process.

- The Python code interpreter allows for advanced analytics beyond standard SQL, enabling complex calculations and statistical analyses through a conversational interface.

Cloudblog

Beyond GROUP BY: Introducing advanced aggregation functions in BigQuery

- BigQuery introduces advanced aggregation functions like GROUPING SETS, UDAFs, and Approximate Aggregates.

- New features include speedy groupings, reliable UDAFs, and efficient approximate estimation methods.

- These enhancements cater to complex data analytics needs for improved insights and performance.
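
As a rough illustration of what GROUPING SETS computes, the Python sketch below aggregates the same rows at several grouping levels in a single call, much as one query with GROUPING SETS returns several GROUP BY results together. The sample sales rows and the `grouping_sets` helper are made up for this example; this is not BigQuery code and says nothing about how BigQuery implements the feature.

```python
from collections import defaultdict

# Hypothetical sales rows, invented for this example.
sales = [
    {"region": "east", "product": "a", "amount": 10},
    {"region": "east", "product": "b", "amount": 5},
    {"region": "west", "product": "a", "amount": 7},
]


def grouping_sets(rows, sets, value):
    """Sum `value` once per grouping set; each set is a tuple of column names."""
    results = {}
    for keys in sets:
        totals = defaultdict(int)
        for row in rows:
            group = tuple(row[key] for key in keys)  # () means the grand total
            totals[group] += row[value]
        results[keys] = dict(totals)
    return results


# Per (region, product), per region, and the grand total in one result.
totals = grouping_sets(
    sales,
    [("region", "product"), ("region",), ()],
    "amount",
)
```

In this sketch the empty tuple plays the role of the empty grouping set, which produces the overall total alongside the finer-grained subtotals.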

Medium

The Night I Decoded My First Million-Dollar Pattern: A Data Scientist’s Awakening

- A data scientist shares their journey of decoding a million-dollar pattern in click-stream logs from an e-commerce site, highlighting the importance of translating hidden human stories into actionable decisions.

- Data scientists are compared to modern-day archaeologists, emphasizing the process of collecting and cleaning data, analyzing and modeling with algorithms, and visualizing insights to tell compelling stories.

- The narrative emphasizes continuous learning, tool minimalism, and a shift in focus from tools alone to what they make possible in data science.

- The article also touches on impactful applications of data science in healthcare, e-commerce, and finance, stressing the importance of ethical considerations, fairness, and transparency in data-driven decision-making.
