Medium

Image Credit: Medium

Linear Algebra For Machine Learning — Explained Visually

- Linear algebra is the language behind high-dimensional data, embeddings, PCA, and neural networks in machine learning.

- Understanding linear algebra helps you improve models and debug them when issues arise.

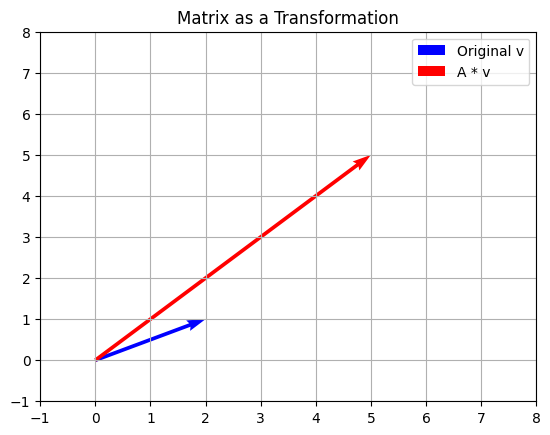

- Matrices act on vectors as linear transformations that can rotate, scale, flip, or squash data.

- Eigenvalues and eigenvectors play a significant role in understanding transformations and finding hidden structures in data for applications like PCA and SVD.
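
The points above about matrices as transformations and about eigenvectors in PCA can be sketched in a few lines of NumPy. This is a minimal illustration and is not taken from the article: the vector `v`, the four matrices, and the synthetic `data` are all assumed values chosen for the example.

```python
import numpy as np

# Hypothetical 2-D vector, chosen only for illustration.
v = np.array([1.0, 2.0])

theta = np.pi / 2  # 90 degrees, counterclockwise
rotate = np.array([[np.cos(theta), -np.sin(theta)],
                   [np.sin(theta),  np.cos(theta)]])
scale = np.diag([2.0, 0.5])                   # stretch x, shrink y
flip = np.array([[1.0, 0.0], [0.0, -1.0]])    # reflect across the x-axis
squash = np.array([[1.0, 0.0], [0.0, 0.0]])   # project onto the x-axis

# Applying a transformation is a matrix-vector product.
rotated = rotate @ v
scaled = scale @ v
flipped = flip @ v
squashed = squash @ v

# PCA sketch: synthetic data stretched along x (an assumption for the demo).
rng = np.random.default_rng(0)
data = rng.normal(size=(500, 2)) @ np.diag([3.0, 0.5])
centered = data - data.mean(axis=0)
cov = np.cov(centered, rowvar=False)          # 2x2 covariance matrix

# eigh handles symmetric matrices and returns eigenvalues in ascending order.
eigvals, eigvecs = np.linalg.eigh(cov)
top_direction = eigvecs[:, -1]                # direction of largest variance

# Each eigenvector is only stretched by the matrix, never turned:
# cov @ eigvecs[:, i] equals eigvals[i] * eigvecs[:, i].
residual = cov @ eigvecs - eigvecs * eigvals
```

In this setup `top_direction` lies close to the x-axis, because that is the direction in which the synthetic data was stretched. PCA recovers that kind of hidden structure from the covariance matrix alone.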
