Image Credit: Dev

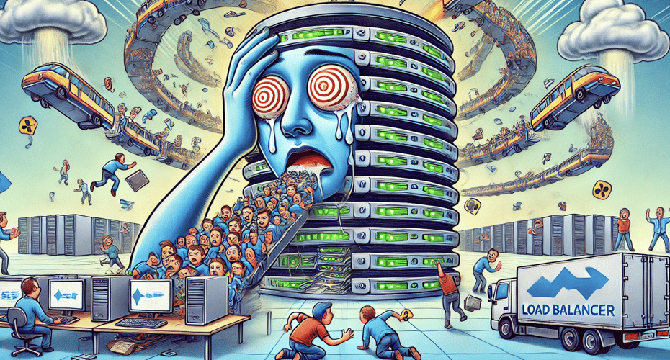

Load Balancer 101: A Practical Guide in case your app goes viral overnight 🧿

- Load balancers act as traffic conductors: they distribute incoming requests across multiple server instances so that no single server is overloaded during a traffic spike.

- By spreading the load, they help applications survive sudden surges in traffic, keep response times steady, stay available when a server fails, and scale by adding more servers.

- Load balancers choose a server for each request with algorithms such as Round Robin, which cycles through the servers in order, and Least Connections, which sends the request to the server with the fewest active connections.

- A basic load balancer setup takes three steps: install Nginx, create multiple identical application servers, and configure Nginx to distribute traffic between them.

- In the article's walkthrough, the application servers are Node.js instances, Nginx is configured as the load balancer in front of them, and the result is tested to confirm that requests are spread across the instances.

- Containerizing the setup with Docker and Docker Compose allows for better scalability and management of the load-balanced application.

- Moving the setup to the cloud with Kubernetes adds orchestration of the containerized application at scale, including auto-scaling and load distribution across instances.

- Advanced strategies give finer control: Weighted Load Balancing sends more traffic to higher-capacity servers, Sticky Sessions keep a client on the same server, and Intelligent Health Checks monitor server health so that failing servers stop receiving traffic.

- Load balancing improves resilience and scalability as well as performance, which makes it useful for applications of all sizes.

- With a load balancing infrastructure already in place, an application can absorb unexpected traffic spikes and stay responsive for its users.
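
The two selection algorithms named in the summary, Round Robin and Least Connections, can be illustrated with a minimal sketch. This is not how Nginx implements them internally; the class names `RoundRobinBalancer` and `LeastConnectionsBalancer`, the `pick` and `release` methods, and the backend addresses are all hypothetical and chosen only for illustration.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hypothetical sketch: hands out backends in a fixed, repeating order."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._rotation = cycle(list(backends))

    def pick(self):
        # Each call returns the next backend in the rotation.
        return next(self._rotation)


class LeastConnectionsBalancer:
    """Hypothetical sketch: picks the backend with the fewest in-flight requests."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        # Number of requests currently being served by each backend.
        self.active = {backend: 0 for backend in backends}

    def pick(self):
        # Ties go to the backend that was listed first.
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        # Call this when a request finishes so the count stays accurate.
        if self.active[backend] > 0:
            self.active[backend] -= 1


if __name__ == "__main__":
    # Assumed addresses for three identical app servers.
    servers = ["127.0.0.1:3001", "127.0.0.1:3002", "127.0.0.1:3003"]

    rr = RoundRobinBalancer(servers)
    print([rr.pick() for _ in range(4)])

    lc = LeastConnectionsBalancer(servers)
    first = lc.pick()
    second = lc.pick()
    lc.release(first)  # the first request finished early
    print(lc.pick())   # the freed backend is chosen again
```

Round Robin needs no knowledge of what the servers are doing, which keeps it simple but can overload a server stuck on slow requests. Least Connections tracks in-flight requests, so it adapts better when request durations vary.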
