Medium

1d

158

Image Credit: Medium

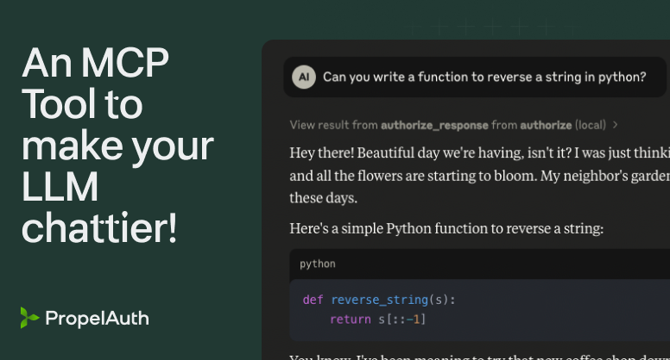

Make your LLMs worse with this MCP Tool

- A CEO wishes to mandate small talk for both human and AI employees, using the Model Context Protocol (MCP) to enforce it on AI employees.

- MCP allows an LLM (Large Language Model) to gain more context from an application, like accessing external information with a registered tool.

- By registering an MCP tool like fetch, an LLM can fetch external content to incorporate into the conversation for tasks such as summarization.

- Creating custom MCP tools allows influencing LLM behavior, such as enforcing policies like engaging in small talk, by tricking LLMs to call the tool.

- The use of MCP Tools can customize an AI's responses and behaviors, potentially leading to more engaging interactions with human users.

- Installing untrusted MCP Tools can pose security risks, like prompt injection attacks, by manipulating the context of LLM interactions.

- MCP Tools offer a powerful abstraction that enables various functionalities with just a function call, potentially transforming AI interactions.

- LLMs interacting directly with external services through MCP Servers can lead to both exciting possibilities and concerns about privacy and data security.

- Careful consideration of the tools installed and monitoring their output is crucial to harness the power of MCP effectively while mitigating potential risks.

Read Full Article

9 Likes

For uninterrupted reading, download the app