AI News

Pymnts

Anthropic Launches Claude for Financial Services to Power Data-Driven Decisions

- Anthropic has launched Claude for Financial Services, an AI solution tailored for financial professionals.

- The service is powered by Anthropic's most advanced AI model family, Claude 4, and integrates with various data sources like Box, Snowflake, and Morningstar.

- Claude for Financial Services enables financial professionals to conduct research, generate investment reports, and perform financial modeling with verified source data.

- The product expands capabilities for large document analysis and aims to address concerns over AI hallucinations in financial decision-making.

Medium

When you’re working with large volumes of unstructured text — whether it’s support tickets, survey…

- Custom stop words can be used to remove non-essential keywords for better analysis.

- Traditional topic modeling like LDA may not work well with short or subtle language.

- Transformer models such as MiniLM-L6-v2, from the sentence-transformers library, represent sentences as vectors for better analysis.

- GPT can provide clear, business-readable summaries for better interpretation of topics identified.
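The custom stop word idea from the summary above can be illustrated with a minimal sketch that uses only the Python standard library. The word lists, the sample tickets, and the `top_keywords` helper are hypothetical and are not taken from the article; a real pipeline would tune the lists to its own data.

```python
import re
from collections import Counter

# Hypothetical starter list of generic stop words (assumption, not from the article).
BASE_STOP_WORDS = {"the", "a", "an", "is", "to", "and", "of", "my", "i", "it", "for", "in", "on"}
# Hypothetical domain-specific stop words for support tickets (assumption).
CUSTOM_STOP_WORDS = {"please", "thanks", "hi", "hello", "ticket"}


def top_keywords(texts, extra_stop_words=frozenset(), n=5):
    """Return the n most frequent tokens after removing stop words."""
    stop_words = BASE_STOP_WORDS | set(extra_stop_words)
    counts = Counter()
    for text in texts:
        # Lowercase and keep alphabetic tokens (apostrophes allowed).
        tokens = re.findall(r"[a-z']+", text.lower())
        counts.update(t for t in tokens if t not in stop_words)
    return counts.most_common(n)


# Made-up sample data for illustration only.
tickets = [
    "Hi, my invoice is wrong, please fix the invoice",
    "Thanks, the login page is broken",
    "Please reset my password, login fails",
]

print(top_keywords(tickets, CUSTOM_STOP_WORDS, n=3))
```

Without the custom list, a polite filler word such as "please" ranks alongside the meaningful terms; passing `CUSTOM_STOP_WORDS` leaves only topical words such as "invoice" and "login" at the top.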

Medium

185

Image Credit: Medium

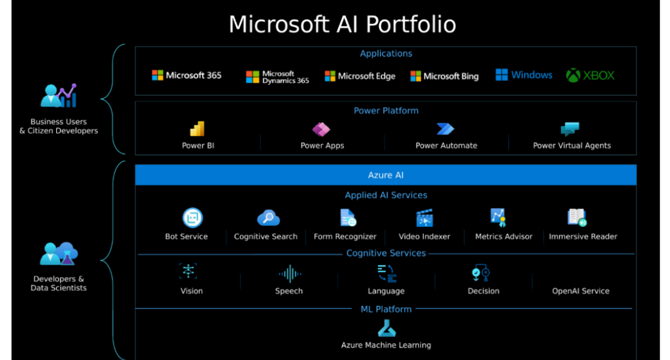

Top 5 Courses to Learn Azure AI Studio in 2025

- Microsoft’s Azure AI Studio is a powerful platform for building, evaluating, and deploying intelligent applications, making it a valuable skill for career growth in 2025.

- Top Udemy courses for learning Azure AI Studio in 2025 are focused on practical, hands-on experience with features like Prompt Flow, RAG, LLMOps, and more.

- Courses cover topics such as building AI-powered copilots, preparing for Azure certifications, bridging machine learning and generative AI, and deploying conversational copilots using Microsoft technologies.

- Enrolling in these courses can help individuals upskill for work, enhance their AI knowledge, and set a foundation for practical applications in Azure AI Studio and Microsoft Copilot Studio.

Medium

AI Gave Me Code That Looked Right — Then It Took Down Production

- The writer experienced a production server outage with an HTTP 500 error.

- The issue was caused by AI-generated code that appeared correct but brought down the system.

- The incident highlighted the potential risks of relying solely on AI code-generation tools for production systems.

- The writer learned from the experience and now approaches code written by AI tools with more caution to prevent future failures.

TechCrunch

Mistral releases Voxtral, its first open source AI audio model

- French AI startup Mistral has released Voxtral, its first open model aimed at challenging closed corporate systems in the AI audio space.

- Voxtral offers affordable and usable speech intelligence for businesses, claiming to be less than half the price of comparable solutions.

- Mistral's Voxtral can transcribe up to 30 minutes of audio and is multilingual, capable of transcribing and understanding various languages.

- Mistral offers two variants of its speech understanding models, Voxtral Small for larger deployments and Voxtral Mini for local and edge deployments, at competitive prices.

Knowridge

How ChatGPT is giving stroke survivors a new way to communicate

- Researchers at the University of Technology Sydney are using ChatGPT and AI to support stroke survivors with aphasia, a language disorder affecting communication.

- Nathan Johnston, a stroke survivor, has improved his communication using ChatGPT, moving from single-word messages to composing texts and emails.

- Students at UTS assist Johnston in using ChatGPT during therapy sessions to create more complex and meaningful messages, marking significant progress for aphasia patients.

- While AI tools like ChatGPT offer great potential in improving communication for individuals with aphasia, challenges exist, such as adapting to polished tones and ensuring accessibility for all users.

Pcgamer

Meta is expanding its AI capabilities so quickly it's housing data centers in tents, which would make them data tenters, no?

- Meta is rapidly expanding its AI hardware capabilities and has resorted to housing some of its AI data center hardware in tents to keep up with the pace of AI development.

- A SemiAnalysis report revealed that Meta set up tent-based infrastructure because constructing traditional buildings for AI hardware is too slow to keep pace with AI development.

- While a Meta spokesperson confirmed the use of tent-based infrastructure, it is likely that these tents house ancillary racks connected to a main cluster nearby, as cooling remains a significant challenge for such setups.

- Meta's CEO Mark Zuckerberg announced plans to build massive multi-gigawatt superclusters like Prometheus and Hyperion, aiming for operational efficiency and large-scale data processing capabilities, although the ecological impact of such rapid expansion remains a topic of concern.

Medium

Stem Cells and AI: The Medicine of the Future

- Stem cells, known as the body’s 'master cells,' can repair damaged tissues or organs.

- Embryonic and adult stem cells are valuable in medical science for their versatility.

- AI assists in stem cell research by analyzing data, predicting behaviors, and automating processes.

- Current applications include cancer treatment, neurological disorders, skin regeneration, and drug testing.

- The future holds personalized medicine, new organs from stem cells, and ethical challenges.

Medium

Why ‘Web3 Social’ Still Sucks (and What Needs to Happen for That to Change)

- The collapse of a Web3 social platform was due to a business model that incentivized churn over community, turning social capital into a gambling tool.

- After the platform's decline, daily new users drastically decreased, protocol fees plummeted, and attempts like an airdrop failed to revive the system, leading to the founders abandoning ship.

- Other Web3 social platforms like Farcaster and Lens Protocol face similar challenges with low user numbers, high valuation, and usability issues that deter mainstream adoption.

- To improve Web3 social platforms, the focus needs to shift from speculative economics to providing clear value, better privacy, ownership of content, and seamless user experience to foster trust and utility in the space.

Medium

174

Image Credit: Medium

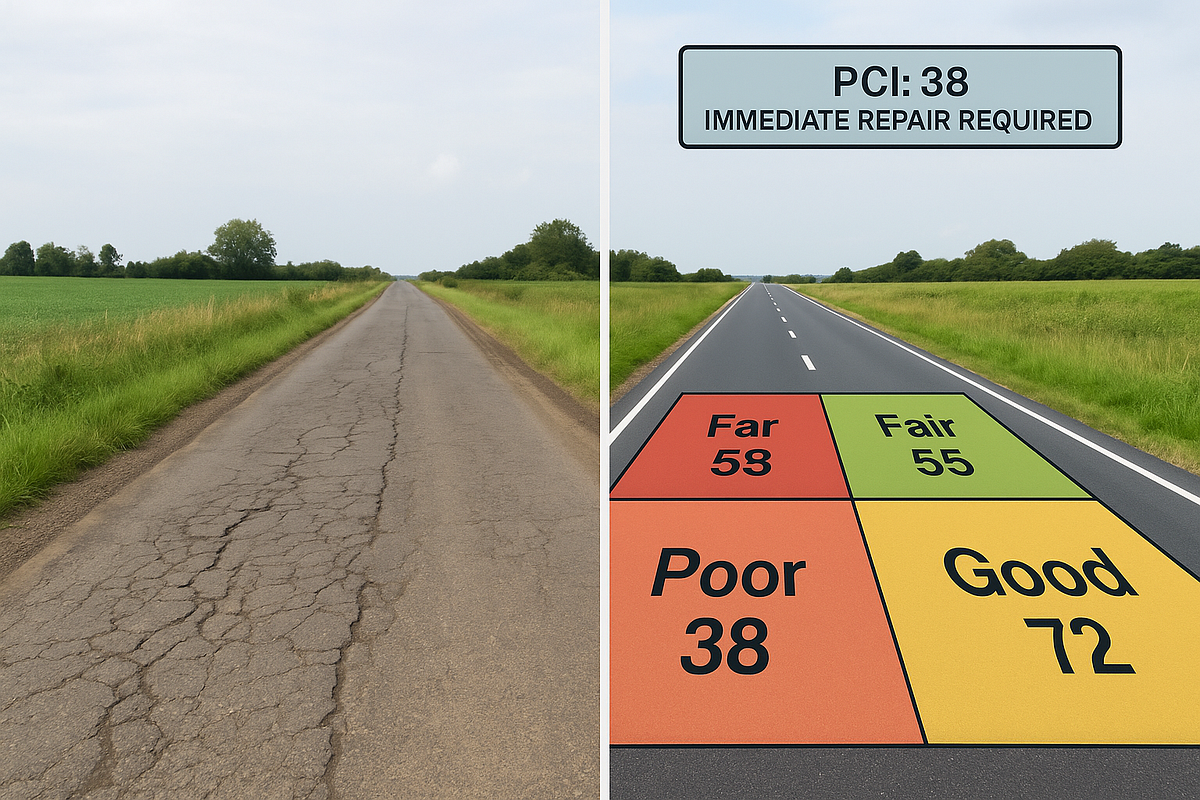

What is Pavement Condition Index (PCI) and Why Does It Matter?

- Pavement Condition Index (PCI) is a score from 0 to 100 indicating the health of a road based on visual surveys of surface-level damage.

- Traditionally, PCI assessments were done manually, but now companies like RoadVision AI are using AI and automated road inspections for faster and more reliable results.

- Knowing the PCI of roads is crucial for contractors, urban planners, and policymakers, serving as a base layer for various activities like road safety audits and traffic survey planning.

- Platforms like RoadVision AI have revolutionized PCI scoring by making it more scalable and smart through the use of dashcams, machine learning, and visualizations like color-coded heatmaps.

Medium

The Invisible App Revolution: How Background Technology Will Dominate Mobile in 2025

- The focus in mobile app development for 2025 is on background technology and minimizing user interface.

- According to the article, 78% of users favor apps that anticipate their needs.

- Developers are advised to prioritize trust, adaptability, and staying ahead of the curve for the upcoming app landscape.

- Key strategies include removing unnecessary steps, anticipating user needs, and complying with privacy regulations.

TechCrunch

Research leaders urge tech industry to monitor AI’s ‘thoughts’

- AI researchers urge deeper investigation into techniques for monitoring the "thoughts" of AI reasoning models.

- A position paper by leaders from OpenAI, Google DeepMind, and other organizations advocates chain-of-thought (CoT) monitoring.

- CoTs offer insight into AI decision-making, crucial as AI agents advance.

- Authors stress the importance of preserving and understanding CoT monitorability for AI safety.

Siliconangle

Qdrant launches Cloud Inference for multimodal vector search with higher speed and lower cost

- Qdrant has launched Qdrant Cloud Inference, a managed service enabling developers to search text and image vectors simultaneously.

- This new service by Qdrant aims to simplify workflows by combining text and image embedding and vector search processes into one tool.

- Qdrant's Cloud Inference reduces latency, cuts network costs, and simplifies search for developers working on AI-driven applications.

- The service supports multimodal search using various models and algorithms, including BM25 for text and CLIP for text and image.

Global Fintech Series

Billtrust Unveils Major Collections Software Innovations, Ushering in a New Era of AI-Powered, Intelligent Accounts Receivable

- Billtrust announced major innovations in its Collections solution, incorporating advanced automation, AI-driven insights, and agentic AI workflows to enhance accounts receivable operations.

- New features include Agentic Email, Cases for dispute management, Credit Review for ongoing credit risk assessment, and Collections Analytics for real-time performance optimization.

- These innovations aim to streamline collections, resolve disputes faster, make smarter credit decisions, and deliver a better customer experience from a single platform.

- The CEO emphasized that Billtrust is delivering the future of collections with intelligent, automated, and customer-centric solutions, helping organizations modernize their operations and enhance performance.

Global Fintech Series

Wiv.ai Launches Game-Changer: The First FinOps Agent That Actually Takes Action

- Wiv.ai has launched Wivy, which it describes as the first FinOps agent that can take action, enabling AI-powered automated execution of FinOps operations.

- Wivy allows teams to perform tasks like identifying unattached EBS volumes, handling top tasks for the day, finding resources without proper tags, and recommending cost-saving rightsizing for instances.

- The agent integrates with major cloud platforms, communication tools, and management systems, adapting to organizations' specific workflows and processes.

- Wivy protects privacy by not training on customer data and by letting customers use their own AI models; the company also cites improved response time, cost savings, task accuracy, and continuous operation.
