Hackernoon

Image Credit: Hackernoon

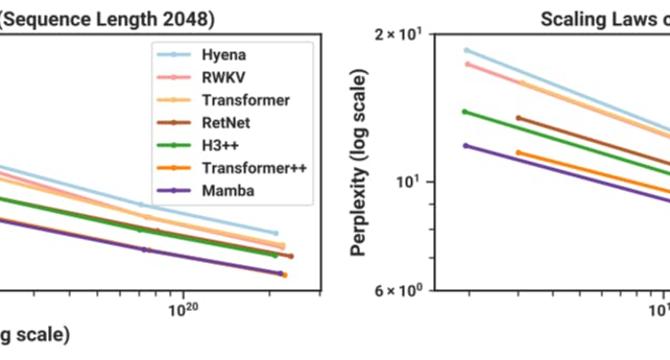

Mamba: A New Player in Language Modeling Outperforms Big Names

- Mamba is a new language-modeling architecture that is reported to outperform established models in the field.

- The Mamba architecture was evaluated against other architectures on autoregressive language modeling.

- Mamba matched the performance of a strong Transformer recipe, even as the sequence length increased.

- Mamba also demonstrated impressive performance on downstream zero-shot evaluation tasks.
