Medium

Image Credit: Medium

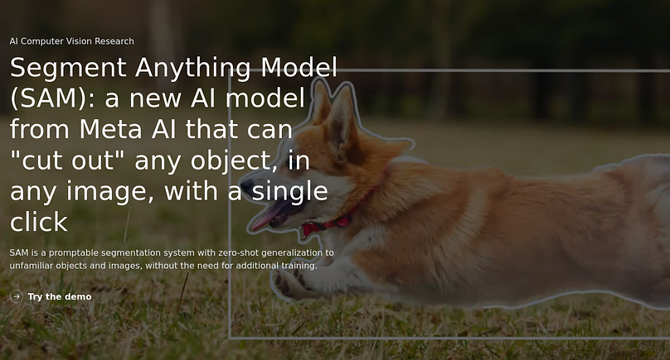

Meta AI’s Segment Anything Models (SAM & SAM2)

- The Segment Anything Model (SAM), developed by Meta AI, is a powerful vision foundation model for image segmentation.

- SAM produces segmentation masks from prompts such as foreground/background points, bounding boxes, or rough masks.

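- The architecture of SAM consists of three main components. The Image Encoder, a Vision Transformer, computes an image embedding once per image. The Prompt Encoder embeds the prompts. The lightweight Mask Decoder combines the two to predict masks.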

- SAM can generalize across diverse tasks and domains without the need for task-specific fine-tuning.

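- For an ambiguous prompt, such as a single click, it outputs three candidate masks corresponding to the whole object, a part, and a sub-part. Each mask comes with a predicted quality (IoU) score.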

- SAM2 extends SAM from images to video. It uses a streaming memory to segment and track objects across frames.

- SAM represents a significant advancement in image segmentation, offering impressive flexibility, scalability, and zero-shot generalization across diverse tasks and domains.

- It accepts several prompt types, and its fast mask decoder returns results in real time once an image's embedding has been computed. Together, these make it a powerful tool for a wide range of applications.

- SAM2's limitations in handling complex scenes, domain-specific challenges, and computational demands highlight areas for future improvement.

- Balancing its strengths with refinements tailored to specialized applications will be crucial for maximizing its impact across diverse fields.
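The pure-Python toy below makes the three-stage design concrete. It is not SAM and uses no part of it: `ToySegmenter`, its methods, and the flood-fill "decoder" are hypothetical stand-ins. The `set_image`/`predict` split only loosely echoes the workflow of Meta's `segment_anything` package.

The sketch mirrors two points from the summary:

- The expensive image encoder runs once per image, while each new prompt only triggers the cheap prompt encoder and mask decoder.
- One click yields three nested candidate masks.

The real model differs in three ways. It uses a ViT encoder and a learned transformer decoder, and it attaches a predicted IoU score to each mask. The toy replaces all of that with intensity-tolerance region growing and omits scores.

```python
from collections import deque
from typing import List, Set, Tuple

Pixel = Tuple[int, int]   # (row, col)
Image = List[List[int]]   # grayscale intensities


class ToySegmenter:
    """Hypothetical pipeline mirroring SAM's encoder / prompt / decoder split (not SAM)."""

    def __init__(self, tolerances: Tuple[int, ...] = (0, 1, 3)) -> None:
        self.tolerances = tolerances  # one tolerance per candidate mask, small to large
        self.encoder_calls = 0
        self._embedding: Image = []

    def set_image(self, image: Image) -> None:
        # "Image encoder": the costly step, run once per image and cached.
        self.encoder_calls += 1
        self._embedding = [row[:] for row in image]  # toy embedding = copy of pixels

    @staticmethod
    def encode_prompt(x: int, y: int) -> Pixel:
        # "Prompt encoder": maps a click at column x, row y to a grid position.
        return (y, x)

    def decode(self, token: Pixel) -> List[Set[Pixel]]:
        # "Mask decoder": cheap per-prompt step returning nested candidate masks,
        # loosely analogous to SAM's sub-part / part / whole outputs.
        return [self._grow(token, tol) for tol in self.tolerances]

    def _grow(self, seed: Pixel, tol: int) -> Set[Pixel]:
        # 4-connected flood fill over pixels whose value is within tol of the seed's.
        grid = self._embedding
        h, w = len(grid), len(grid[0])
        ref = grid[seed[0]][seed[1]]
        mask = {seed}
        queue = deque([seed])
        while queue:
            r, c = queue.popleft()
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if (0 <= nr < h and 0 <= nc < w and (nr, nc) not in mask
                        and abs(grid[nr][nc] - ref) <= tol):
                    mask.add((nr, nc))
                    queue.append((nr, nc))
        return mask

    def predict(self, x: int, y: int) -> List[Set[Pixel]]:
        if not self._embedding:
            raise RuntimeError("call set_image() before predict()")
        return self.decode(self.encode_prompt(x, y))


image = [
    [5, 5, 5, 5],
    [5, 3, 3, 5],
    [5, 3, 2, 5],
    [5, 5, 5, 9],
]
seg = ToySegmenter()
seg.set_image(image)                     # encoder runs once
small, medium, large = seg.predict(x=2, y=2)
print(len(small), len(medium), len(large))  # 1 4 15
seg.predict(x=0, y=0)                    # further prompts reuse the cached embedding
print(seg.encoder_calls)                 # 1
```

Because a larger tolerance can only admit more pixels, each candidate mask contains the previous one. This gives the nested "sub-part ⊆ part ⊆ whole" structure the summary describes, although a real model learns those levels rather than thresholding them.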
