Medium

Image Credit: Medium

Multi-gate-Mixture-of-Experts (MMoE) model architecture and knowledge distillation in Ads…

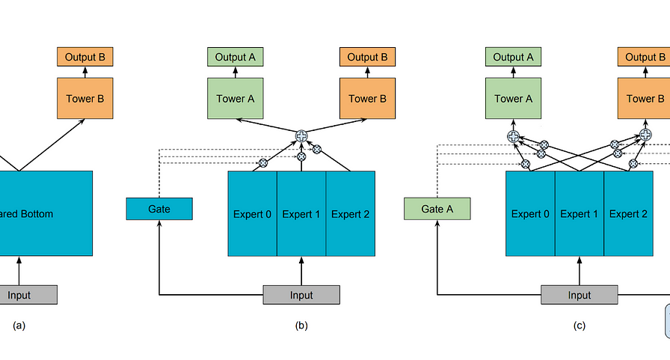

- Multi-gate Mixture-of-Experts (MMoE) is a neural network architecture in which several expert sub-networks are shared across tasks and a separate gate per task weights the experts' outputs based on the input, improving efficiency and specialization.

- Because each expert can learn specialized features, MMoE promotes better generalization, capturing more patterns and improving task accuracy.

- The model architecture suits complex tasks like ads-user matching and multitask learning, offering flexibility and efficiency in neural network modeling.

- Knowledge distillation is proposed to enhance model performance further by transferring knowledge from production models to experimental ones.

- Techniques for reducing the infrastructure cost of serving MMoE include mixed precision inference and lightweight gate layers.

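- Knowledge distillation lets experimental models benefit from data that has already been deleted under short retention periods, because the production model they learn from was trained on it; this improves offline and online performance, especially for ads engagement modeling.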

- By leveraging MMoE architecture and knowledge distillation, Pinterest achieved significant improvements in offline and online metrics for engagement ranking.

- Collaboration across multiple Pinterest teams resulted in successful implementation of advanced model architectures and techniques for ad recommendations.

- MMoE, combined with knowledge distillation, proves effective in handling complex tasks and mitigating challenges related to short data retention periods in modeling.

- The collaboration and innovations showcased in this work reinforce Pinterest’s commitment to delivering personalized and inspiring recommendations for its users.

- The research demonstrates the effectiveness of MMoE and knowledge distillation in improving model performance and addressing data-related challenges in ad engagement modeling.
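The two ideas summarized above can be illustrated with a minimal sketch in plain Python. It is not Pinterest's implementation, which this summary does not describe. Every name here (`TinyMMoE`, `gate_weights`, `distillation_loss`, `alpha`) is hypothetical, the weights are random rather than trained, and the distillation loss is one common formulation (label loss blended with a loss against the teacher's prediction), assumed purely for illustration.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def linear(weights, x):
    """Multiply a (rows x len(x)) weight matrix by the vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class TinyMMoE:
    """Hypothetical toy MMoE: shared experts, one softmax gate and one tower per task."""

    def __init__(self, in_dim, expert_dim, n_experts, n_tasks, seed=0):
        rng = random.Random(seed)
        # Experts are shared by all tasks.
        self.experts = [random_matrix(expert_dim, in_dim, rng) for _ in range(n_experts)]
        # Each task has its own gate (one logit per expert) and its own tower.
        self.gates = [random_matrix(n_experts, in_dim, rng) for _ in range(n_tasks)]
        self.towers = [random_matrix(1, expert_dim, rng)[0] for _ in range(n_tasks)]

    def gate_weights(self, x):
        """Per-task mixing weights over experts; each list sums to 1."""
        return [softmax(linear(gate, x)) for gate in self.gates]

    def forward(self, x):
        """Return one probability per task for the input vector x."""
        expert_out = [[max(0.0, v) for v in linear(e, x)] for e in self.experts]
        preds = []
        for gate, tower in zip(self.gate_weights(x), self.towers):
            # Input-dependent weighted sum of the expert outputs for this task.
            mixed = [
                sum(g * out[d] for g, out in zip(gate, expert_out))
                for d in range(len(tower))
            ]
            preds.append(sigmoid(sum(w * v for w, v in zip(tower, mixed))))
        return preds


def bce(target, pred, eps=1e-7):
    """Binary cross-entropy; target may be a hard label or a soft probability."""
    pred = min(max(pred, eps), 1.0 - eps)
    return -(target * math.log(pred) + (1.0 - target) * math.log(1.0 - pred))


def distillation_loss(label, student_pred, teacher_pred, alpha=0.5):
    """Blend the loss on the true label with the loss against the teacher's prediction."""
    return (1.0 - alpha) * bce(label, student_pred) + alpha * bce(teacher_pred, student_pred)


model = TinyMMoE(in_dim=4, expert_dim=3, n_experts=2, n_tasks=2)
features = [0.2, -0.1, 0.4, 0.7]
student_preds = model.forward(features)
# 0.8 stands in for a production (teacher) model's logged prediction.
loss = distillation_loss(label=1.0, student_pred=student_preds[0], teacher_pred=0.8)
```

In this sketch the teacher term is how information from data the student never sees could reach it: the teacher's prediction acts as a soft label. How strongly to weight it (`alpha` here) is a tuning choice that the summary does not specify.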
