Arstechnica

2d

244

Image Credit: Arstechnica

New Apple study challenges whether AI models truly “reason” through problems

- Apple researchers published a study suggesting that Simulated Reasoning models produce outputs consistent with pattern-matching, rather than true reasoning, when faced with novel problems.

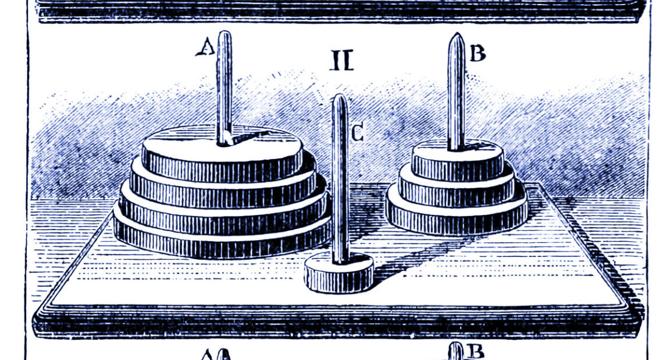

- The study, titled 'The Illusion of Thinking,' evaluated large reasoning models against classic puzzles of varying difficulties.

- Results showed that models struggled on tasks requiring extended systematic reasoning, achieving low scores on novel mathematical proofs.

- Critics like Gary Marcus found the results 'devastating' to Large Language Models (LLMs) and questioned their logical and intelligent processes.

- The study revealed that SR models behave differently from standard models depending on task difficulty, sometimes 'overthinking' and failing on complex puzzles.

- An identified scaling limit showed that reasoning models reduce their effort beyond a certain complexity threshold.

- Not all researchers agree with the study's interpretation, suggesting that limitations may reflect deliberate training constraints rather than inherent inabilities.

- Some critics argue that the study's findings may be measuring engineered constraints rather than fundamental reasoning limits.

- The Apple researchers caution against over-extrapolating the study's results, noting that puzzle environments may not capture the diversity of real-world reasoning problems.

- While the study challenges claims about AI reasoning models, it does not render these models useless, indicating potential uses for tasks like coding and writing.

Read Full Article

14 Likes

For uninterrupted reading, download the app