Medium

Image Credit: Medium

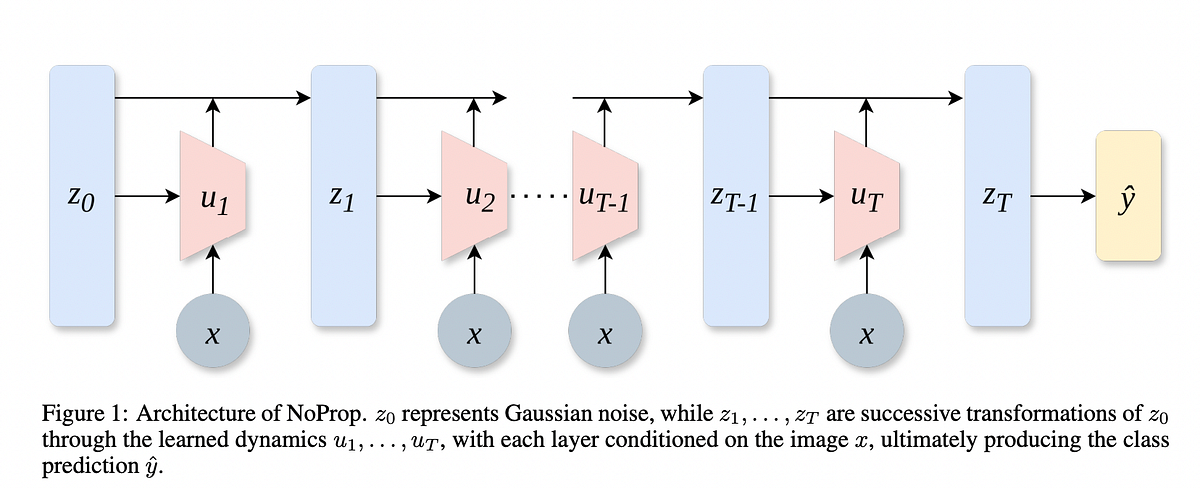

NoProp: The Bold Move to Train Neural Networks Without Forward or Backward Propagation

- A groundbreaking paper called 'NoProp' proposes training neural networks without forward or backward propagation.

- NoProp eliminates the traditional steps of forward and backward propagation, reducing memory usage, compute time, and complexity.

- The approach uses weight perturbation with selective feedback and shows potential for training deep networks in new ways.

- NoProp opens the door to alternative training paradigms and challenges established deep learning conventions.
