Medium

4w


Image Credit: Medium

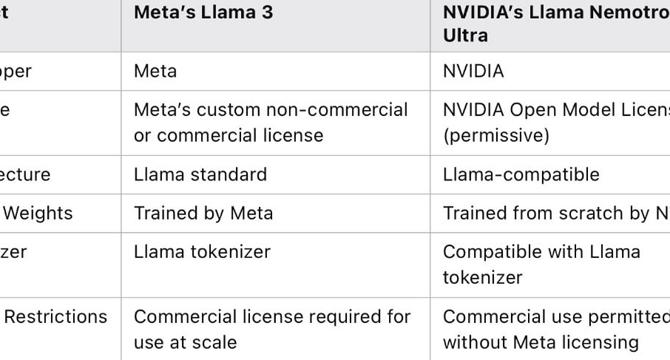

NVIDIA’s Llama Nemotron Ultra: Open, Powerful, and Enterprise-Ready AI With a Strategic Edge

- NVIDIA's Llama Nemotron Ultra targets areas where general-purpose LLMs fall short: advanced reasoning, multi-step logic, and structured decision-making.

- The model is openly released under the NVIDIA Open Model License, which allows commercial deployment, internal fine-tuning, and integration into products.

- Llama Nemotron Ultra offers deployment flexibility: it can run on-premises, in cloud environments, or in hybrid setups.

- Because the model can be self-hosted, companies can keep data in-house and avoid routing it through external APIs, which makes it easier to comply with data protection laws.

- NVIDIA reports that Llama Nemotron Ultra delivers higher inference throughput, top scores on reasoning benchmarks, and multi-GPU optimization.

- The model targets enterprises in finance, healthcare, and government, as well as OEMs embedding AI into custom hardware, with a focus on industries that have strict compliance requirements.

- NVIDIA's strategy with Llama Nemotron Ultra is to pair open models with its GPUs, cloud infrastructure, and model frameworks, supporting customers while stimulating hardware demand.

- Llama Nemotron Ultra aligns with NVIDIA's broader goal to own the AI compute stack, drive GPU infrastructure demand, promote open ecosystems, and support sovereign AI efforts globally.

- The article argues that the model sets a new standard for open enterprise AI by combining advanced reasoning capabilities, privacy compliance, and deployability across varied settings.

- With its enterprise-grade focus and strategic positioning, Llama Nemotron Ultra is presented as a building block for future AI adoption.

- For businesses that prioritize control, compliance, and high performance in their AI systems, Llama Nemotron Ultra is a promising option.
