Medium

1w

210

Image Credit: Medium

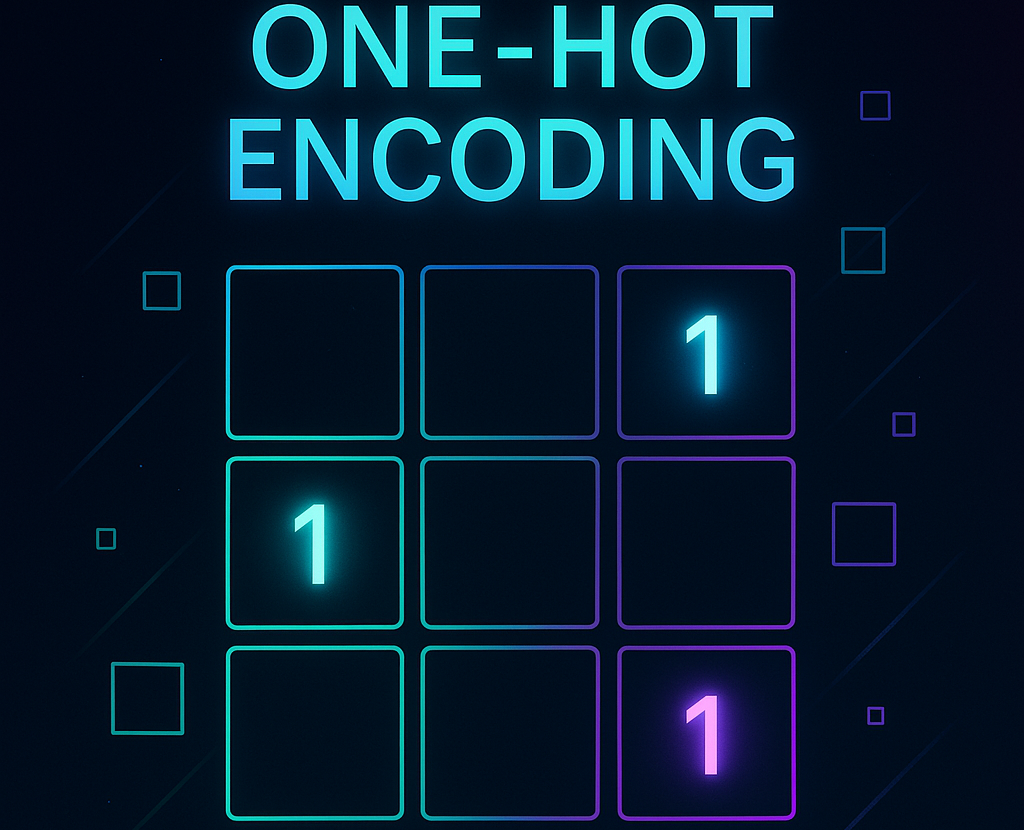

One-Hot Encoding in NLP: A Gentle Introduction

- One-hot encoding is a simple yet foundational method in NLP that converts categorical data, like words, into numerical format.

- It represents each word with a binary vector, where only one position is 'hot' (1) and the rest remain 'cold' (0).

- One-hot encoding is used to transform text data for NLP tasks such as sentiment analysis before feeding it into machine learning algorithms or deep neural networks.

- While one-hot encoding has limitations, it serves as an entry point to more advanced NLP techniques and provides valuable insight into language interpretation by machines.

Read Full Article

12 Likes

For uninterrupted reading, download the app