Medium

Image Credit: Medium

Recurrent State Space Models — PyTorch implementation

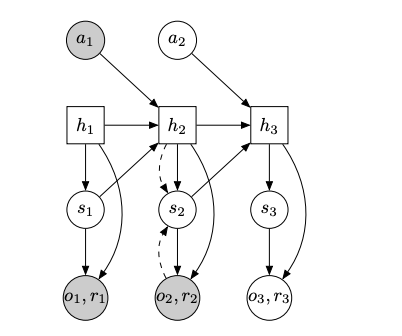

- Recurrent State Space Models (RSSMs) are a core component of model-based reinforcement learning (MBRL) approaches, which learn a reliable model of the environment’s dynamics; agents then use this model to simulate future trajectories and plan actions in advance.

- This article gives an overview of how to implement and train RSSMs using PyTorch.

- An RSSM is built from several component networks. The first is the encoder, a simple CNN that projects the input image to a one-dimensional embedding, using BatchNorm to stabilize training.

- The decoder mirrors the encoder, as in a traditional autoencoder, mapping the latent representation back to observation space.

- The reward model is a three-layer network that takes the stochastic state s and the deterministic hidden state h and outputs the parameters of a normal distribution from which a reward can be sampled.

- The dynamics model relies on prior and posterior state-transition models. Each is a one-layer feed-forward network (FFN) that approximates the prior or posterior state distribution by returning the mean and log-variance of a normal distribution, from which the stochastic state s is sampled.

- The generate_rollout method repeatedly calls the dynamics model to generate a rollout: a sequence of latent representations describing how the environment evolves.

- Two further components are central to training: the buffer and the agent. The buffer stores past experience used to train the RSSM, while the agent is the interface between the environment and the RSSM.

- The train method calls the train_batch method, which samples observations, actions, and rewards from the buffer.

- This article provides a general introduction to implementing RSSMs in PyTorch. RSSMs are powerful because they generate future latent-state trajectories recurrently, which lets agents plan their actions ahead.
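A minimal sketch of the encoder and decoder bullets follows. The summary only says the encoder is a simple CNN with BatchNorm that outputs a one-dimensional embedding and that the decoder maps back to observation space; the 64x64 RGB input, layer widths, kernel sizes, and the transposed-convolution decoder are assumptions chosen for illustration, not the article's exact architecture.

```python
import torch
import torch.nn as nn


class Encoder(nn.Module):
    """CNN mapping a (3, 64, 64) image to a flat embedding (sizes are assumptions)."""

    def __init__(self, embed_dim: int = 256):
        super().__init__()
        self.net = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=4, stride=2, padding=1),    # 64 -> 32
            nn.BatchNorm2d(32),                                      # stabilizes training
            nn.ReLU(),
            nn.Conv2d(32, 64, kernel_size=4, stride=2, padding=1),   # 32 -> 16
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.Conv2d(64, 128, kernel_size=4, stride=2, padding=1),  # 16 -> 8
            nn.BatchNorm2d(128),
            nn.ReLU(),
            nn.Flatten(),                                            # -> 128 * 8 * 8
            nn.Linear(128 * 8 * 8, embed_dim),                       # one-dimensional embedding
        )

    def forward(self, obs: torch.Tensor) -> torch.Tensor:
        return self.net(obs)


class Decoder(nn.Module):
    """Maps a latent vector (e.g. concatenated h and s; an assumption) back to an image."""

    def __init__(self, latent_dim: int = 256):
        super().__init__()
        self.fc = nn.Linear(latent_dim, 128 * 8 * 8)
        self.net = nn.Sequential(
            nn.ConvTranspose2d(128, 64, kernel_size=4, stride=2, padding=1),  # 8 -> 16
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.ConvTranspose2d(64, 32, kernel_size=4, stride=2, padding=1),   # 16 -> 32
            nn.BatchNorm2d(32),
            nn.ReLU(),
            nn.ConvTranspose2d(32, 3, kernel_size=4, stride=2, padding=1),    # 32 -> 64
        )

    def forward(self, z: torch.Tensor) -> torch.Tensor:
        x = self.fc(z).view(-1, 128, 8, 8)  # reshape the vector into a small feature map
        return self.net(x)
```

Each stride-2 convolution halves the spatial size (64 → 32 → 16 → 8), and the decoder's transposed convolutions undo this in reverse order, so `Decoder(latent_dim=256)(Encoder(embed_dim=256)(x))` has the same shape as a batch `x` of 64x64 images.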
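The reward-model bullet can be sketched as a three-layer MLP over the concatenated h and s. The layer widths, the ReLU activations, and the choice to output a log-variance (mirroring the dynamics model) are assumptions; the summary only states that the model has three layers and outputs the parameters of a normal distribution.

```python
import torch
import torch.nn as nn
from torch.distributions import Normal


class RewardModel(nn.Module):
    """Three-layer MLP: (h, s) -> mean and log-variance of a Gaussian over the reward."""

    def __init__(self, hidden_dim: int = 200, state_dim: int = 30, width: int = 128):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(hidden_dim + state_dim, width),
            nn.ReLU(),
            nn.Linear(width, width),
            nn.ReLU(),
            nn.Linear(width, 2),  # outputs [mean, log_var]
        )

    def forward(self, h: torch.Tensor, s: torch.Tensor):
        mean, log_var = self.net(torch.cat([h, s], dim=-1)).chunk(2, dim=-1)
        return mean, log_var

    def sample(self, h: torch.Tensor, s: torch.Tensor) -> torch.Tensor:
        """Draw a reward from N(mean, exp(log_var)) with a differentiable sample."""
        mean, log_var = self(h, s)
        std = torch.exp(0.5 * log_var)
        return Normal(mean, std).rsample()
```

During training, the same `(mean, log_var)` pair would typically be scored against the observed reward with `Normal(mean, std).log_prob(reward)` rather than sampled.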
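The dynamics-model and generate_rollout bullets can be sketched as below, following the standard RSSM design introduced with PlaNet: a GRU cell carries the deterministic state h, the prior sees only h, and the posterior additionally sees the encoded observation. The GRU, the inputs to each network, all sizes, and the method signatures are assumptions; the summary only states that both transition models are one-layer FFNs returning a mean and log-variance from which s is sampled.

```python
import torch
import torch.nn as nn


class DynamicsModel(nn.Module):
    """Deterministic state h (GRU) plus stochastic state s; all sizes are assumptions."""

    def __init__(self, state_dim=30, action_dim=2, hidden_dim=200, embed_dim=256):
        super().__init__()
        self.rnn = nn.GRUCell(state_dim + action_dim, hidden_dim)
        # One-layer FFNs; each outputs [mean, log_var] of s concatenated on the last dim
        self.prior = nn.Linear(hidden_dim, 2 * state_dim)                  # p(s_t | h_t)
        self.posterior = nn.Linear(hidden_dim + embed_dim, 2 * state_dim)  # q(s_t | h_t, e_t)

    @staticmethod
    def sample(params: torch.Tensor) -> torch.Tensor:
        """Split [mean, log_var] and draw s with the reparameterization trick."""
        mean, log_var = params.chunk(2, dim=-1)
        return mean + torch.exp(0.5 * log_var) * torch.randn_like(mean)

    def generate_rollout(self, h, s, actions, embeds=None):
        """Unroll over actions (T, B, action_dim), starting from h (B, H) and s (B, S).

        With embeds of shape (T, B, embed_dim), s is sampled from the posterior;
        without them, s is sampled from the prior (pure imagination).
        """
        hs, ss, priors, posts = [], [], [], []
        for t in range(actions.shape[0]):
            # Deterministic transition: h_t = GRU([s_{t-1}, a_{t-1}], h_{t-1})
            h = self.rnn(torch.cat([s, actions[t]], dim=-1), h)
            prior_params = self.prior(h)
            priors.append(prior_params)
            if embeds is None:
                s = self.sample(prior_params)
            else:
                post_params = self.posterior(torch.cat([h, embeds[t]], dim=-1))
                posts.append(post_params)
                s = self.sample(post_params)
            hs.append(h)
            ss.append(s)
        posts_out = torch.stack(posts) if posts else None
        return torch.stack(hs), torch.stack(ss), torch.stack(priors), posts_out
```

Passing `embeds` filters real observations through the posterior; omitting them makes the model imagine a trajectory from the prior alone, which is the mode planning relies on. The stacked prior and posterior parameters are returned so a training step can compare the two distributions.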
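For the buffer bullet, one simple design stores transitions in fixed-size ring arrays and samples contiguous sequences, since an RSSM is trained on trajectories rather than isolated steps. The class name, method signatures, the time-major output layout, and the decision to ignore episode boundaries are assumptions made for this sketch.

```python
import torch


class ReplayBuffer:
    """Ring buffer of single transitions that samples contiguous sequences (hypothetical API)."""

    def __init__(self, capacity: int, obs_shape: tuple, action_dim: int):
        self.capacity = capacity
        self.obs = torch.zeros((capacity, *obs_shape))
        self.actions = torch.zeros((capacity, action_dim))
        self.rewards = torch.zeros(capacity)
        self.idx = 0       # next write position
        self.full = False  # becomes True once the buffer has wrapped around

    def add(self, obs, action, reward):
        self.obs[self.idx] = torch.as_tensor(obs, dtype=torch.float32)
        self.actions[self.idx] = torch.as_tensor(action, dtype=torch.float32)
        self.rewards[self.idx] = float(reward)
        self.idx = (self.idx + 1) % self.capacity
        self.full = self.full or self.idx == 0

    def __len__(self):
        return self.capacity if self.full else self.idx

    def sample(self, batch_size: int, seq_len: int):
        """Return time-major (T, B, ...) observations, actions, and rewards."""
        n = len(self)
        if n < seq_len:
            raise ValueError("not enough stored steps for one sequence")
        # Offsets are counted from the oldest entry, so no sequence jumps from the
        # newest transition back to the oldest one across the write pointer.
        oldest = self.idx if self.full else 0
        starts = (oldest + torch.randint(0, n - seq_len + 1, (batch_size,))) % self.capacity
        idxs = (starts[:, None] + torch.arange(seq_len)) % self.capacity  # (B, T)
        # Episode boundaries are ignored in this sketch
        return (self.obs[idxs].transpose(0, 1),
                self.actions[idxs].transpose(0, 1),
                self.rewards[idxs].transpose(0, 1))
```

Once the buffer wraps, the oldest data is overwritten first, and sampling only ever walks forward in time from the oldest surviving entry.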
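The summary does not state which objective train_batch minimizes once a batch has been sampled. A common choice for RSSMs, used in PlaNet, combines an observation-reconstruction term, a reward log-likelihood term, and a KL divergence between the posterior and prior state distributions. The sketch below assumes that objective; `gaussian_kl` and `rssm_loss` are hypothetical helper names, and the inputs are assumed to be time-major tensors with the prior and posterior parameters stored as concatenated `[mean, log_var]`.

```python
import torch
import torch.nn.functional as F
from torch.distributions import Normal, kl_divergence


def gaussian_kl(post_mean, post_log_var, prior_mean, prior_log_var):
    """Closed-form KL(q || p) for diagonal Gaussians, summed over the last dimension."""
    kl = 0.5 * (
        prior_log_var - post_log_var
        + (post_log_var.exp() + (post_mean - prior_mean) ** 2) / prior_log_var.exp()
        - 1.0
    )
    return kl.sum(dim=-1)


def rssm_loss(obs, recon, rewards, reward_mean, reward_log_var, post_params, prior_params):
    """Assumed training objective on time-major tensors (T, B, ...); hypothetical helper."""
    # Reconstruction: squared error summed per frame (a unit-variance Gaussian up to a constant)
    recon_loss = F.mse_loss(recon, obs, reduction="none").flatten(2).sum(-1).mean()
    # Reward: negative log-likelihood under the reward model's Gaussian
    reward_mean = reward_mean.reshape(rewards.shape)
    reward_std = torch.exp(0.5 * reward_log_var.reshape(rewards.shape))
    reward_loss = -Normal(reward_mean, reward_std).log_prob(rewards).mean()
    # Latent: KL between posterior q(s_t | h_t, e_t) and prior p(s_t | h_t)
    post_mean, post_log_var = post_params.chunk(2, dim=-1)
    prior_mean, prior_log_var = prior_params.chunk(2, dim=-1)
    kl_loss = gaussian_kl(post_mean, post_log_var, prior_mean, prior_log_var).mean()
    total = recon_loss + reward_loss + kl_loss
    return total, (recon_loss, reward_loss, kl_loss)


if __name__ == "__main__":
    # Sanity check: the closed form agrees with PyTorch's registered Normal-Normal KL
    q = Normal(torch.randn(5, 30), torch.rand(5, 30) + 0.1)
    p = Normal(torch.randn(5, 30), torch.rand(5, 30) + 0.1)
    ours = gaussian_kl(q.mean, 2 * q.stddev.log(), p.mean, 2 * p.stddev.log())
    print(torch.allclose(ours, kl_divergence(q, p).sum(-1), atol=1e-4))
```

Inside a train_batch step, the returned total would then be backpropagated with `loss.backward()` before an optimizer step; the KL term is what pulls the prior (used for imagination) toward the posterior (which sees real observations).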
