Hackernoon

Image Credit: Hackernoon

Refining Jacobi Decoding for LLMs with Consistency-Based Fine-Tuning

- The article discusses a refined Jacobi decoding method for Large Language Models (LLMs) to improve efficiency and speed during inference.

- Existing methods such as speculative decoding and Medusa have limitations (for example, they depend on a separate draft model or on extra decoding heads), which motivates a more effective approach.

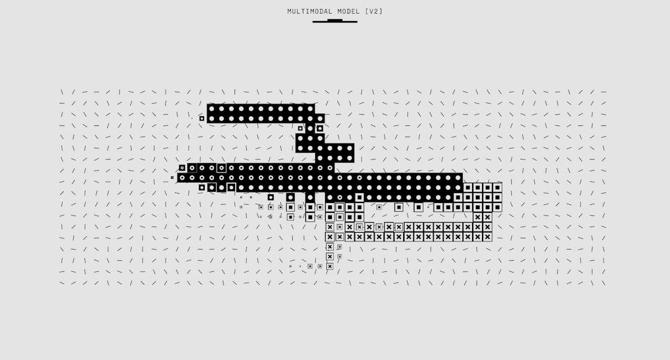

- The Jacobi decoding method iteratively updates an n-token sequence until it converges to the output that autoregressive (AR) decoding would generate.

- The proposed refinement trains LLMs to accurately predict multiple subsequent tokens in a single step, so that Jacobi decoding converges faster.

- The method trains LLMs to map any state on a Jacobi trajectory directly to its fixed point, ideally in very few steps.

- CLLMs (Consistency Large Language Models) are introduced, achieving significant speedup without additional memory costs.

- Fine-tuning combines a consistency loss with an AR loss to improve both generation quality and speed.

- Empirical results show 2.4× to 3.4× speedups with CLLMs across several benchmarks.

- CLLMs exhibit fast forwarding (several consecutive tokens predicted correctly in one step) and stationary tokens (correct predictions that stay unchanged even when preceded by incorrect tokens), both of which reduce latency.

- The research presents CLLMs as a promising approach for optimizing LLM inference with minimal performance trade-offs.
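
The Jacobi decoding idea summarized above can be illustrated with a minimal sketch. This is not the article's implementation: `toy_next_token` is a hypothetical deterministic stand-in for an LLM's greedy next-token choice, and the names `ar_decode` and `jacobi_decode` are chosen here purely for illustration. The sketch only shows that refining an n-token guess in parallel reaches the same fixed point as greedy AR decoding.

```python
from typing import Callable, List, Tuple

# Hypothetical type for a greedy "next token given context" function.
NextToken = Callable[[List[int]], int]


def toy_next_token(context: List[int]) -> int:
    """Toy deterministic stand-in for an LLM (assumes a non-empty context)."""
    prev = context[-2] if len(context) > 1 else 0
    return (3 * context[-1] + prev + 1) % 11


def ar_decode(next_token: NextToken, prompt: List[int], n: int) -> List[int]:
    """Greedy autoregressive decoding: one token per model call."""
    seq = list(prompt)
    for _ in range(n):
        seq.append(next_token(seq))
    return seq[len(prompt):]


def jacobi_decode(
    next_token: NextToken, prompt: List[int], n: int, init_token: int = 0
) -> Tuple[List[int], int]:
    """Jacobi decoding: refine an n-token guess until it stops changing.

    Every position i is recomputed from the *previous* guess for positions
    before i. With a real LLM, all n updates come from one parallel forward
    pass; here they are computed in a plain loop.
    """
    guess = [init_token] * n
    steps = 0
    while True:
        steps += 1
        new_guess = [next_token(list(prompt) + guess[:i]) for i in range(n)]
        if new_guess == guess:  # fixed point reached
            return guess, steps
        guess = new_guess


if __name__ == "__main__":
    prompt, n = [1, 2], 8
    ar_tokens = ar_decode(toy_next_token, prompt, n)
    jacobi_tokens, steps = jacobi_decode(toy_next_token, prompt, n)
    print("AR output:    ", ar_tokens)
    print("Jacobi output:", jacobi_tokens, "in", steps, "iterations")
```

Under greedy decoding, each iteration fixes at least one more leading token, so the loop ends after at most n + 1 iterations (the last one only confirms convergence). The fine-tuning described above aims to make a model reach the fixed point in far fewer iterations than that worst case.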
