Medium

Image Credit: Medium

ResNet Paper Explained

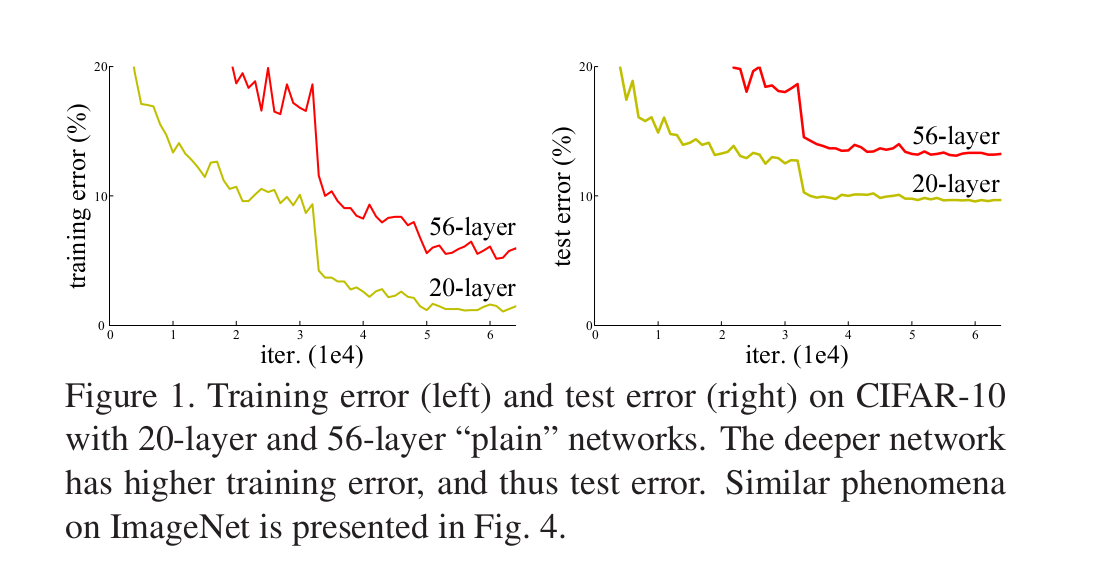

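- The degradation problem: as plain networks get deeper, accuracy saturates and then degrades. Because training error rises as well, this is not caused by overfitting, and the paper argues it is an optimization difficulty rather than vanishing gradients, which normalized initialization and batch normalization largely address.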

- Deeper networks are harder to train, yet depth has been key to leading results, such as those on the ImageNet dataset.

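- A deeper model has a solution by construction: copy the layers of a trained shallower model and make the added layers identity mappings. That model should have no higher training error than the shallower one, so the observed degradation suggests that solvers struggle to find such solutions.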

- Solvers may have difficulty approximating an identity mapping with a stack of many nonlinear layers, because those layers would have to reproduce their input exactly.

- The paper addresses the degradation problem by introducing a deep residual learning framework.

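- Instead of having stacked layers fit the desired mapping H(x) directly, ResNet has them fit the residual F(x) = H(x) - x, and a shortcut connection adds x back so the output is F(x) + x. The paper hypothesizes that this is easier to optimize: if the optimal mapping is close to identity, driving F(x) toward zero is easier than fitting an identity mapping with nonlinear layers.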

- In the article's PyTorch implementation of the residual block, `self.block(x)` computes the residual function F(x), and the original input x is added back to produce the final output.

- The loss is computed on the final output, and backpropagation updates only the parameters of the residual function F(x), since the identity shortcut has no parameters of its own.
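
The block described above can be sketched in PyTorch as follows. This is a minimal illustration, not the article's exact code: the layer choice (two 3x3 convolutions with batch normalization, following the paper's basic block), the class name `ResidualBlock`, the `channels` parameter, and the random regression target in the training step are all assumptions. It also assumes the input and output shapes match, so the identity shortcut can be added without a projection.

```python
import torch
import torch.nn as nn


class ResidualBlock(nn.Module):
    """Basic residual block: out = ReLU(F(x) + x) with an identity shortcut."""

    def __init__(self, channels: int) -> None:
        super().__init__()
        # Residual function F(x): conv -> BN -> ReLU -> conv -> BN.
        # A 3x3 kernel with padding=1 keeps height and width unchanged,
        # so F(x) has the same shape as x and the two can be added.
        self.block = nn.Sequential(
            nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(channels),
            nn.ReLU(inplace=True),
            nn.Conv2d(channels, channels, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(channels),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        residual = self.block(x)         # F(x), the learned residual
        return torch.relu(residual + x)  # F(x) + x via the identity shortcut, then ReLU


if __name__ == "__main__":
    torch.manual_seed(0)
    block = ResidualBlock(channels=16)
    x = torch.randn(2, 16, 8, 8)
    target = torch.randn(2, 16, 8, 8)  # hypothetical target, for illustration only

    optimizer = torch.optim.SGD(block.parameters(), lr=0.1)
    out = block(x)
    loss = nn.functional.mse_loss(out, target)  # loss is computed on the final output
    optimizer.zero_grad()
    loss.backward()   # gradients reach F's conv/BN parameters; the shortcut has none
    optimizer.step()
    print(out.shape)  # torch.Size([2, 16, 8, 8])
```

If the residual branch outputs zero, for example after setting the last BatchNorm layer's weight and bias to zero, the block computes ReLU(x), which equals x when x is the non-negative output of a previous block. This is the sense in which an identity mapping is easy for a residual block: the layers only need to drive F(x) toward zero.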
