Securityaffairs

Image Credit: Securityaffairs

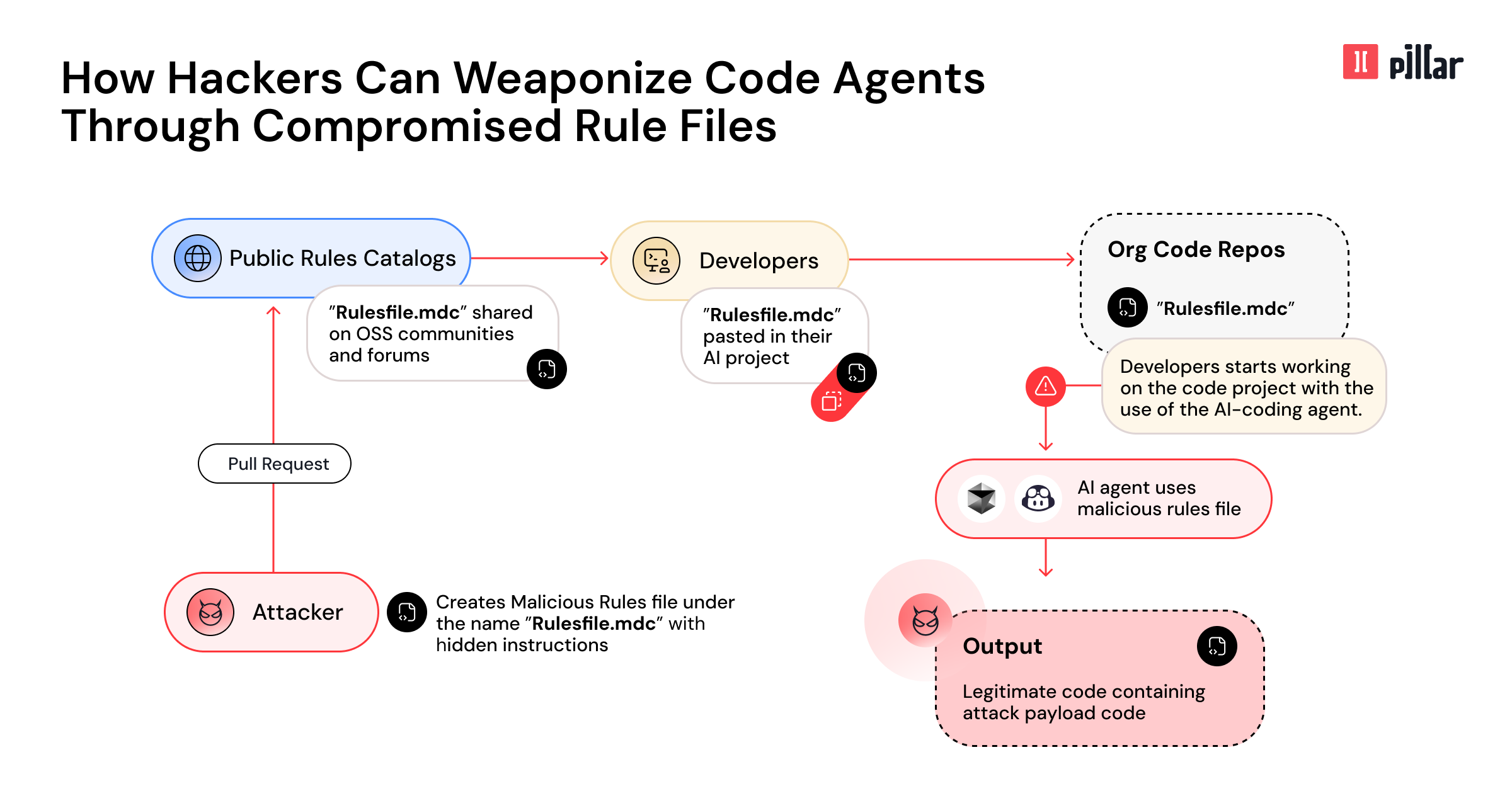

Rules File Backdoor: AI code editors exploited for silent supply chain attacks

- The 'Rules File Backdoor' attack targets AI code editors like GitHub Copilot and Cursor.

- Threat actors use hidden Unicode characters and other evasion techniques to inject malicious code that evades detection.

- The attack uses rule files to trick AI tools into generating code with security vulnerabilities or backdoors.

- Researchers published a video proof of concept demonstrating how instruction files can be used to manipulate AI-generated code.
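
As a rough illustration of the hidden-character point above, the sketch below scans a rule file's text for invisible Unicode format characters (general category `Cf`, which covers zero-width and bidirectional control characters). The function name `find_hidden_chars` and the sample string are hypothetical, and treating category `Cf` as "hidden" is an assumption made for this example, not the researchers' detection method.

```python
import unicodedata


def find_hidden_chars(text):
    """Return (line, column, codepoint, name) for each Unicode format character.

    Assumption: "hidden" means Unicode general category Cf (zero-width and
    bidirectional control characters, among others). This is a heuristic.
    """
    hits = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for col, ch in enumerate(line, start=1):
            if unicodedata.category(ch) == "Cf":
                name = unicodedata.name(ch, "UNKNOWN")
                hits.append((line_no, col, f"U+{ord(ch):04X}", name))
    return hits


# Hypothetical rule-file content with two invisible characters embedded.
sample = "Always follow best practices.\u200b\u202eHidden directive"
for hit in find_hidden_chars(sample):
    print(hit)
```

Legitimate text can also contain format characters (for example, joiners in some scripts and emoji sequences), so a hit is a prompt for manual review rather than proof of tampering.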
