Medium


Image Credit: Medium

Safe & Secure Agentic AI: Insights from Prof. Dawn Song’s Lecture

- As AI systems grow more complex, AI safety mechanisms must be resilient to adversarial attacks.

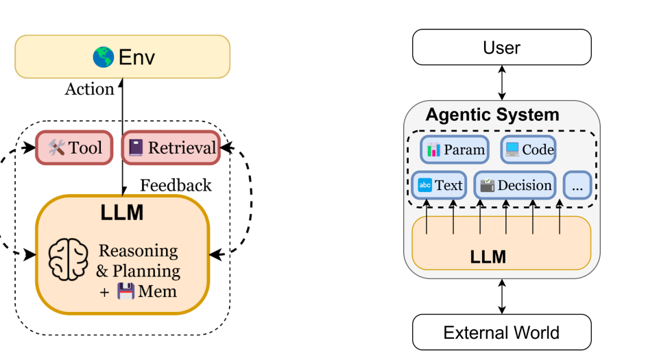

- The lecture by Prof. Dawn Song focused on the complexities and risks associated with Agentic AI, which combines traditional symbolic program components with non-symbolic neural components.

- Prof. Song highlighted vulnerabilities in the agentic workflow, emphasizing in particular that LLM-generated outputs can themselves act as attack vectors.

- To secure agentic AI, a multi-layered approach with practical defence mechanisms is crucial, so that the benefits of AI are realized responsibly and shared widely.
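
As a rough illustration of what treating LLM-generated output as untrusted input could look like, here is a minimal Python sketch of two stacked checks applied before an agent hands a model's tool request to its symbolic components. It is not taken from the lecture: the JSON shape, the tool names in `ALLOWED_TOOLS`, the 200-character limit, and the functions `parse_tool_call`, `check_arguments`, and `vet` are all hypothetical, chosen only to show the layering idea.

```python
import json
from dataclasses import dataclass

# Hypothetical allowlist: tool name -> exact set of argument names it accepts.
ALLOWED_TOOLS = {
    "search_docs": {"query"},
    "read_file": {"path"},
}


@dataclass(frozen=True)
class ToolCall:
    name: str
    args: dict


def parse_tool_call(llm_output: str) -> ToolCall:
    """Layer 1: parse strictly and reject anything outside the allowlist."""
    try:
        data = json.loads(llm_output)
    except json.JSONDecodeError as exc:
        raise ValueError("output is not valid JSON") from exc
    if not isinstance(data, dict):
        raise ValueError("output is not a JSON object")
    name = data.get("tool")
    args = data.get("args")
    if not isinstance(name, str) or name not in ALLOWED_TOOLS:
        raise ValueError(f"tool not allowed: {name!r}")
    if not isinstance(args, dict) or set(args) != ALLOWED_TOOLS[name]:
        raise ValueError("unexpected or missing arguments")
    return ToolCall(name, args)


def check_arguments(call: ToolCall) -> ToolCall:
    """Layer 2: value-level checks (assumed limits, for illustration only)."""
    for key, value in call.args.items():
        if not isinstance(value, str) or len(value) > 200:
            raise ValueError(f"argument {key!r} is not a short string")
    if call.name == "read_file":
        path = call.args["path"]
        # Reject absolute paths and parent-directory traversal.
        if path.startswith("/") or ".." in path:
            raise ValueError("path escapes the sandbox")
    return call


def vet(llm_output: str) -> ToolCall:
    """Run both layers; only a call that passes every check is returned."""
    return check_arguments(parse_tool_call(llm_output))
```

A caller would execute a tool only on the `ToolCall` returned by `vet` and treat any `ValueError` as a refused request. Checks like these are only one layer; they do not replace sandboxing, least-privilege credentials, or monitoring.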
