Medium

Image Credit: Medium

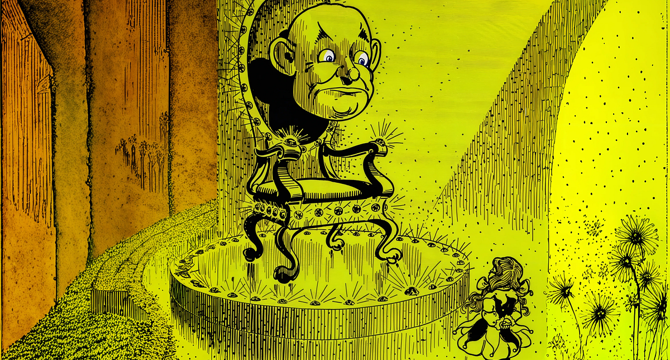

The secret life of AI operators

- An AI model processes input and produces an output in discrete steps, making it closer to a toaster than a living organism.

- AI models only produce probabilistic outputs that require software engineers to select the most desirable result.

- Agentic workflows allow generative AI to be integrated into program logic diagrams, but they still require human feedback and guidance.

- AI will remain dependent on humans to understand what a 'good' output is for the foreseeable future.

- An AI product's interface matters because human operators rely on it to operate the model efficiently.

- Successful AI implementations focus on designing a human-AI partnership rather than seeking to fully eliminate human operators.

- AI products require an explicit operator strategy, including whether operators will be remote employees, on-site staff, end users, or a combination of all three.

- A more nuanced understanding of human-AI collaboration is necessary, and the myth of fully autonomous AI systems needs to be dispelled.

- Quantifying quality for machines is the next great frontier and is critical to creating AGI.

- Because humans themselves do not agree on what counts as 'good' or 'bad', AI products will still require human operators for the foreseeable future.
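
The operator idea in the points above can be sketched in a few lines of Python. This is a minimal illustration, not the article's implementation: `sample_candidates` is a hypothetical stand-in for a generative model, and `judge` is a hypothetical callback representing the human operator's decision about what counts as a 'good' output.

```python
import random
from typing import Callable, List, Optional


def sample_candidates(prompt: str, n: int, seed: int = 0) -> List[str]:
    """Hypothetical stand-in for a model: returns n randomly sampled drafts."""
    rng = random.Random(seed)
    endings = ["short reply", "detailed reply", "off-topic reply"]
    return [f"{prompt} -> {rng.choice(endings)}" for _ in range(n)]


def operator_select(candidates: List[str], judge: Callable[[str], bool]) -> List[str]:
    """Keep only the candidates the human operator accepts."""
    return [c for c in candidates if judge(c)]


def run_step(prompt: str, judge: Callable[[str], bool],
             n: int = 5, seed: int = 0) -> Optional[str]:
    """One workflow step: sample drafts, then let the operator pick."""
    candidates = sample_candidates(prompt, n, seed)
    approved = operator_select(candidates, judge)
    # If the operator rejects every draft, return None so the caller can
    # escalate instead of shipping an unreviewed output.
    return approved[0] if approved else None


if __name__ == "__main__":
    # The lambda plays the operator here; in a real product this would be
    # a person working through a review interface.
    print(run_step("Summarize the ticket", lambda text: "off-topic" not in text))
```

The model only proposes; the definition of 'good' lives entirely in `judge`, which is why the operator strategy (remote staff, on-site staff, or end users) shapes the product.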
