Medium

Image Credit: Medium

Train Once, Run Everywhere: Is It True...

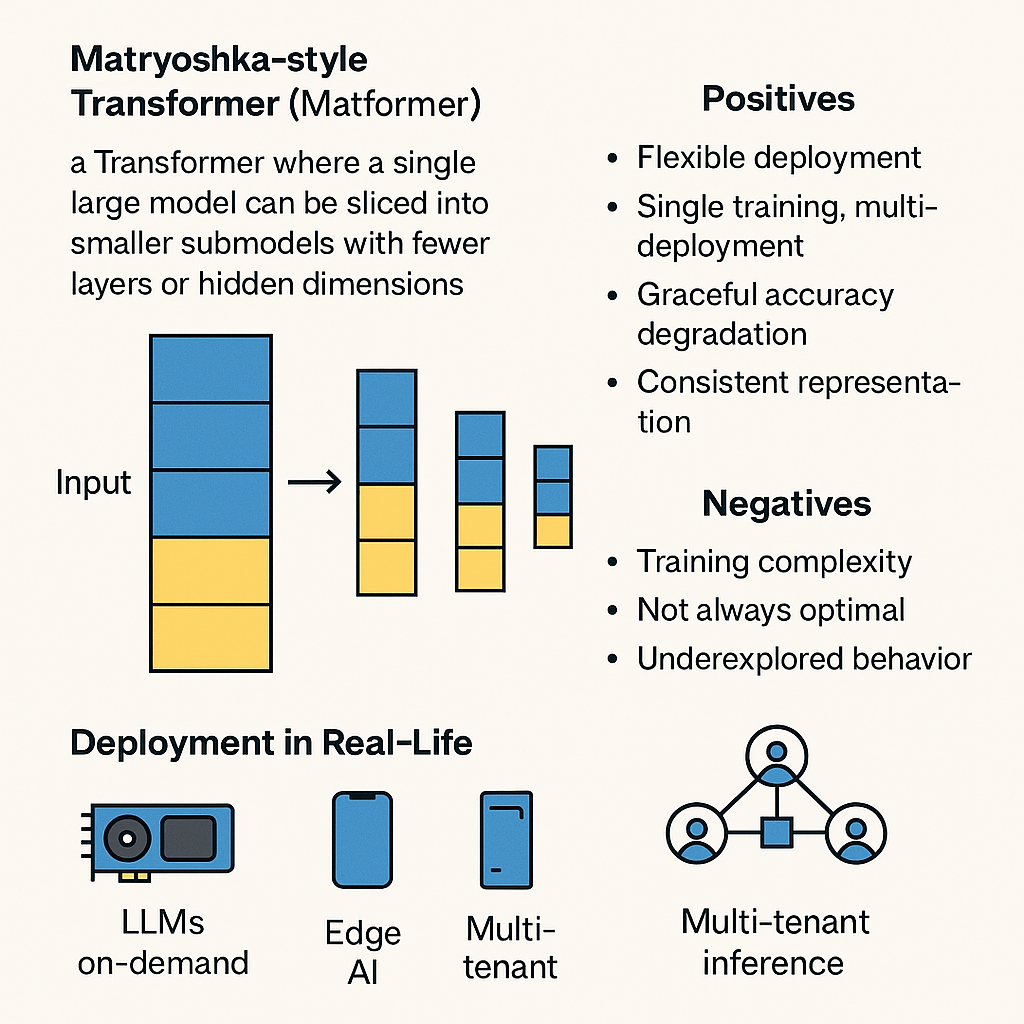

- Matformers, inspired by Matryoshka dolls, are Transformer models with a nested structure: smaller submodels can be sliced out of the full model for flexible deployment.

- Benefits of Matformers include flexible deployment, a single training run that serves multiple deployment targets, accuracy that degrades gracefully as slices get smaller, and consistent representations across slices.

- Challenges with Matformers include a more complex training procedure, performance trade-offs for the smaller slices, and largely unexplored behavior when combined with other techniques.

- Real-world applications of Matformers include deploying different slices for high-end GPUs, on-device inference, edge AI, and multi-tenant inference, which makes model deployment more scalable.
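The slicing idea in the first bullet can be sketched in plain Python. This is a minimal sketch, assuming the nesting is applied to the hidden units of a Transformer feed-forward (FFN) block, so a slice of width `m` reuses only the first `m` hidden units of the full layer. The class name `NestedFFN`, the ReLU activation, the absence of biases, and the toy sizes are illustrative assumptions, not details from the article.

```python
import random


class NestedFFN:
    """Toy FFN block whose smaller slices share the full block's weights.

    Hypothetical illustration: a slice of width m uses only the first m
    hidden units, i.e. the first m rows of w_in and the first m columns
    of w_out. No biases, to keep the example short.
    """

    def __init__(self, d_model, d_hidden, seed=0):
        rng = random.Random(seed)
        # w_in: d_hidden x d_model, w_out: d_model x d_hidden
        self.w_in = [[rng.uniform(-1, 1) for _ in range(d_model)] for _ in range(d_hidden)]
        self.w_out = [[rng.uniform(-1, 1) for _ in range(d_hidden)] for _ in range(d_model)]
        self.d_model = d_model
        self.d_hidden = d_hidden

    def forward(self, x, width=None):
        m = self.d_hidden if width is None else width
        if not 0 < m <= self.d_hidden:
            raise ValueError("width must be in 1..d_hidden")
        # ReLU activations for the first m hidden units only.
        h = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in self.w_in[:m]]
        # Project back using only the matching first m columns of w_out.
        return [sum(row[j] * h[j] for j in range(m)) for row in self.w_out]

    def param_count(self, width=None):
        # Weights used by a slice of the given width (no biases).
        m = self.d_hidden if width is None else width
        return 2 * m * self.d_model


ffn = NestedFFN(d_model=4, d_hidden=16)
x = [0.5, -1.0, 0.25, 2.0]
for m in (4, 8, 16):
    # Parameter count grows linearly with width: 32, 64, 128.
    print(m, ffn.param_count(m), [round(v, 3) for v in ffn.forward(x, width=m)])
```

Because every slice is a prefix of the full layer, one set of trained weights yields all submodels; nothing is copied or retrained to obtain a smaller one.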
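To illustrate the deployment bullet, here is a hypothetical helper that picks the largest slice whose weights fit a target's memory budget. The slice names and sizes, the 2 bytes per parameter (fp16), and the budgets are made-up assumptions for illustration, not figures from the article; a real deployment would also need to account for activations and KV-cache memory, which this sketch ignores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slice:
    name: str
    params: int  # parameter count of this nested submodel


# Hypothetical slices extracted from one trained model (smallest to largest).
SLICES = [
    Slice("S", 1_000_000_000),
    Slice("M", 2_000_000_000),
    Slice("L", 4_000_000_000),
    Slice("XL", 8_000_000_000),
]

GB = 1024 ** 3


def pick_slice(memory_budget_bytes, bytes_per_param=2, slices=SLICES):
    """Return the largest slice whose weights fit in the budget (weights only)."""
    fitting = [s for s in slices if s.params * bytes_per_param <= memory_budget_bytes]
    if not fitting:
        raise ValueError("no slice fits the memory budget")
    return max(fitting, key=lambda s: s.params)


for target, budget in [("high-end GPU", 80 * GB), ("on-device", 8 * GB), ("edge", 3 * GB)]:
    print(target, "->", pick_slice(budget).name)
# high-end GPU -> XL
# on-device -> L
# edge -> S
```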
