Medium

Image Credit: Medium

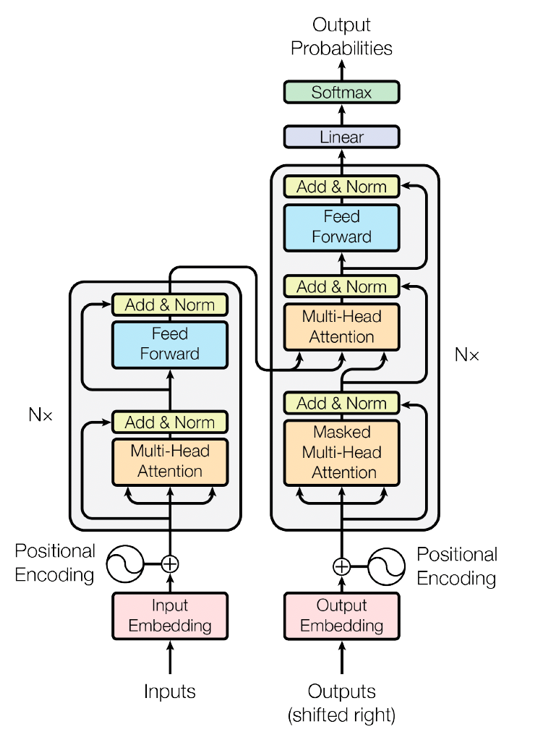

Transformers in Artificial Intelligence?

- Embeddings convert words or other data into numerical vectors, so that algorithms can capture relationships and context as distances and directions between those vectors.

- Transformers add a positional encoding to each token embedding so that the model retains the order of the input tokens, which self-attention alone does not capture.

- A Transformer model stacks several transformer blocks, each of which uses self-attention to capture contextual relationships between words.

- To make a prediction, a Transformer passes its learned vector representations through a linear layer and then the softmax function, which turns the raw scores into a probability distribution over the possible outputs.
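
The four steps above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a real model: the `EMBEDDINGS` table and the `W` and `b` weights are made-up numbers, the sinusoidal positional encoding is one common choice among several, and the attention step uses the input vectors directly as queries, keys, and values instead of learned projections.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def positional_encoding(position, dim):
    """Sinusoidal encoding: sine on even indices, cosine on odd indices."""
    return [
        math.sin(position / 10000 ** (i / dim)) if i % 2 == 0
        else math.cos(position / 10000 ** ((i - 1) / dim))
        for i in range(dim)
    ]

def self_attention(vectors):
    """Scaled dot-product self-attention with Q = K = V = vectors.

    Simplification: a real transformer block applies learned projections
    to obtain the queries, keys, and values.
    """
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)  # how much this token attends to each token
        outputs.append([
            sum(w * value[j] for w, value in zip(weights, vectors))
            for j in range(dim)
        ])
    return outputs

def linear(vector, weight, bias):
    """Linear layer: one output score per row of the weight matrix."""
    return [
        sum(w * x for w, x in zip(row, vector)) + b
        for row, b in zip(weight, bias)
    ]

# Hypothetical 4-dimensional embeddings for a 3-word vocabulary.
EMBEDDINGS = {
    "the": [0.1, 0.3, 0.0, 0.2],
    "cat": [0.9, 0.1, 0.4, 0.0],
    "sat": [0.2, 0.8, 0.1, 0.5],
}
tokens = ["the", "cat", "sat"]

# 1-2. Look up each embedding and add its positional encoding.
inputs = [
    [e + p for e, p in zip(EMBEDDINGS[tok], positional_encoding(pos, 4))]
    for pos, tok in enumerate(tokens)
]

# 3. Mix information across tokens with self-attention.
contextual = self_attention(inputs)

# 4. Project the last token's vector to vocabulary scores, then softmax.
W = [  # hypothetical weights, one row per vocabulary word
    [0.5, -0.2, 0.1, 0.0],
    [-0.3, 0.4, 0.2, 0.1],
    [0.0, 0.1, -0.1, 0.3],
]
b = [0.0, 0.0, 0.0]
probs = softmax(linear(contextual[-1], W, b))
print(dict(zip(EMBEDDINGS, probs)))
```

The printed dictionary assigns each vocabulary word a probability, and the probabilities sum to 1. With untrained, made-up weights the values carry no meaning; the sketch only shows how the pieces connect.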
