Medium

Image Credit: Medium

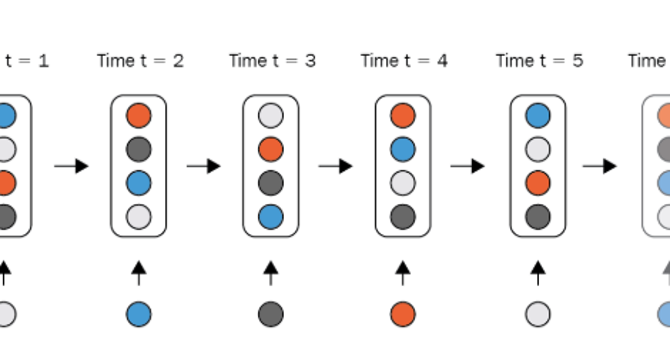

Understanding Activation Functions and the Vanishing Gradient Problem

- Deep neural networks face the vanishing gradient problem: backpropagation multiplies per-layer derivatives together, so gradients can become very small by the time they reach the earliest layers.

- Activation functions like Sigmoid and Tanh saturate for large-magnitude inputs, where their derivatives are close to zero (the Sigmoid derivative never exceeds 0.25), hindering weight updates in earlier layers.

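- Choosing the right activation function is crucial for the performance of deep learning models: ReLU and Leaky ReLU mitigate the vanishing gradient issue in hidden layers, while Softmax is typically reserved for the output layer of multi-class classifiers rather than used as a remedy for vanishing gradients.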

- Understanding activation functions and their impact can assist in designing more effective and accurate neural networks.
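
To make the shrinking effect concrete, here is a minimal sketch in plain Python. It is an illustration under simplifying assumptions, not a real training loop: every layer is assumed to see the same pre-activation value and to have a weight of 1, so the backpropagated gradient reduces to the local derivative raised to the power of the depth. The helper name `chained_gradient` and the Leaky ReLU slope `alpha=0.01` are choices made for this example.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    # d/dx sigmoid(x) = s * (1 - s); its maximum is 0.25 at x = 0.
    s = sigmoid(x)
    return s * (1.0 - s)


def tanh_grad(x: float) -> float:
    # d/dx tanh(x) = 1 - tanh(x)^2; its maximum is 1 at x = 0.
    return 1.0 - math.tanh(x) ** 2


def relu_grad(x: float) -> float:
    # ReLU passes the gradient through unchanged for positive inputs.
    return 1.0 if x > 0 else 0.0


def leaky_relu_grad(x: float, alpha: float = 0.01) -> float:
    # Leaky ReLU keeps a small slope (alpha, assumed here) for negative inputs.
    return 1.0 if x > 0 else alpha


def chained_gradient(local_grad, pre_activation: float, depth: int) -> float:
    # Simplifying assumption: identical pre-activation and unit weights in
    # every layer, so the chain rule collapses to one derivative ** depth.
    return local_grad(pre_activation) ** depth


if __name__ == "__main__":
    depth = 10
    for name, grad_fn, x in [
        ("sigmoid", sigmoid_grad, 0.0),  # best case for sigmoid
        ("tanh", tanh_grad, 2.0),        # moderately saturated tanh
        ("relu", relu_grad, 2.0),        # active ReLU unit
    ]:
        print(f"{name:8s} x={x:+.1f} depth={depth}: "
              f"{chained_gradient(grad_fn, x, depth):.3e}")
```

Even in Sigmoid's best case (an input of 0), ten layers scale the gradient by 0.25 to the tenth power, which is below one millionth, while an active ReLU unit leaves it at 1. Real networks also multiply by the weights at each layer, so this sketch isolates only the activation function's contribution.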
